Introduction to Routing GPT and Gemini for Commercial Impact

Table of Contents

- Introduction to Routing GPT and Gemini for Commercial Impact

- Designing a Tiered Model Strategy for GPT, Gemini, and Open Source

- Rules Based vs Judge LLM Routing Strategies

- Minimal API Routing Across GPT 5.2 and Gemini

- KPIs and Dashboards to Measure Routing ROI

- When Open Source Models Beat GPT and Gemini on Cost

- Productivity, Resilience, and Trade Offs vs Single Model Setups

- Model Portfolio Roles and Typical Savings Patterns

- Enterprise Adoption Patterns and Automation of Routing Decisions

- Conclusion and Next Steps

Routing GPT and Gemini models is about choosing the right AI engine for each job so you get maximum performance without burning your budget. For Australian businesses rolling out generative AI into products, support, or internal tools, that choice now has real financial impact. With multiple premium, mid-tier, and open source models available, simply sending everything to the most powerful option is no longer sustainable, especially once you compare options using up-to-date frontier AI model pricing benchmarks.

This article walks through a practical approach to routing GPT and Gemini for commercial and transactional use cases. We will cover tiered model strategies, rules-based versus judge LLM routers, minimal API implementations, and the KPIs you need to track to prove ROI. You will also see where open source models make more sense, and how enterprises are building portfolio-based model stacks rather than betting on a single provider, a pattern echoed in modern multi‑LLM routing strategies.

Designing a Tiered Model Strategy for GPT, Gemini, and Open Source

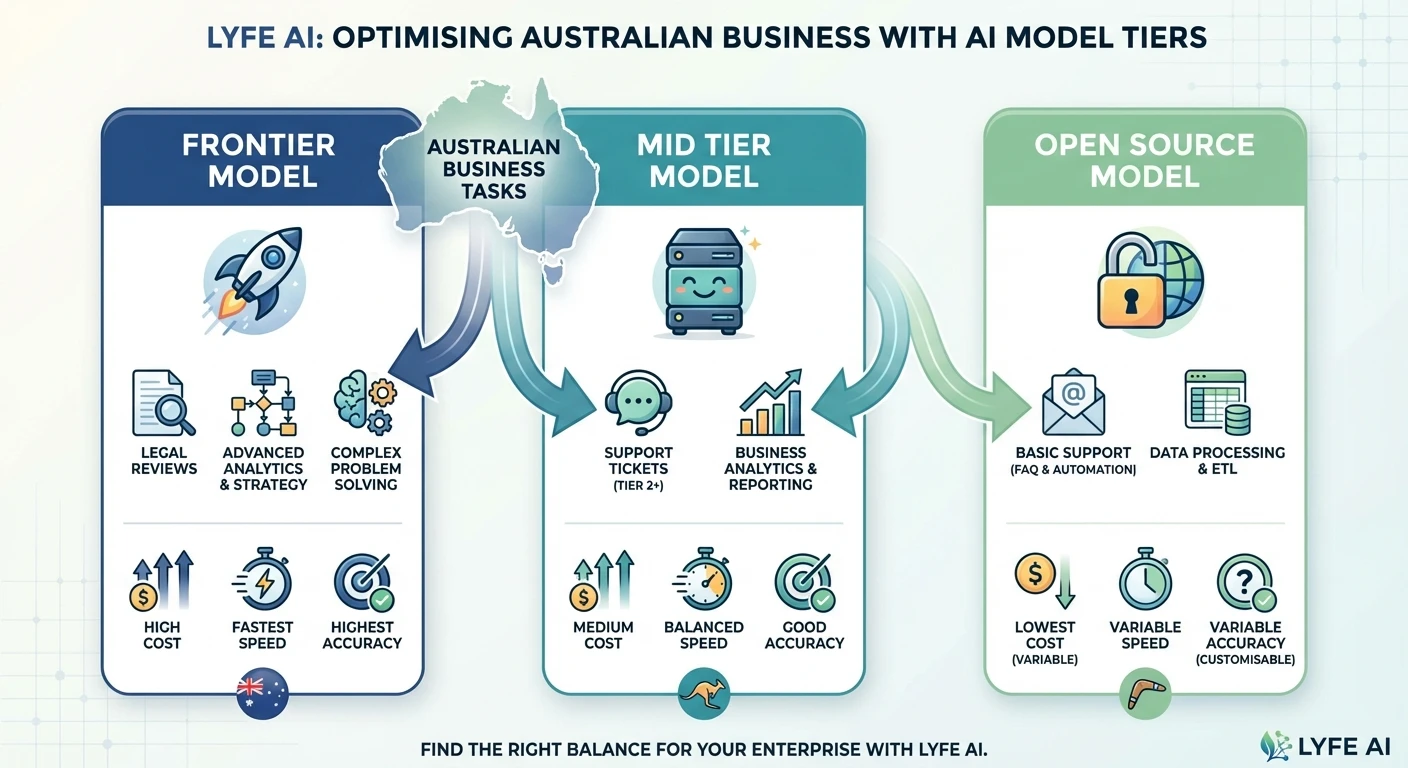

A robust routing strategy starts with a clear tiered model portfolio. One practical pattern uses three tiers. First are frontier or premium models like GPT‑5.2 (including GPT‑5.2 Pro), Claude Opus, and Gemini 3 Pro, which are designed for the hardest, highest‑value tasks—things like complex multi‑step legal analysis, sophisticated code generation, or high‑stakes customer decisions where accuracy really matters. These are your precision tools, not your everyday hammers, and guides like GPT‑5.2 vs Gemini 3 Pro comparisons help clarify where each belongs.

The second tier is made up of mid-tier proprietary models such as Claude Haiku or Sonnet, or Gemini Flash-like variants. These models handle moderately complex routine work, such as standard customer support, document drafting, and common analytics queries. They are cheaper than frontier models and usually fast enough, while still maintaining strong quality for typical business workflows in Australian teams, especially when paired with automation‑focused custom AI models tuned to your domain.

The third tier comprises fast, cheap mini models or open source systems like Llama or Mistral. These shine for narrow, repetitive tasks such as classification, spam detection, sentiment analysis, routing, and templated replies. When these models are fine-tuned on your domain data, they can sometimes match or even beat frontier systems on that single task while costing 50 to 80 percent less. A well-tuned router will aim to push 60 to 80 percent of overall volume into the mid and fast tiers. It will send only 20 to 40 percent of queries to frontier models, where there is a clear quality or risk advantage, an allocation echoed in several multi‑model usage studies.
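To make the tiers concrete, you can write the portfolio down as data and check a month of request counts against the 60 to 80 percent cheaper-tier and 20 to 40 percent frontier split. The minimal Python sketch below does this under stated assumptions. The model identifiers are placeholders, not real API model names. The helpers `tier_of`, `tier_shares`, and `meets_allocation_target` are hypothetical.

```python
# Placeholder model identifiers grouped by tier; substitute the real model
# names your providers expose. Nothing here calls an API.
TIERS = {
    "frontier": ["gpt-5.2", "gpt-5.2-pro", "gemini-3-pro", "claude-opus"],
    "mid": ["claude-sonnet", "claude-haiku", "gemini-flash"],
    "fast": ["llama-finetuned", "mistral-finetuned"],
}


def tier_of(model: str) -> str:
    """Look up which tier a model belongs to."""
    for tier, models in TIERS.items():
        if model in models:
            return tier
    raise KeyError(f"unknown model: {model}")


def tier_shares(request_counts: dict) -> dict:
    """Share of total requests handled by each tier."""
    total = sum(request_counts.values())
    if total == 0:
        return {tier: 0.0 for tier in request_counts}
    return {tier: n / total for tier, n in request_counts.items()}


def meets_allocation_target(request_counts: dict,
                            min_cheaper_share: float = 0.60,
                            max_frontier_share: float = 0.40) -> bool:
    """True if mid + fast tiers carry at least 60% and frontier at most 40%."""
    shares = tier_shares(request_counts)
    cheaper = shares.get("mid", 0.0) + shares.get("fast", 0.0)
    frontier = shares.get("frontier", 0.0)
    return cheaper >= min_cheaper_share and frontier <= max_frontier_share
```

For example, a split of 25 frontier, 35 mid, and 40 fast requests per hundred passes the check, while a 50/30/20 split does not.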

For Australian organisations, this mix aligns well with typical workloads. Think of an energy retailer using open source models for internal ticket triage, Gemini Flash for customer summaries, and GPT‑5.2 Pro for regulatory or legal edge cases. The commercial value comes from consciously shaping that traffic distribution instead of letting every request default to the most expensive option, ideally under a managed portfolio such as Lyfe AI’s advisory and implementation services.

Rules Based vs Judge LLM Routing Strategies

Once you have your tiers, you need a way to decide which query goes where. The simplest approach is a rules-based router. In this model, you define explicit logic. For example, if input_tokens is greater than 10,000 and use_case equals summary, send the request to Gemini 3 Pro. If use_case equals complex_code, send it to GPT‑5.2. You might also encode risk policies: if customer_tier equals enterprise, never use the cheapest models. This style is transparent and easy to audit. That makes it ideal for small to mid-sized Australian enterprises that need to show clear governance and align with formal AI usage terms and conditions.
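A minimal Python sketch of these rules might look like the following. The `Request` fields, the use-case strings, and the model identifiers are illustrative assumptions, not a provider API. Every decision is an explicit, auditable branch, and the enterprise risk policy runs last so it can override any earlier rule.

```python
from dataclasses import dataclass

# Placeholder model identifiers; map them to your providers' real names.
GPT_52 = "gpt-5.2"
GEMINI_3_PRO = "gemini-3-pro"
GEMINI_FLASH = "gemini-flash"
CHEAPEST = "llama-finetuned"


@dataclass
class Request:
    use_case: str            # e.g. "summary", "complex_code", "tagging"
    input_tokens: int
    customer_tier: str = "standard"


def route_by_rules(req: Request) -> str:
    """Evaluate explicit rules top to bottom; the first match wins."""
    if req.input_tokens > 10_000 and req.use_case == "summary":
        model = GEMINI_3_PRO
    elif req.use_case == "complex_code":
        model = GPT_52
    elif req.use_case in {"classification", "tagging", "spam"}:
        model = CHEAPEST
    else:
        model = GEMINI_FLASH
    # Risk policy applied last: enterprise customers never get the cheapest tier.
    if req.customer_tier == "enterprise" and model == CHEAPEST:
        model = GEMINI_FLASH
    return model
```

Because the rules are plain code, they can be reviewed in a pull request and unit tested, which is much of their governance appeal. The same property is why they need manual updates when models or prices change.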

The downside is that these routers do not adapt automatically as models change. If a new Gemini variant improves its coding performance, or GPT pricing changes, someone still has to update the rules by hand. Over time those if statements can become a tangle, especially when you support multiple products or markets.

Judge LLM or semantic routing takes a different path. Here, a small model acts as a classifier that inspects each request and assigns it to a category such as SIMPLE, COMPLEX, MULTIMODAL_HIGH, or MULTIMODAL_LOW. The classification might depend on factors like text length, domain, required accuracy, and whether the input includes images, audio, or video. Each class is then mapped to a particular model. For instance, SIMPLE goes to Gemini Flash, COMPLEX to GPT‑5.2, and MULTIMODAL_HIGH to Gemini 3 Pro. Platforms such as Langflow show this User Query → Judge Agent → Router → Specialised Agent pattern on a visual canvas. It can also be coded in Python or Node, much like in build‑your‑own GPT‑5 style routing examples.
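The judge pattern can be sketched in plain Python without committing to any particular SDK. In the version below, `judge` is a hypothetical callable that sends a prompt to whichever small classifier model you use and returns its raw reply. The prompt wording, labels, and model identifiers are assumptions for illustration. Normalising the label and falling back to a safe default matters because small models do not always answer in the exact format requested.

```python
from typing import Callable, Tuple

# Class-to-model mapping from the text; model identifiers are placeholders.
CLASS_TO_MODEL = {
    "SIMPLE": "gemini-flash",
    "COMPLEX": "gpt-5.2",
    "MULTIMODAL_HIGH": "gemini-3-pro",
    "MULTIMODAL_LOW": "gemini-flash",
}

JUDGE_PROMPT = (
    "Classify the request as exactly one of: SIMPLE, COMPLEX, "
    "MULTIMODAL_HIGH, MULTIMODAL_LOW. Reply with the label only.\n"
    "Has attachments: {has_media}\n"
    "Request: {text}"
)


def route_with_judge(text: str, has_media: bool,
                     judge: Callable[[str], str],
                     default_model: str = "gpt-5.2") -> Tuple[str, str]:
    """Ask the judge for a label, then map it to a model.

    Returns (label, model). Unknown labels fall back to default_model.
    """
    raw = judge(JUDGE_PROMPT.format(has_media=has_media, text=text))
    label = raw.strip().upper()
    return label, CLASS_TO_MODEL.get(label, default_model)


def fake_judge(prompt: str) -> str:
    """Stand-in for a real classifier call, for local testing only."""
    if "Has attachments: True" in prompt:
        return "MULTIMODAL_LOW"
    if "legal" in prompt.lower():
        return " complex\n"  # deliberately untidy output
    return "SIMPLE"
```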

In practice, many teams start with rules-based routing and then introduce a judge agent for more nuanced decisions. It is entirely reasonable to combine the two, using rules for hard guardrails such as compliance constraints, and a judge model for softer performance choices. This hybrid pattern delivers more flexibility without giving up traceability, an important balance for regulated industries in Australia that lean on secure, governed AI practices.
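One way to express the hybrid pattern is to run hard rules both before and after the judge. Each decision also returns a reason string, so the route can be audited later. This sketch rests on three assumptions. The PII and regulated flags are supplied by your application. `judge` is any callable that returns a label. `llama-onprem` stands in for a hypothetical self-hosted model.

```python
from typing import Callable, Tuple

# Placeholder model identifiers.
CHEAP_MODELS = {"gemini-flash", "llama-finetuned"}
LABEL_TO_MODEL = {"SIMPLE": "gemini-flash", "COMPLEX": "gpt-5.2"}
SAFE_DEFAULT = "gpt-5.2"


def hybrid_route(text: str, contains_pii: bool, regulated: bool,
                 judge: Callable[[str], str]) -> Tuple[str, str]:
    """Return (model, reason). Rules are guardrails; the judge makes soft choices."""
    # Hard rule before the judge: personal data stays on the self-hosted model.
    if contains_pii:
        return "llama-onprem", "rule:pii_stays_onprem"
    label = judge(text).strip().upper()
    model = LABEL_TO_MODEL.get(label, SAFE_DEFAULT)
    # Hard rule after the judge: regulated work is never sent to a cheap tier.
    if regulated and model in CHEAP_MODELS:
        return SAFE_DEFAULT, f"rule:regulated_override(judge={label})"
    return model, f"judge:{label}"
```

Logging the reason alongside the model gives auditors a trail showing whether a rule or the judge made each call.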

Minimal API Routing Across GPT 5.2 and Gemini

You do not need a huge platform rebuild to get started with routing GPT and Gemini. A minimal Python router can sit between your application and the AI providers, making decisions at the API layer. One common design uses simple heuristics to classify tasks. Useful signals include text length, the presence of keywords like "legal" or "financial", and patterns such as "explain" or "what is" that hint at general knowledge queries. These signals help the router decide which model is most appropriate, and closely mirror the pragmatic approaches described in cost‑efficient LLM routing blueprints.

For example, you might send high-risk or very general queries to GPT‑5.2, or to GPT‑5.2 Pro for the highest stakes. Long-context tasks like summarising large reports, contracts, or multimodal content could default to Gemini 3 Pro, which handles extended inputs more comfortably. Short explanatory or FAQ-style tasks can go to a cheaper Gemini Flash variant that returns answers quickly and keeps your per-request cost low.
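These heuristics can be sketched as a small classifier plus a lookup table. The length threshold, keyword list, regular expressions, and model identifiers are assumptions to tune against your own traffic, not recommended values. In production, the same function would sit behind your API layer.

```python
import re
from typing import Tuple

# Placeholder model identifiers for each task type.
MODEL_FOR_TASK = {
    "long_context": "gemini-3-pro",
    "high_risk": "gpt-5.2",
    "faq": "gemini-flash",
    "general": "gpt-5.2",
}

LONG_CONTEXT_CHARS = 20_000          # assumed threshold, tune from logs
RISK_WORDS = re.compile(r"\b(legal|financial)\b", re.IGNORECASE)
FAQ_OPENERS = re.compile(r"^\s*(explain|what is|what are)\b", re.IGNORECASE)


def classify_task(text: str) -> str:
    """Cheap heuristic classification; the order of checks is a policy choice."""
    if len(text) > LONG_CONTEXT_CHARS:
        return "long_context"
    if RISK_WORDS.search(text):
        return "high_risk"
    if FAQ_OPENERS.search(text) and len(text) < 300:
        return "faq"
    return "general"


def choose_model(text: str) -> Tuple[str, str]:
    """Return (task_type, model) for a piece of request text."""
    task = classify_task(text)
    return task, MODEL_FOR_TASK[task]
```

Here length wins over keywords, so a very long legal contract still goes to the long-context model. Swap the first two checks if risk should take priority. The word-boundary pattern avoids false matches on words such as "paralegal" or "illegal".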

This minimal router centralises logic and collects valuable telemetry. Each call logs the chosen task type, selected model, token usage, and latency. Over time, that data becomes the foundation for tuning your routing rules, checking whether some tasks can safely move down a tier, and spotting cost creep before the finance team does. A sensible default is to fall back to GPT‑5.2 if classification fails or an unexpected case appears. That gives you safety while you iterate, particularly if you align model choices with a documented GPT‑5.2 cost and routing framework.
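The telemetry and fallback behaviour can be sketched as a thin wrapper. `classify`, `model_for_task`, and `call_model` are hypothetical hooks. `call_model(model, prompt)` stands for an adapter over your provider SDKs that returns the response text plus input and output token counts. Any failure in classification or lookup routes to GPT‑5.2 and is flagged in the log record.

```python
import time
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

FALLBACK_MODEL = "gpt-5.2"  # placeholder identifier for the safe default


@dataclass
class CallRecord:
    task_type: str
    model: str
    input_tokens: int
    output_tokens: int
    latency_ms: float
    fell_back: bool


def routed_call(prompt: str,
                classify: Callable[[str], str],
                model_for_task: Dict[str, str],
                call_model: Callable[[str, str], Tuple[str, int, int]],
                log: List[CallRecord]) -> str:
    """Route one request, falling back on any classification problem, and log it."""
    try:
        task = classify(prompt)
        model = model_for_task[task]
        fell_back = False
    except Exception:
        # Unknown task types or classifier errors go to the safe default.
        task, model, fell_back = "unclassified", FALLBACK_MODEL, True
    start = time.perf_counter()
    text, input_tokens, output_tokens = call_model(model, prompt)
    latency_ms = (time.perf_counter() - start) * 1000
    log.append(CallRecord(task, model, input_tokens, output_tokens,
                          latency_ms, fell_back))
    return text
```

In production you would write the log records to your metrics store rather than an in-memory list, but the fields stay the same.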

Because this approach lives at the API layer, it can be introduced gradually. An Australian SaaS startup might first wrap their support chatbot, measure cost and quality improvements, then extend the router to analytics tools and internal knowledge assistants. This stepwise rollout keeps risk low while you prove value in real production scenarios, and can be supported end‑to‑end by professional AI implementation services.

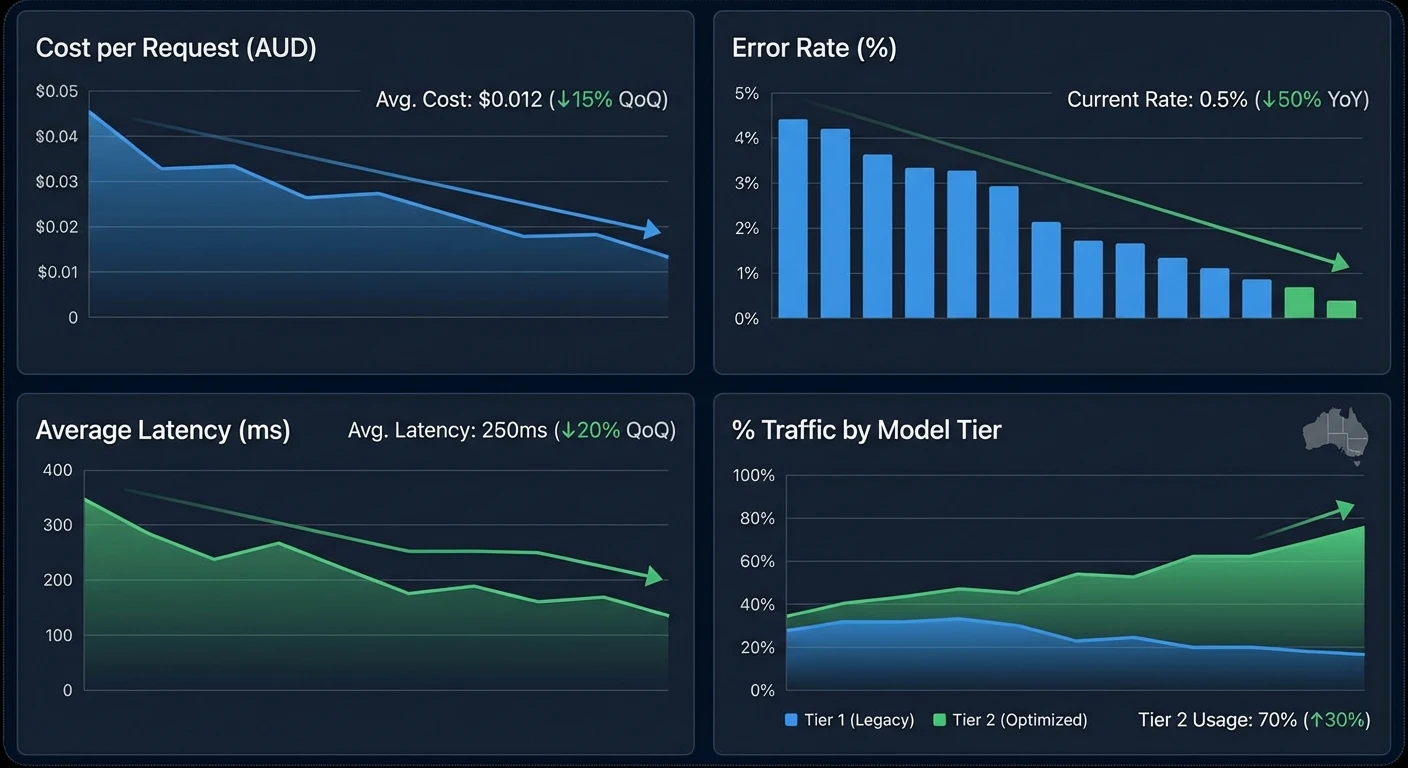

KPIs and Dashboards to Measure Routing ROI

To justify routing investments, you need solid metrics. A well-designed dashboard will track cost per 1k tokens by route and model, covering GPT‑5.2, Gemini, and any open source deployments. This lets you see not only the headline price differences but also which tasks are actually driving your spend. Average cost per request and total monthly AI cost should also be front and centre for finance teams, ideally mapped against reference benchmarks from multi‑provider pricing comparisons.
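Cost per 1k tokens and average cost per request fall out of the same call logs. The sketch below assumes each record carries a model name plus input and output token counts. The price table is a hypothetical placeholder; fill it in from your providers' current price lists rather than using these numbers.

```python
from collections import defaultdict
from typing import Dict, Iterable

# Hypothetical prices per 1k tokens; replace with current provider rates.
PRICE_PER_1K = {
    "gpt-5.2": {"input": 0.010, "output": 0.030},
    "gemini-flash": {"input": 0.001, "output": 0.004},
}


def request_cost(model: str, input_tokens: int, output_tokens: int) -> float:
    """Cost of one request, pricing input and output tokens separately."""
    price = PRICE_PER_1K[model]
    return (input_tokens * price["input"] + output_tokens * price["output"]) / 1000


def cost_summary(records: Iterable[dict]) -> Dict[str, dict]:
    """Aggregate spend, cost per 1k tokens, and average cost per request by model."""
    spend = defaultdict(float)
    tokens = defaultdict(int)
    requests = defaultdict(int)
    for r in records:
        model = r["model"]
        spend[model] += request_cost(model, r["input_tokens"], r["output_tokens"])
        tokens[model] += r["input_tokens"] + r["output_tokens"]
        requests[model] += 1
    return {
        model: {
            "total_cost": spend[model],
            "cost_per_1k_tokens": 1000 * spend[model] / tokens[model] if tokens[model] else 0.0,
            "avg_cost_per_request": spend[model] / requests[model],
        }
        for model in spend
    }
```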

Quality signals matter just as much. Human rated scores for response usefulness, plus task metrics like bug resolution rate for engineering assistants, customer satisfaction and first contact resolution for support bots, or lead conversion for sales tools, show whether routing decisions are helping or hurting outcomes. Latency metrics such as p50 and p95 response times per model reveal whether cheaper routes are slowing users down.
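For the latency side, p50 and p95 per model can be computed from the same logs. This sketch assumes the simple nearest-rank percentile definition on in-memory lists. Most BI tools and metrics stores offer their own percentile functions, often interpolated, which will give slightly different numbers on small samples.

```python
import math
from collections import defaultdict
from typing import Dict, Iterable, List, Tuple


def percentile(values: List[float], pct: float) -> float:
    """Nearest-rank percentile (pct between 0 and 100) of a non-empty list."""
    if not values:
        raise ValueError("percentile of an empty list is undefined")
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def latency_by_model(records: Iterable[Tuple[str, float]]) -> Dict[str, Dict[str, float]]:
    """Group (model, latency_ms) pairs and report p50 and p95 per model."""
    grouped = defaultdict(list)
    for model, latency_ms in records:
        grouped[model].append(latency_ms)
    return {
        model: {"p50": percentile(vals, 50), "p95": percentile(vals, 95)}
        for model, vals in grouped.items()
    }
```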

Routing distribution is another key view. You want clear visibility into the percentage of calls and tokens going to each model. Add a guardrail that keeps frontier models under a set share, perhaps below 30 percent of volume depending on your margins. Failure or escalation rates, where a query must be re-run on a stronger model, highlight where you may have been too aggressive in sending work to cheaper tiers.
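A routing-distribution view with the frontier guardrail and escalation rate might be computed like this. The frontier model set, the 30 percent cap, and the `escalated` flag are assumptions about what your logs record. The flag is set when a cheaper model's answer had to be re-run on a stronger one. Escalation here is measured only over calls that did not start on a frontier model.

```python
from collections import Counter
from typing import List

FRONTIER_MODELS = {"gpt-5.2", "gpt-5.2-pro", "gemini-3-pro"}  # placeholder ids


def routing_report(calls: List[dict], frontier_cap: float = 0.30) -> dict:
    """calls: dicts with "model", "tokens", and "escalated" (bool)."""
    n = len(calls)
    if n == 0:
        raise ValueError("no calls to report on")
    by_calls = Counter(c["model"] for c in calls)
    by_tokens = Counter()
    for c in calls:
        by_tokens[c["model"]] += c["tokens"]
    total_tokens = sum(by_tokens.values())
    frontier_share = sum(by_calls[m] for m in FRONTIER_MODELS) / n
    cheaper = [c for c in calls if c["model"] not in FRONTIER_MODELS]
    escalations = sum(1 for c in cheaper if c["escalated"])
    return {
        "call_share": {m: k / n for m, k in by_calls.items()},
        "token_share": {m: (t / total_tokens if total_tokens else 0.0)
                        for m, t in by_tokens.items()},
        "frontier_share": frontier_share,
        "frontier_over_cap": frontier_share > frontier_cap,
        "escalation_rate": escalations / len(cheaper) if cheaper else 0.0,
    }
```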

A straightforward return on investment formula compares what it would have cost to run all traffic on a single premium model against the actual routed cost. The resulting monthly savings percentage gives leadership a simple number to understand. To keep improving, you can run A/B tests of routing strategies using traffic splits, perhaps 80 to 90 percent control and 10 to 20 percent experiment. Tag the responses and analyse the results in your usual BI tools, or feed those logs into intelligent multi‑model routing frameworks for automated optimisation.
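The ROI comparison and a stable traffic split each take only a few lines. In this sketch the baseline prices every token at a single premium model's rate. That is a simplification, since real bills separate input and output tokens. `assign_arm` hashes a user or session ID so each user consistently sees the same routing strategy. The function names and the 15 percent default are illustrative assumptions.

```python
import hashlib
from typing import Tuple


def routing_savings(total_tokens: int, premium_price_per_1k: float,
                    actual_routed_cost: float) -> Tuple[float, float]:
    """Compare actual routed spend with an all-premium baseline.

    Returns (savings, savings_percent).
    """
    baseline = total_tokens / 1000 * premium_price_per_1k
    savings = baseline - actual_routed_cost
    return savings, 100 * savings / baseline


def assign_arm(user_id: str, experiment_share: float = 0.15) -> str:
    """Deterministically place a user in 'control' or 'experiment'."""
    digest = hashlib.sha256(user_id.encode("utf-8")).hexdigest()
    bucket = int(digest[:8], 16) / 2**32  # roughly uniform in [0, 1)
    return "experiment" if bucket < experiment_share else "control"
```

With illustrative figures, one billion tokens at 0.02 per 1k gives a baseline of 20,000. A routed bill of 8,000 is then a saving of 12,000, or 60 percent.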

When Open Source Models Beat GPT and Gemini on Cost

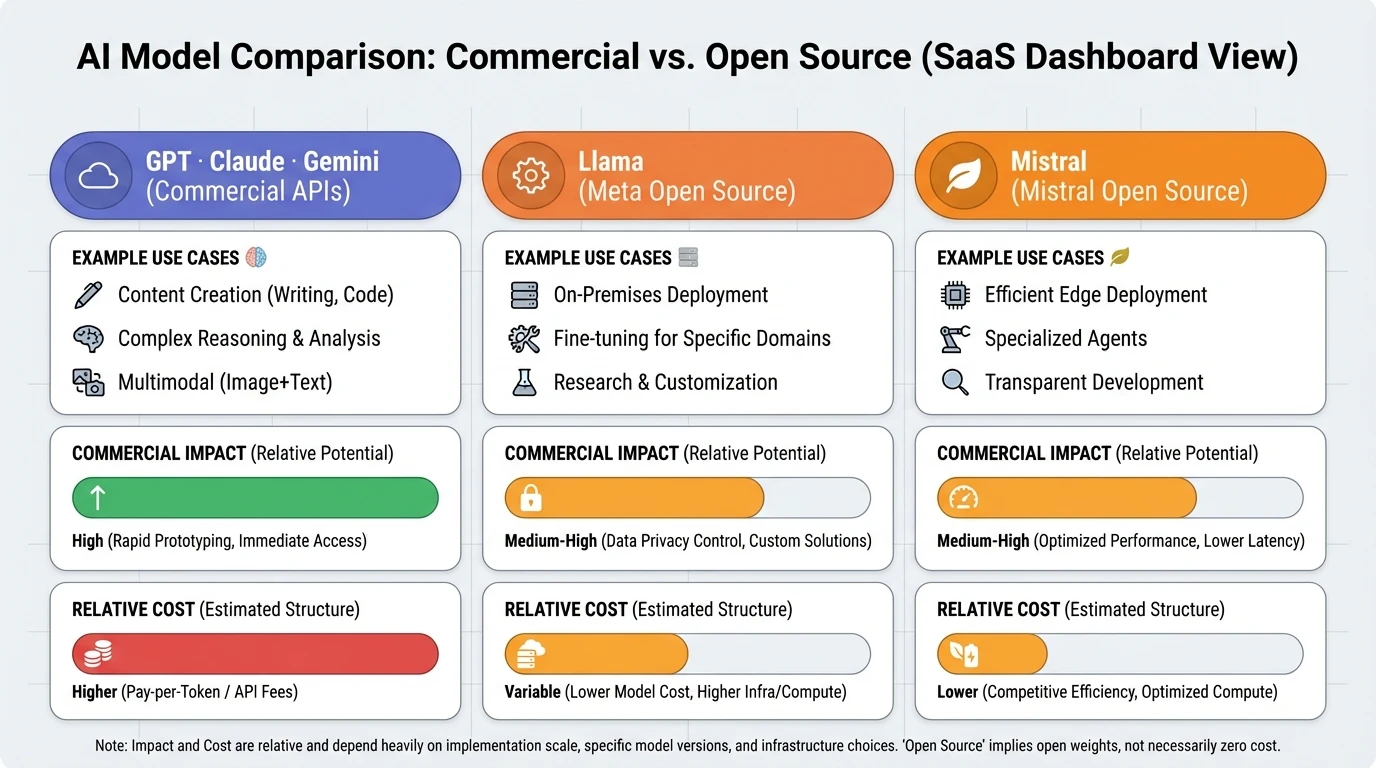

Routing is not just about shuffling traffic between proprietary models. In many cases, open source systems provide a better commercial outcome. Models such as Llama and Mistral can deliver very strong performance on narrow, repetitive tasks when fine tuned on your own data. Classification, spam filtering, sentiment analysis, and tagging are classic examples where the problem space is tight and predictable.

The economics become compelling when your volume is high and stable enough to justify the infrastructure, usually upwards of 50 to 100 million tokens per month for a single task. At that scale, a fine-tuned small model can match or even exceed a frontier system on that specific workload while cutting costs by half or more. For internal tasks that never leave your network, self-hosting also reduces data privacy concerns, which can be important in sectors like health or government in Australia.
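The volume threshold comes from a simple break-even calculation. Self-hosting pays off once the per-token saving covers the fixed monthly cost of the infrastructure. The sketch below solves for that volume. The prices and fixed cost in the example are invented for illustration, not quotes, and the calculation deliberately ignores staffing and quality costs.

```python
from typing import Tuple


def breakeven_tokens_per_month(fixed_monthly_cost: float,
                               api_price_per_1k: float,
                               selfhost_price_per_1k: float) -> float:
    """Monthly tokens T at which T/1000 * api = fixed + T/1000 * selfhost."""
    margin = api_price_per_1k - selfhost_price_per_1k
    if margin <= 0:
        raise ValueError("self-hosting never breaks even without a per-token saving")
    return 1000 * fixed_monthly_cost / margin


def monthly_costs(tokens: int, fixed_monthly_cost: float,
                  api_price_per_1k: float,
                  selfhost_price_per_1k: float) -> Tuple[float, float]:
    """Return (api_cost, selfhost_cost) for a given monthly token volume."""
    api_cost = tokens / 1000 * api_price_per_1k
    selfhost_cost = fixed_monthly_cost + tokens / 1000 * selfhost_price_per_1k
    return api_cost, selfhost_cost
```

Take a fixed cost of 3,000 a month, an API price of 0.05 per 1k tokens, and a self-hosted marginal cost of 0.01 per 1k tokens. With those inputs, break-even lands at 75 million tokens a month, inside the range quoted above.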

A sensible routing plan might send the bulk of repetitive internal tasks to these open source models running on local infrastructure, while keeping GPT‑5.2 and Gemini reserved for complex, variable tasks that need broader knowledge and stronger reasoning. This approach often results in 60 percent or greater savings on those narrow workloads alone. It also frees up budget to invest in better user experiences or additional AI initiatives, something that bespoke automation solutions can amplify.

Of course, there are trade-offs. You take on more operational responsibility, including scaling, monitoring, and updating models. Some experts argue that the headline savings can evaporate once you factor in the real cost of running and maintaining local infrastructure at scale. Between GPU procurement, observability tooling, security hardening, and a constant stream of model updates, teams can find themselves trading API spend for DevOps overhead and architectural complexity. In heavily regulated environments, the compliance work required to keep on-prem models aligned with evolving policies can also offset much of the expected efficiency gain. And if the open source stack underperforms on accuracy or reliability, the downstream costs of manual review, incident response, and user churn can quickly dwarf the initial savings. In other words, the right strategy is not just about cutting inference bills. It is about rigorously modelling total cost of ownership and risk before assuming a 60 percent win.

That said, with modern deployment stacks and container orchestration, many tech-savvy Australian startups already have most of the skills in house. For them, plugging fine-tuned models into the router is a natural extension of current DevOps practice, particularly when guided by a secure Australian AI assistant partner that understands local constraints.
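Modelling total cost of ownership means adding the fixed operational lines, and a penalty for any extra errors the self-hosted model makes, to the raw inference cost. Every figure in this sketch is an input you would have to estimate for your own organisation. The `SelfHostTCO` class and its fields are hypothetical, not a standard model.

```python
from dataclasses import dataclass


@dataclass
class SelfHostTCO:
    """Monthly cost inputs for a self-hosted model (all values are estimates)."""
    gpu_and_hosting: float
    ops_staff: float             # share of DevOps/MLOps time
    tooling_and_security: float  # observability, hardening, patching
    compliance: float
    inference_per_1k: float      # marginal cost per 1k tokens

    def monthly_cost(self, tokens: int, requests: int,
                     extra_error_rate: float, cost_per_error: float) -> float:
        fixed = (self.gpu_and_hosting + self.ops_staff
                 + self.tooling_and_security + self.compliance)
        variable = tokens / 1000 * self.inference_per_1k
        # Extra errors relative to the API model, each costing review or incident time.
        quality_penalty = requests * extra_error_rate * cost_per_error
        return fixed + variable + quality_penalty


def net_savings_pct(api_monthly: float, selfhost_monthly: float) -> float:
    """Positive means self-hosting is cheaper; negative means it costs more."""
    return 100 * (api_monthly - selfhost_monthly) / api_monthly
```

Consider these inputs:

- Fixed costs of 6,000 a month: 2,000 hosting, 3,000 staff, 500 tooling, and 500 compliance.
- Inference at 0.01 per 1k tokens.
- A 0.1 percent extra error rate at 5 per error.
- An API price of 0.05 per 1k tokens.

At 100 million tokens and 200,000 requests, that comes to 8,000 self-hosted against 5,000 on the API, a 60 percent loss. At one billion tokens and two million requests, it becomes 26,000 against 50,000, a 48 percent saving. The numbers are invented. The point is the shape of the result: the same stack can lose or win depending on volume.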

Productivity, Resilience, and Trade Offs vs Single Model Setups

Compared with sending every request to a single large model, multi-model routing can deliver striking gains. Studies of similar architectures show productivity improvements in the 20 to 40 percent range, as teams match task difficulty with the right level of AI capability and speed. Developers ship features faster, support agents handle more tickets per hour, and analysts automate a bigger slice of their reporting work, patterns also highlighted in forward‑looking SMB AI model guides.

Routing also improves resilience. If one provider suffers an outage or regional slowdown, your router can temporarily shift traffic to another model, keeping core workflows alive. This is particularly important for Australian companies that may face latency challenges due to distance from some cloud regions. With a portfolio of providers, you can route more calls to models that are hosted closer to your users.
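Provider failover can be sketched as an ordered list of adapters tried in turn. `ProviderError` and the adapter functions are hypothetical. Real SDKs raise their own exception types for timeouts, rate limits, and outages, which you would map onto one error class. Putting providers with Australian or nearby regions first is one way to express the latency preference. A production version would also add timeouts and circuit breakers.

```python
from typing import Callable, List, Sequence, Tuple


class ProviderError(Exception):
    """Raised by an adapter on an outage, timeout, or rate limit."""


def call_with_failover(prompt: str,
                       providers: Sequence[Tuple[str, Callable[[str], str]]]
                       ) -> Tuple[str, str]:
    """Try providers in preference order and return (provider_name, response)."""
    errors: List[str] = []
    for name, call in providers:
        try:
            return name, call(prompt)
        except ProviderError as exc:
            # Record the failure and move on to the next provider.
            errors.append(f"{name}: {exc}")
    raise ProviderError("all providers failed: " + "; ".join(errors))
```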

There are downsides. Every routing decision introduces some overhead, both in latency and in system complexity. However, careful engineering and caching can sharply reduce that cost. Reported cache hit rates of around 92 percent show that many requests can be served without touching a model at all, especially repeated queries like standard help centre questions. The matrix factorisation router in RouteLLM demonstrates that expensive GPT‑4-class calls can be cut to roughly 14 to 26 percent of traffic while keeping quality stable. That leads to 75 to 85 percent overall cost reductions, very much in line with the savings reported in multi‑model routing case studies.
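The caching layer in front of the router can start as an exact-match cache keyed on a normalised prompt. This sketch has clear limits. It catches only repeats that differ in case or whitespace, and it keeps entries in memory with a time-to-live. It does not implement semantic (embedding-based) caching or RouteLLM's learned routers, which are separate techniques.

```python
import hashlib
import time
from typing import Callable, Dict, Tuple


class ResponseCache:
    """Exact-match response cache keyed on a normalised prompt, with a TTL."""

    def __init__(self, ttl_seconds: float = 3600.0):
        self.ttl = ttl_seconds
        self._store: Dict[str, Tuple[float, str]] = {}
        self.hits = 0
        self.misses = 0

    @staticmethod
    def _key(prompt: str) -> str:
        # Lower-case and collapse whitespace so trivial variants share a key.
        normalised = " ".join(prompt.lower().split())
        return hashlib.sha256(normalised.encode("utf-8")).hexdigest()

    def get_or_call(self, prompt: str, call: Callable[[str], str]) -> str:
        """Return a fresh cached response if present, otherwise call the model."""
        key = self._key(prompt)
        now = time.monotonic()
        entry = self._store.get(key)
        if entry is not None and now - entry[0] < self.ttl:
            self.hits += 1
            return entry[1]
        self.misses += 1
        response = call(prompt)
        self._store[key] = (now, response)
        return response

    def hit_rate(self) -> float:
        total = self.hits + self.misses
        return self.hits / total if total else 0.0
```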

The big picture is that routing gives you more levers to pull. Instead of debating whether a single model is good enough, you can tune combinations of models, caching, and retrieval to reach your preferred point on the cost-performance curve. In a market as dynamic as AI, that flexibility is a competitive asset in itself, and forms a core design principle of enterprise‑grade AI delivery services.

Model Portfolio Roles and Typical Savings Patterns

In many enterprise setups, each model family takes on a distinct role in the portfolio. GPT‑5.2 is often designated as the heavy hitter for complex, high-stakes workloads such as detailed technical design, multi-step reasoning, and tricky edge cases in customer conversations. It is the most capable but also the most expensive option. Routing policies therefore treat it as a scarce resource, to be used when it clearly moves the needle, guided by detailed comparisons such as OpenAI O‑series model analyses.

Gemini, with its strong multimodal abilities and cost-efficient inference, often becomes the default for tasks that mix text with images, audio, or other media. It is also a smart choice for medium-complexity chat experiences where you want good reasoning without frontier-level pricing. Open source models then fill in for non-reasoning or light-reasoning workloads, including search, tagging, and simple content generation, where they can win on cost while delivering acceptable quality.

A common pattern routes around 70 percent of simple queries to open source models, achieving cost savings of roughly 60 percent on that traffic. The remainder is split between Gemini for multimodal and mid-complexity work and GPT for the hard stuff. Different routing configurations naturally yield different savings:

- Sending only the easiest tasks to small models might deliver 10 to 30 percent savings.
- More advanced routing using frameworks like RouteLLM or managed services like Amazon Bedrock can claim between 30 and 80 percent.
- Mixture-of-experts-style routing, where multiple specialised models compete or collaborate, has reported around 43 percent savings.
- Policies that move 60 to 80 percent of volume to open source models can drive 60 to 80 percent cost reductions overall.

These figures come from several multi‑model evaluation reports.
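Savings on one slice of traffic and savings overall are different numbers, and a short calculation shows how they relate. The sketch below expresses each route's per-request cost as a fraction of the premium baseline. The route names and relative costs are illustrative assumptions, not measured prices.

```python
from typing import Dict


def blended_savings(mix: Dict[str, float], relative_cost: Dict[str, float]) -> float:
    """Overall savings versus sending every request to the premium baseline.

    mix: share of requests per route (shares must sum to 1).
    relative_cost: each route's per-request cost as a fraction of the
    baseline model's per-request cost (the baseline itself is 1.0).
    """
    if abs(sum(mix.values()) - 1.0) > 1e-9:
        raise ValueError("route shares must sum to 1")
    blended = sum(share * relative_cost[route] for route, share in mix.items())
    return 1.0 - blended
```

Suppose 70 percent of requests move to an open source route that is 60 percent cheaper per request, and the other 30 percent stay on the premium model. Overall savings are then 0.7 × 0.6 = 42 percent. Sending part of that remainder to a cheaper Gemini tier raises the total, which is one way a mixed portfolio can reach higher overall figures.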

For Australian businesses, the right balance depends on margin expectations, regulatory risk, and in-house technical skills. But the numbers make one thing clear. Treating your models as a portfolio rather than a monolith is no longer a nice-to-have. It is a serious cost optimisation strategy, and one that can be systematically implemented with specialist AI portfolio management services.

Enterprise Adoption Patterns and Automation of Routing Decisions

Larger enterprises are already moving in this direction. Recent industry reports indicate that a growing share of them now run multiple models in production and manage them as a portfolio tuned to specific use cases and risk profiles. Instead of manually wiring every decision, many are adopting dynamic model selection. In this approach, platforms like Azure evaluate complexity and cost in real time when choosing which backend to call, mirroring the dynamic routing foundations behind many GPT‑5.2 vs Gemini deployment playbooks.

Some teams go further, using ensembles such as LLM Synergy that combine outputs from multiple models with weighted voting to boost accuracy by close to 19 percent. Others rely on load-balancing techniques such as CHWBL (consistent hashing with bounded loads), which keeps related requests on the same model replica so cached context can be reused. Some reports credit this with reducing time to first token for end users by up to 95 percent. These techniques sit on top of routing, using it as a base capability to orchestrate several models at once.
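Weighted voting, the general idea behind such ensembles, is easy to sketch for tasks with short categorical answers such as multiple-choice or classification labels. This is a generic weighted majority vote, not the specific LLM Synergy method. The weights (for example, each model's historical accuracy on your evaluation set) and the model names are assumptions. Free-text answers would need a different aggregation step.

```python
from collections import defaultdict
from typing import Dict


def weighted_vote(answers: Dict[str, str], weights: Dict[str, float]) -> str:
    """Return the answer with the highest total weight across models.

    answers maps model name -> its (short, categorical) answer; weights maps
    model name -> vote weight. Answers are compared case- and
    whitespace-insensitively. Ties go to the answer seen first.
    """
    if not answers:
        raise ValueError("need at least one answer")
    scores: Dict[str, float] = defaultdict(float)
    for model, answer in answers.items():
        scores[answer.strip().lower()] += weights.get(model, 0.0)
    return max(scores, key=scores.get)
```

In the example below, two weaker models that agree (combined weight 1.1) outvote a single stronger model (weight 0.9).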

Data platforms such as Databricks add another layer by training routing agents on logs and human feedback. Over time, these agents learn which patterns of queries are best served by which models, continuously refining routing decisions with minimal manual intervention. For enterprises in Australia, this automated learning loop fits neatly into existing data lake and analytics strategies, turning past interactions into better future decisions, much like the learning‑loop structures described in AWS multi‑LLM routing patterns.
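A very simple version of that learning loop computes, from logged outcomes, the cheapest model that meets a success threshold for each query category. This is a hypothetical sketch, not how Databricks or any other platform trains routing agents. `learn_routing_table` and its thresholds are illustrative. The sketch also assumes each log row carries a category and a pass/fail signal from human feedback or an automated evaluator.

```python
from collections import defaultdict
from typing import Dict, Iterable, Tuple


def learn_routing_table(logs: Iterable[Tuple[str, str, bool]],
                        cost_rank: Dict[str, int],
                        min_success: float = 0.9,
                        min_samples: int = 20) -> Dict[str, str]:
    """For each category, pick the cheapest model whose logged success rate
    is at least min_success, ignoring pairs with fewer than min_samples rows.

    logs: (category, model, succeeded) rows from human or automated review.
    cost_rank: model -> rank, where a lower rank means a cheaper model.
    Categories with no qualifying model are omitted, so the caller's
    default route handles them.
    """
    counts = defaultdict(lambda: [0, 0])  # (category, model) -> [successes, total]
    for category, model, succeeded in logs:
        counts[(category, model)][0] += int(succeeded)
        counts[(category, model)][1] += 1
    table: Dict[str, str] = {}
    for (category, model), (successes, total) in counts.items():
        if total < min_samples or successes / total < min_success:
            continue
        current = table.get(category)
        if current is None or cost_rank[model] < cost_rank[current]:
            table[category] = model
    return table
```

Rerunning this on fresh logs each week is one way to let well-performing categories drift down to cheaper models without hand-editing rules.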

The takeaway is simple. Routing is not a static exercise in drawing flowcharts. It is an evolving system that can leverage experimentation, ensembles, and learning from logs to keep improving. The sooner you start capturing the right signals, the more options you will have down the track—and the easier it becomes to plug in future models surfaced via resources like Lyfe AI’s continually updated AI insights.

Conclusion and Next Steps

Routing GPT and Gemini, alongside open source models, is fast becoming a core capability for any business that takes AI seriously. By structuring your models into clear tiers, mixing rules based and judge LLM routing, tracking the right KPIs, and selectively adopting open source, you can unlock substantial savings without sacrificing quality. You also gain resilience and flexibility, which matter when models and prices change every few months, as documented in many frontier pricing and capability reviews.

If your Australian organisation is still relying on a single model for every use case, now is the time to rethink that approach. Start small, perhaps with a minimal API router on one workflow, then expand as you see the benefits. The companies that learn to treat models as a managed portfolio will be the ones that turn AI from a cost centre into a durable advantage. That is especially true when they partner with a secure Australian AI assistant provider that can guide model selection, routing design, and long-term optimisation.

Frequently Asked Questions

What does routing GPT and Gemini actually mean for my business?

Routing GPT and Gemini means automatically deciding which AI model handles each request based on complexity, risk, and cost. Instead of sending everything to the most expensive frontier model, you use a mix of premium, mid‑tier, and open source models so high‑value tasks get top models and routine tasks use cheaper ones. This improves performance while controlling spend. For Australian businesses, this can materially change AI operating costs at scale.

How do I set up a tiered model strategy using GPT, Gemini and open source models?

Start by grouping your AI use cases into three buckets: high‑stakes/complex, routine/medium complexity, and narrow/repetitive tasks. Map frontier models (like GPT‑5.2 Pro or Gemini 3 Pro) to the first bucket, mid‑tier models (Claude Sonnet/Haiku, Gemini Flash variants) to the second, and mini or open source models (Llama, Mistral) to the third. Then define routing rules or a router model that sends each request to the right tier based on task type and risk. LYFE AI can help design this portfolio and implement routing logic in your stack.

When should I pay for GPT‑5.2 or Gemini 3 Pro instead of using cheaper models?

Use GPT‑5.2 or Gemini 3 Pro for high‑value, high‑risk work where errors are expensive—complex legal or financial reasoning, advanced code generation, and high‑stakes customer decisions. They are also best when tasks require nuanced multi‑step thinking or synthesis across large document sets. For routine support, drafting, or simple analytics, mid‑tier or tuned open source models are usually good enough and far cheaper. A routing strategy ensures only the small percentage of truly critical calls hit premium models.

How can I reduce my generative AI costs without hurting quality?

The most effective way is to introduce routing and a tiered model strategy rather than relying on a single frontier model. Push narrow, repetitive tasks like classification, spam detection, and templated replies to fine‑tuned open source or mini models, and use mid‑tier models for standard business workflows. Reserve GPT‑5.2 or Gemini 3 Pro for complex, high‑value cases only. LYFE AI uses model pricing benchmarks and KPI tracking to tune this balance so you see measurable cost savings with minimal quality loss.

What is the difference between rules based routing and a judge LLM router?

Rules based routing uses clear if‑then logic (for example, route all tickets with amount > $10k to a frontier model) based on metadata and task type. A judge LLM router, on the other hand, uses a small model to read the prompt or context and decide which downstream model is best for this specific request. Rules are simpler, cheaper, and more transparent; judge routers are more flexible and can adapt to edge cases but add some latency and complexity. Many enterprises use a hybrid: hard rules for obvious cases and a judge LLM for ambiguous ones.

How do I decide between GPT‑5.2 vs Gemini 3 Pro for my commercial use cases?

Compare them on your actual workloads across four dimensions: reasoning quality, latency, integration fit with your stack, and effective cost per successful output. Some teams find GPT‑5.2 stronger on code and complex reasoning, while Gemini 3 Pro can be compelling on multimodal and Google‑adjacent workflows, but the differences are use‑case specific. Run A/B tests on a sample of your real prompts and measure task success, not just raw token price. LYFE AI runs these evaluations and advises which model or mix is best for your environment.

How do enterprises track ROI and KPIs for multi‑LLM routing strategies?

Typical KPIs include cost per 1,000 successful tasks, first‑pass resolution rate, average handle time, and quality scores from human review or automated evaluation. Companies also monitor premium model usage percentage, latency per tier, and error or escalation rates when cheaper models are used. By comparing these metrics before and after routing, you can quantify savings and quality changes. LYFE AI sets up this KPI framework and reporting so finance and product teams can see clear ROI.

Can open source models like Llama or Mistral really replace frontier models in production?

For narrow, well‑defined tasks—classification, sentiment analysis, routing, FAQ replies—fine‑tuned open source models often match or even beat frontier models at a fraction of the cost. They are less suited, out of the box, to very broad or highly complex reasoning tasks, but shine when you have good domain data and a clear objective. Many enterprises now run open source models for 60–80% of traffic and reserve GPT or Gemini for the remaining critical cases. LYFE AI helps with fine‑tuning, deployment, and safe integration of these models.

What is a minimal viable API implementation for routing GPT and Gemini in my app?

A minimal setup usually includes a single routing service that receives user requests, applies routing rules or calls a judge model, and then forwards the request to the chosen GPT, Gemini, or open source endpoint. It tags each call with metadata (model, cost estimate, latency, outcome) and logs the response for evaluation. You don’t need a complex orchestration layer to start—just a lightweight router, a few clear rules, and monitoring. LYFE AI can implement this pattern inside your existing backend or as a standalone service.

How can LYFE AI help my Australian business implement GPT and Gemini routing?

LYFE AI designs a tailored tiered model strategy based on your use cases, risk profile, and budget, using current frontier AI pricing benchmarks. We then implement routing (rules based, judge LLM, or hybrid), integrate GPT, Gemini, and open source models into your stack, and set up KPIs and dashboards to track performance and ROI. Our team can also fine‑tune domain‑specific models to offload work from premium providers. This gives you a commercially optimised, multi‑LLM portfolio instead of a single‑vendor dependency.