Introduction: Why Advanced Deep Research matters now

Table of Contents

- Introduction: Why Advanced Deep Research matters now

- Foundations: AI-first search, Deep Research, and hallucinations

- Under the hood: Perplexity’s AI-first Search API engine

- What makes Perplexity’s Deep Research “Advanced” in practice

- Cost, latency, and accuracy trade-offs for teams in Australia

- Australian use cases and regulated industries for Deep Research

- Practical steps to adopt Advanced Deep Research in your stack

- Conclusion and next steps

If you have been testing AI tools for serious work, you have probably hit the same wall: generic answers, dated information, and the occasional confident fabrication. This is exactly the problem Perplexity’s new Advanced Deep Research tier is designed to attack, using an AI-first search stack rather than a simple chatbot wrapped in a browser.

At LYFE AI, we are seeing a shift across Australian teams – from “fun demos” to production systems that must be accurate, cited, and auditable. Perplexity Advanced Deep Research sits in the middle of this shift. It combines a web-scale search API, span-level context selection, and multi-step reasoning to deliver long-form reports that are grounded in current sources instead of static model training data.

In this article, we will unpack the foundations of AI-first search, explain how Perplexity’s Search API powers Advanced Deep Research, explore its cost-latency-accuracy trade-offs, and walk through Australian scenarios where it shines. By the end, you will have a clear mental model for when – and how – to use Advanced Deep Research in your own AI workflows.

Foundations: AI-first search, Deep Research, and hallucinations

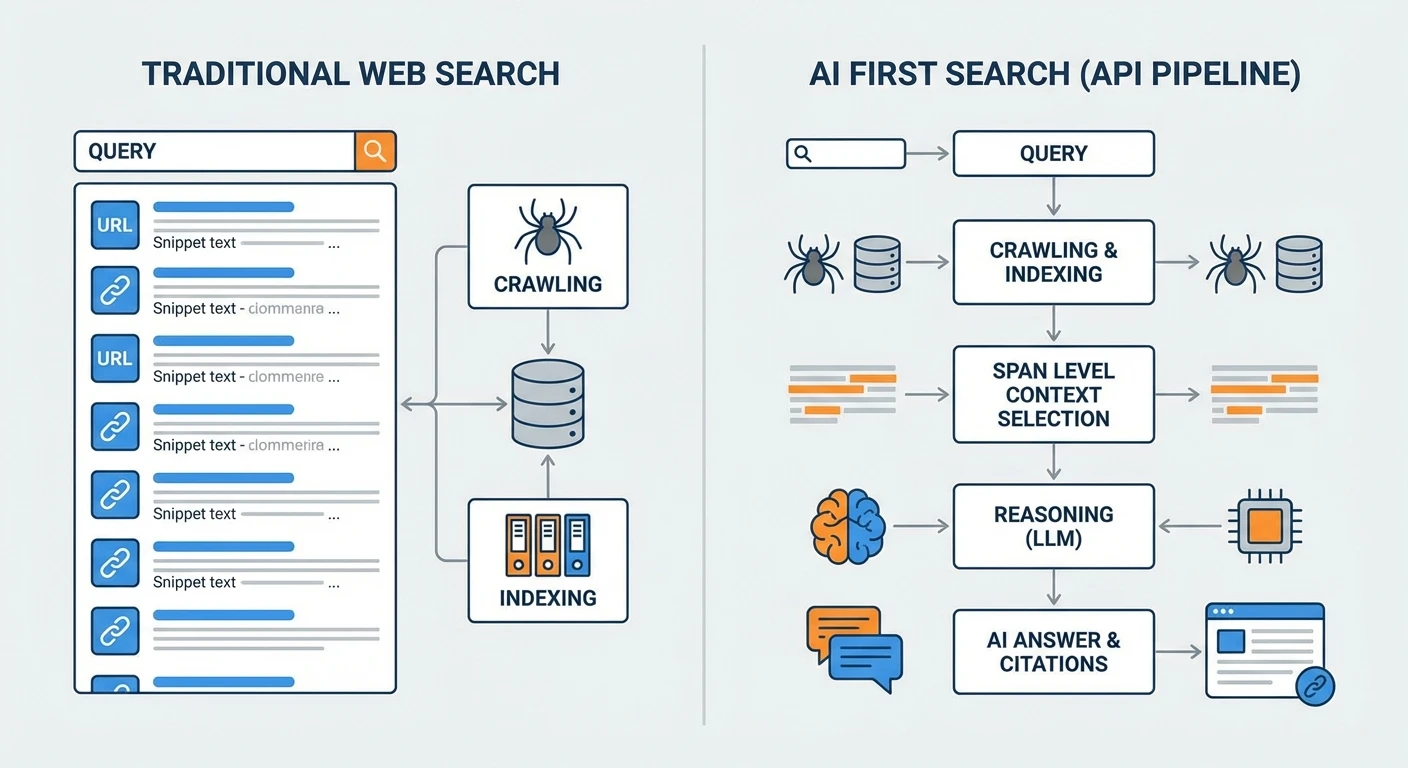

To understand what Perplexity Advanced Deep Research actually is, you first need a clean definition of AI-first search. Traditional web search was built for humans: return a list of links, let people click, skim, and decide what matters. AI-first search flips this. It is a search API built specifically for large language models and agents. It crawls and indexes the web, ranks results, and then packages only the most useful context in a format a model can consume and reason over.

Deep Research then builds on that AI-first foundation. Instead of running a single search and stuffing a few snippets into a prompt, a Deep Research system breaks your request into sub-questions, runs multiple rounds of search and reading, and then synthesises a structured answer. It chooses relevant sections or even tight spans of text from documents, checks them against each other, and exposes citations so you can see where claims came from.
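To make that loop concrete, here is a minimal Python sketch of the decompose, search, and requery pattern. It is not Perplexity's implementation. `decompose` and `search` are hypothetical callables you would back with an LLM and a search API, and the "(broader)" requery is a deliberately naive placeholder for a real query rewriter.

```python
from dataclasses import dataclass, field
from typing import Callable

# Hypothetical signatures: decompose(question) -> sub-questions,
# search(query) -> list of (snippet, source_url) pairs, best first.
Decompose = Callable[[str], list[str]]
Search = Callable[[str], list[tuple[str, str]]]


@dataclass
class Finding:
    sub_question: str
    snippet: str
    source_url: str


@dataclass
class Report:
    question: str
    findings: list[Finding] = field(default_factory=list)

    def render(self) -> str:
        """Write findings as bullet points with numbered citations."""
        lines = [f"Report: {self.question}"]
        for i, f in enumerate(self.findings, start=1):
            lines.append(f"- {f.sub_question}: {f.snippet} [{i}]")
        lines.append("Sources:")
        for i, f in enumerate(self.findings, start=1):
            lines.append(f"[{i}] {f.source_url}")
        return "\n".join(lines)


def deep_research(question: str, decompose: Decompose, search: Search,
                  max_rounds: int = 3) -> Report:
    """Split the question into sub-questions, search each one, and requery
    any sub-question that came back empty, for at most max_rounds rounds."""
    report = Report(question)
    pending = decompose(question)
    for _ in range(max_rounds):
        if not pending:
            break
        unanswered = []
        for sub_q in pending:
            hits = search(sub_q)
            if hits:
                snippet, url = hits[0]  # keep the top-ranked hit and its source
                report.findings.append(Finding(sub_q, snippet, url))
            else:
                # Naive placeholder for a real query rewriter.
                unanswered.append(f"{sub_q} (broader)")
        pending = unanswered
    return report
```

The round limit keeps cost and latency bounded. A production system would also stop early once it has enough well-supported findings.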

Perplexity’s Advanced Deep Research tier is essentially a beefed-up version of this pattern, layered on top of its search API. The Sonar Deep Research model runs exhaustive web queries across hundreds of sources and then writes full reports. On fresh topics, this approach appears to cut hallucination rates to around 10 percent or lower in independent benchmarks, versus roughly 25–40 percent for standalone LLMs without web search, and roughly 17–33 percent for current retrieval-augmented tools in domains like law. For Australian teams dealing with fast-moving regulation or market data, that difference is not academic; it is the gap between trusted automation and risky guesswork.

Web search integration is no longer a “nice to have” for AI assistants. Without live retrieval, responses skew stale, miss local nuance, and lack an audit trail. With AI-first search and Deep Research, your assistant can ground every major claim in linked sources, giving both your users and your compliance teams something solid to stand on. If you are also considering secure, onshore assistants for day-to-day tasks, pairing Perplexity with a secure Australian AI assistant can keep sensitive workflows inside local infrastructure.

https://perplexity.ai | https://arxiv.org | https://infoq.com

Under the hood: Perplexity’s AI-first Search API engine

Perplexity Advanced Deep Research is only possible because of the architecture of the underlying Search API. Instead of bolting a model on top of a legacy search index, Perplexity has built a stack explicitly tuned for AI workloads. That starts with hybrid retrieval – blending classical keyword matching with semantic search – to find both exact terms and conceptually related content in the same query.
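Perplexity does not spell out how it blends the two signals, so this sketch uses reciprocal rank fusion (RRF) instead. RRF is one widely used way to merge a keyword ranking with a semantic ranking. The term-overlap scorer and the small hand-made vectors are stand-ins for BM25 and a real embedding model.

```python
import math


def keyword_score(query: str, doc: str) -> int:
    """Count document tokens that also appear in the query (a crude BM25 stand-in)."""
    q_terms = set(query.lower().split())
    return sum(1 for t in doc.lower().split() if t in q_terms)


def cosine(a: list[float], b: list[float]) -> float:
    dot = sum(x * y for x, y in zip(a, b))
    na = math.sqrt(sum(x * x for x in a))
    nb = math.sqrt(sum(y * y for y in b))
    return dot / (na * nb) if na and nb else 0.0


def rrf(rankings: list[list[str]], k: int = 60) -> list[str]:
    """Reciprocal rank fusion: each ranking adds 1 / (k + rank) to every doc it lists."""
    scores: dict[str, float] = {}
    for ranking in rankings:
        for rank, doc_id in enumerate(ranking, start=1):
            scores[doc_id] = scores.get(doc_id, 0.0) + 1.0 / (k + rank)
    return sorted(scores, key=scores.get, reverse=True)


def hybrid_search(query: str, query_vec: list[float],
                  docs: dict[str, str], doc_vecs: dict[str, list[float]]) -> list[str]:
    keyword_rank = sorted(docs, key=lambda d: keyword_score(query, docs[d]), reverse=True)
    semantic_rank = sorted(docs, key=lambda d: cosine(query_vec, doc_vecs[d]), reverse=True)
    return rrf([keyword_rank, semantic_rank])
```

Consider a page about "digital assets" that never uses the word "crypto". It can still rank well through the semantic list, while the keyword list catches exact terms such as a ruling number.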

Retrieval runs through multi-stage ranking pipelines. A fast, broad recall step pulls back many candidates within roughly 100–200 milliseconds. Slower but smarter rerankers then sort those candidates by relevance to the user’s question. This layered approach is a proven way to support massive query volumes while keeping latency inside the tight budget that multi-step Deep Research needs, a pattern echoed in Perplexity’s own architecture notes.
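The recall-then-rerank shape is easy to see in code. In this sketch both scorers are hypothetical plug-ins. `cheap_score` stands in for the fast recall step, and `expensive_score` stands in for a learned reranker such as a cross-encoder. The expensive model only ever sees `recall_k` candidates, never the whole index.

```python
from typing import Callable

Scorer = Callable[[str, str], float]  # (query, document text) -> relevance


def two_stage_rank(query: str, docs: dict[str, str],
                   cheap_score: Scorer, expensive_score: Scorer,
                   recall_k: int = 100, final_k: int = 10) -> list[str]:
    # Stage 1: fast, broad recall over every document in the index.
    candidates = sorted(docs, key=lambda d: cheap_score(query, docs[d]), reverse=True)[:recall_k]
    # Stage 2: slower, smarter reranking over the shortlist only.
    reranked = sorted(candidates, key=lambda d: expensive_score(query, docs[d]), reverse=True)
    return reranked[:final_k]
```

Tuning `recall_k` sets the trade-off. A larger value gives the reranker more chances to find the best document, but it costs proportionally more reranker time per query.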

A distinctive piece of the design is Perplexity’s “content understanding” module. Instead of treating each page as a single blob, the crawler parses content into coherent sections and spans. Those spans become first-class retrieval units. For Deep Research, that means the model can pull a specific paragraph from deep inside a long PDF about an ASIC ruling, without dragging in three pages of unrelated material. It is a quiet but powerful form of context engineering.
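Here is a rough illustration of span-level retrieval. It splits a document into paragraph spans that remember their character offsets, then scores spans rather than whole pages. Perplexity has not published how its content understanding module segments pages. Splitting on blank lines and scoring by query-term density are simplifying assumptions made for this sketch.

```python
import re
from dataclasses import dataclass


@dataclass
class Span:
    doc_id: str
    start: int  # character offset of the span in the original document
    text: str


def split_into_spans(doc_id: str, text: str) -> list[Span]:
    """Treat each run of non-blank lines (a paragraph) as one retrievable span."""
    return [Span(doc_id, m.start(), m.group().strip())
            for m in re.finditer(r"[^\n]+(?:\n(?!\n)[^\n]+)*", text)]


def best_spans(query: str, spans: list[Span], top_n: int = 1) -> list[Span]:
    terms = set(query.lower().split())

    def density(span: Span) -> float:
        # Share of the span's words that are query terms, so a short, on-topic
        # paragraph beats a long one that mentions the terms once.
        words = re.findall(r"\w+", span.text.lower())
        return sum(w in terms for w in words) / (len(words) or 1)

    return sorted(spans, key=density, reverse=True)[:top_n]
```

Keeping the `start` offset means a citation can point back to the exact paragraph inside a long PDF instead of the whole file.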

On the evaluation side, Perplexity has open-sourced a framework called search_evals, tailored to test relevance and latency for AI-first search scenarios. It lets teams benchmark not just basic precision, but also performance under multi-step workloads. For builders in Australia who have to justify vendor choices, that kind of transparent evaluation tooling is an encouraging sign that search quality is being treated as a measurable, improvable system rather than marketing fluff.
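The following is not code from search_evals, and it does not reproduce that project's API. It only shows the two kinds of numbers such a harness typically reports. The first is a relevance metric: mean precision at k over queries with human-judged relevant documents. The second is a tail-latency figure: p95, computed with the nearest-rank method. The `search` callable and the judged query set are hypothetical inputs.

```python
import math
import time
from typing import Callable


def precision_at_k(results: list[str], relevant: set[str], k: int) -> float:
    return sum(1 for r in results[:k] if r in relevant) / k


def percentile(values: list[float], pct: float) -> float:
    """Nearest-rank percentile: the smallest value covering pct% of samples."""
    ordered = sorted(values)
    rank = max(1, math.ceil(pct * len(ordered) / 100))
    return ordered[rank - 1]


def evaluate(search: Callable[[str], list[str]],
             judged: dict[str, set[str]], k: int = 5) -> dict[str, float]:
    precisions, latencies_ms = [], []
    for query, relevant in judged.items():
        t0 = time.perf_counter()
        results = search(query)
        latencies_ms.append((time.perf_counter() - t0) * 1000)
        precisions.append(precision_at_k(results, relevant, k))
    return {
        "mean_precision_at_k": sum(precisions) / len(precisions),
        "p95_latency_ms": percentile(latencies_ms, 95),
    }
```

You can run the same judged set against each candidate vendor. That gives you comparable relevance and latency numbers to put in front of procurement.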

https://github.com/perplexity-ai/search_evals | https://infoq.com/articles/api-latency

What makes Perplexity’s Deep Research “Advanced” in practice

Perplexity’s public docs tend to talk more about the Search API than the product tiers, so “Advanced Deep Research” can sound like a vague label at first glance. Underneath, however, there are some clear technical and user-facing differences that earn the name. The most obvious is depth. Advanced Deep Research runs more iterative search-read-requery cycles than the basic experience, particularly on broad or multi-part prompts.

Instead of scanning a handful of pages, it may fan out across hundreds of sources, cluster them by topic, and then dive into the most promising groups. During this process, span-level indexing becomes important. Because the system can select fine-grained snippets, it can both reduce duplication and cross-check claims across multiple documents. That cross-document reasoning – comparing how different regulators, news outlets, and research papers describe the same issue – is what lets the model reconcile contradictions instead of blindly averaging them.
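Here is a toy version of cross-checking. It assumes claims have already been extracted from spans as (topic, value, source) triples, which is itself the hard part and is not shown. Instead of averaging, it keeps disagreements visible by marking each topic as corroborated, single-source, or conflicting. Those labels and the two-source threshold are illustrative choices, not Perplexity's rules.

```python
from collections import defaultdict


def reconcile(claims: list[tuple[str, str, str]], min_sources: int = 2) -> dict:
    """claims: (topic, value, source_domain) triples extracted from retrieved spans.

    Returns {topic: (best_supported_value, status, sorted_sources)}.
    """
    support: dict[str, dict[str, set[str]]] = defaultdict(lambda: defaultdict(set))
    for topic, value, source in claims:
        support[topic][value].add(source)  # count each domain once per value

    verdicts = {}
    for topic, values in support.items():
        ranked = sorted(values.items(), key=lambda kv: len(kv[1]), reverse=True)
        best_value, best_sources = ranked[0]
        if len(ranked) > 1:
            status = "conflict"        # sources disagree: surface it, don't average
        elif len(best_sources) >= min_sources:
            status = "corroborated"
        else:
            status = "single-source"   # candidate for hedged wording in the report
        verdicts[topic] = (best_value, status, sorted(best_sources))
    return verdicts
```

A report writer can then phrase corroborated topics plainly and hedge single-source ones. For conflicting topics, it can present both values with their sources.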

The output is not just a longer blob of text. Reports from Sonar Deep Research are structured, with sections, bullet points, and inline citations that tie back to specific paragraphs. On complex queries, some independent evaluations report that it outperforms models like Gemini Thinking and o3-mini on both accuracy and depth. It is slower than a quick chat reply, of course, but that is the trade. Perplexity is explicitly optimising for well-cited, expert-level responses rather than instant, shallow summaries, a positioning also reflected in reviews comparing the Perplexity Pro and Max tiers.

From a user’s point of view, “Advanced” translates into three things: more comprehensive coverage for broad topics, more resilient behaviour on ambiguous questions, and a noticeable drop in hallucinated details. When the system cannot find strong support for a claim, it is more likely to hedge, explain uncertainty, or highlight gaps in the public record. That kind of transparent behaviour matters if you are going to put the tool in front of clients or staff who are not AI experts.

https://perplexity.ai | https://openai.com/blog/evaluating-long-form-responses

Cost, latency, and accuracy trade-offs for teams in Australia

Every deep research workflow lives on a triangle of cost, latency, and accuracy. You can push strongly on two corners, but you will always feel pressure on the third. Legacy search APIs usually optimised for cost and latency. They were cheap per query and returned results in under one second, but offered only raw links and short snippets, leaving the heavy lifting – reasoning, synthesis, and hallucination control – to your own stack.

AI-first search and Advanced Deep Research flip that equation. Perplexity’s current pricing for Sonar Deep Research is based on search queries at about 5 US dollars per 1,000 requests, with token charges in the ballpark of 2 dollars per million input tokens, 8 dollars per million output tokens, plus around 2 dollars per million citation tokens and 3 dollars per million reasoning tokens processed. On paper that looks more expensive than traditional APIs at 0.005-0.02 dollars per query. In production, however, the conversation shifts from raw API fees to error costs and human time. If Deep Research cuts hallucinations by up to 70 percent and saves hours of manual cross-checking, many teams see a 5-10x ROI even with higher per-query charges, a pattern echoed in several independent value-for-money reviews.
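A quick way to sanity-check these numbers is to price a single run. The rates below are the ones quoted above and may change, so check Perplexity's current price list. The search count, the token counts, and the US$80 analyst hourly rate are made-up inputs for illustration.

```python
# Rates as quoted in this article, in US dollars; confirm against current pricing.
PRICES_USD = {
    "per_1k_searches": 5.0,
    "per_m_input": 2.0,
    "per_m_output": 8.0,
    "per_m_citation": 2.0,
    "per_m_reasoning": 3.0,
}


def deep_research_cost(searches: int, input_tokens: int, output_tokens: int,
                       citation_tokens: int, reasoning_tokens: int,
                       prices: dict[str, float] = PRICES_USD) -> float:
    return (searches / 1_000 * prices["per_1k_searches"]
            + input_tokens / 1e6 * prices["per_m_input"]
            + output_tokens / 1e6 * prices["per_m_output"]
            + citation_tokens / 1e6 * prices["per_m_citation"]
            + reasoning_tokens / 1e6 * prices["per_m_reasoning"])


def net_saving(api_cost: float, minutes_saved: float, hourly_rate: float) -> float:
    """Value of staff time saved minus the API spend for one report."""
    return minutes_saved / 60 * hourly_rate - api_cost


# Hypothetical run: 30 searches, 2k input, 8k output, 50k citation, 200k reasoning tokens.
cost = deep_research_cost(30, 2_000, 8_000, 50_000, 200_000)
print(f"API cost per report: US${cost:.3f}")  # US$0.918
print(f"Net saving at 30 min saved, US$80/h: US${net_saving(cost, 30, 80):.2f}")  # US$39.08
```

With these made-up counts, reasoning tokens make up most of the bill, not the search fee. It is worth measuring your own token mix before tuning prompts for depth.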

Latency is where expectations need to be reset. A conversational assistant without search might respond “instantly” but can miss recent changes in ATO rulings or ASIC guidance by months or years. Traditional search APIs stay under a second but do not write the report for you. Perplexity positions its Advanced Deep Research reports in the 2-4 minute window for complex tasks. That sounds slow compared with chat, yet for a 10-page market brief or a close read of a 100-page regulation, four minutes is often a bargain.

For Australian users, network geography adds another wrinkle. Latency budgets depend on where the vendor’s points of presence sit relative to major hubs like Sydney and Melbourne. Architectures that use asynchronous fan-out, layered caching, and circuit breakers tend to keep p95 and p99 response times within an acceptable envelope even from AU. When you are evaluating vendors, it is worth explicitly asking for regional latency numbers, not just global averages, so you can model how Deep Research fits into your user experience.
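Of the three techniques mentioned, the circuit breaker is the simplest to show. This minimal, single-threaded sketch is not any vendor's implementation. It stops calling a struggling upstream after a run of consecutive failures, fails fast during a cooldown, and then lets one trial call through. The thresholds are placeholder values, and the injectable clock exists only to make the behaviour testable.

```python
import time
from typing import Any, Callable, Optional


class CircuitBreaker:
    """Fail fast after repeated upstream errors, then retry after a cooldown."""

    def __init__(self, failure_threshold: int = 3, cooldown_s: float = 30.0,
                 clock: Callable[[], float] = time.monotonic):
        self.failure_threshold = failure_threshold
        self.cooldown_s = cooldown_s
        self.clock = clock
        self.failures = 0
        self.opened_at: Optional[float] = None  # None means the circuit is closed

    def call(self, fn: Callable[..., Any], *args: Any, **kwargs: Any) -> Any:
        if self.opened_at is not None and self.clock() - self.opened_at < self.cooldown_s:
            raise RuntimeError("circuit open: skipping upstream call")
        # Closed, or cooldown elapsed ("half-open"): let this call through.
        try:
            result = fn(*args, **kwargs)
        except Exception:
            self.failures += 1
            # A failed trial call re-opens at once; otherwise open at the threshold.
            if self.opened_at is not None or self.failures >= self.failure_threshold:
                self.opened_at = self.clock()
            raise
        self.failures = 0
        self.opened_at = None
        return result
```

While the circuit is open, your UI can fall back to a quick answer or a "report queued" message. That is better than leaving users waiting on a timeout.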

https://perplexity.ai | https://cloud.google.com/blog/products/networking/understanding-latency

Australian use cases and regulated industries for Deep Research

The Australian context is where the strengths of Perplexity Advanced Deep Research become very real. Consider a small Sydney-based business trying to work out whether it qualifies for a federal grant. The relevant rules might be spread across a primary .gov.au landing page, several PDF guidelines, and a handful of media releases. A basic chatbot will usually hallucinate or oversimplify. An AI-first Deep Research workflow can instead prioritise Australian government domains, pull exact paragraphs from the PDFs, and then explain the conditions in plain English with links back to the source pages.
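Prioritising government domains can be as simple as a client-side re-sort. This sketch assumes you already have (url, relevance) pairs from a search call. The `.gov.au` suffix list is an example policy you would tune for your own use case. Matching on the parsed hostname means look-alike hosts do not qualify.

```python
from urllib.parse import urlparse

# Example policy: prefer Australian government hosts. Tune for your use case.
PREFERRED_SUFFIXES = (".gov.au",)


def is_preferred(url: str, suffixes: tuple[str, ...] = PREFERRED_SUFFIXES) -> bool:
    host = (urlparse(url).hostname or "").lower()
    # Match the bare domain or a true subdomain, so "fakegov.au" does not qualify.
    return any(host == s.lstrip(".") or host.endswith(s) for s in suffixes)


def prioritise(results: list[tuple[str, float]]) -> list[tuple[str, float]]:
    """Sort (url, relevance) pairs: preferred domains first, then by relevance."""
    return sorted(results, key=lambda r: (not is_preferred(r[0]), -r[1]))
```

Media coverage stays in the list, so it can still add context, but the answer is anchored in the official pages first.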

In finance, an enterprise knowledge assistant might need to answer client questions using up-to-date material from ASIC, APRA, the RBA, and the ATO, plus each bank’s own product terms. Manual research can take staff half an hour or more per query. With Deep Research, the assistant can run targeted searches over regulator sites and internal documents, reconcile differences, and produce a draft response with citations in a few minutes. Compliance teams can then review the exact spans that support each recommendation, or run a parallel check through a professional Australian AI service partner.

Public sector and healthcare scenarios have similar patterns. A citizen-facing assistant could explain planning rules, licensing requirements, or concession schemes by surfacing specific clauses from long council or state legislation PDFs. A clinical research assistant might summarise emerging literature about a therapy, drawing primarily from Australian institutions but cross-checking against global sources. In each case, span-level retrieval lets the model quote the precise section that matters, not just a generic overview.

Adoption signals from Australian universities suggest that local researchers are already using Perplexity for literature reviews and synthesis tasks, helped by education discounts. While detailed AU market share numbers are still thin, the direction of travel is clear: sectors where policy, law, and science move quickly – and where hallucinations are risky – are leaning toward web-grounded, citation-heavy tools like Advanced Deep Research rather than bare chatbots.

https://perplexity.ai | https://asic.gov.au | https://ato.gov.au

Practical steps to adopt Advanced Deep Research in your stack

If you are considering Perplexity Advanced Deep Research for your organisation, it helps to start with a concrete workflow rather than a vague goal like “make research better”. Pick a use case where the pain is clear – for example, grant discovery for SMEs, competitor analysis for a marketing team, or regulatory Q&A for a bank. Map the current process: who gathers sources, how long synthesis takes, and where errors slip through. That baseline will later make ROI much easier to quantify.

On the technical side, integration follows a simple REST pattern. You send a POST request to the Perplexity chat completions endpoint, set the model field to sonar-deep-research, and include the user’s prompt in the messages array. For higher-volume workloads, you will want to add retries around rate limits and consider batching where queries share background context. Deep Research works within a total context window of roughly 128k tokens, which covers input, history, search results, and output. Within that limit, you can attach local documents (such as internal policies or client contracts) as files or long text input alongside web search, or feed in outputs from your own custom Australian AI automations.
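Here is a minimal Python sketch of that request, using only the standard library. The endpoint URL and the `sonar-deep-research` model name follow Perplexity's chat completions pattern as documented at the time of writing, but confirm both in the API docs. The `PERPLEXITY_API_KEY` environment variable name, the optional system message, and the retry schedule are our own choices. Because a Deep Research call can run for minutes, the timeout is set generously.

```python
import json
import os
import time
import urllib.error
import urllib.request
from typing import Optional

API_URL = "https://api.perplexity.ai/chat/completions"


def build_request_body(prompt: str, system: Optional[str] = None,
                       model: str = "sonar-deep-research") -> dict:
    """Chat-completions style body: a model name plus a messages array."""
    messages = []
    if system:
        messages.append({"role": "system", "content": system})
    messages.append({"role": "user", "content": prompt})
    return {"model": model, "messages": messages}


def backoff_delays(max_retries: int = 4, base_s: float = 2.0, cap_s: float = 60.0) -> list[float]:
    """Exponential backoff schedule in seconds: 2, 4, 8, 16, ... capped at cap_s."""
    return [min(cap_s, base_s * 2 ** i) for i in range(max_retries)]


def deep_research_request(prompt: str, api_key: Optional[str] = None,
                          timeout_s: float = 600.0, **body_kwargs) -> dict:
    # PERPLEXITY_API_KEY is our own naming choice, not a required variable.
    api_key = api_key or os.environ["PERPLEXITY_API_KEY"]
    payload = json.dumps(build_request_body(prompt, **body_kwargs)).encode("utf-8")
    headers = {"Authorization": f"Bearer {api_key}", "Content-Type": "application/json"}
    # One initial attempt plus one retry per backoff delay.
    for delay in [*backoff_delays(), None]:
        req = urllib.request.Request(API_URL, data=payload, headers=headers, method="POST")
        try:
            with urllib.request.urlopen(req, timeout=timeout_s) as resp:
                return json.loads(resp.read().decode("utf-8"))
        except urllib.error.HTTPError as err:
            if err.code != 429 or delay is None:
                raise  # not a rate limit, or retries exhausted
            time.sleep(delay)  # rate limited: back off, then retry
    raise RuntimeError("unreachable")
```

In practice you would call `deep_research_request` from a background job rather than inside a web request handler. Check the API reference for the exact response fields that hold the report text and its citations.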

Plan tiers matter too. In 2026, Perplexity’s Pro plan is tuned for small teams, with collaboration for up to 5 users and around 20 Deep Research queries per day, while Max offers much higher throughput with unlimited research queries, unlimited Labs for workflows like dashboards and recurring reports, and priority access to new features. For many Australian startups, Pro is a sensible starting point. As usage grows, shifting heavy or repetitive workloads into scheduled Deep Research runs, triggered by your own backend, can help smooth out costs and keep staff workflows predictable. If you need help deciding where Perplexity fits alongside models like GPT‑5.2 or Gemini, resources such as our guides on GPT‑5.2 vs Gemini 3 Pro and GPT‑5.2 Instant vs Thinking costs can be a useful cross-check.

Finally, design the user experience around the depth-speed trade-off. Use fast, lightweight chat with minimal search for trivial questions or navigation. Reserve Advanced Deep Research for tasks where a two-to-four-minute, expert-level report is genuinely valuable. Make that distinction visible in your UI – separate buttons, progress indicators, and clear expectations – so users understand what they are getting and why it takes longer. When you get this right, Deep Research feels less like “waiting for a slow chatbot” and more like having a focused analyst working in the background. Teams that do this well often combine Perplexity with a broader AI services layer that handles routing, governance, and monitoring.
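Here is a sketch of the routing decision behind those separate buttons. The keyword cues and the 40-word cut-off are hypothetical heuristics you would replace with your own rules or a small classifier. The mode names are labels for your own UI and backend, not Perplexity model identifiers. An explicit user choice always wins.

```python
from dataclasses import dataclass

# Hypothetical cues suggesting a request needs a cited, multi-source report.
RESEARCH_CUES = ("compare", "report", "regulation", "legislation", "market", "brief", "review")


@dataclass
class Route:
    mode: str         # "quick_chat" or "deep_research" (labels for your own backend)
    expectation: str  # text to show in the UI before the answer arrives


def route(prompt: str, user_requested_deep: bool = False) -> Route:
    text = prompt.lower()
    looks_heavy = len(text.split()) > 40 or any(cue in text for cue in RESEARCH_CUES)
    if user_requested_deep or looks_heavy:
        return Route("deep_research", "Building a cited report, usually 2 to 4 minutes")
    return Route("quick_chat", "Quick answer, a few seconds")
```

Returning the expectation text alongside the mode keeps the progress message in the UI consistent with the path the request actually takes.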

https://perplexity.ai/docs | https://developer.mozilla.org/en-US/docs/Web/HTTP/Methods/POST

Conclusion and next steps

Perplexity’s Advanced Deep Research marks a real shift in how AI systems handle the web. Rather than gluing a model onto a human-centric search engine, it uses an AI-first stack – hybrid retrieval, span-level context, and multi-stage ranking – to feed a reasoning engine that is explicitly optimised for long-form, well-cited answers. For Australian teams facing complex regulation, fast-moving markets, and limited research capacity, that combination can turn messy information flows into something structured and dependable.

Moving from curiosity to production, though, means thinking carefully about cost, latency, and accuracy, and matching Deep Research workflows to the right problems. If you are exploring how to bring tools like Perplexity into your own products or internal systems, now is the time to prototype on a focused use case and measure the impact. LYFE AI works with AU organisations to design, test, and deploy AI-first search and research workflows; if you would like help evaluating Advanced Deep Research or shaping a pilot, reach out to our team and we can walk through the options together, including professional implementation services and clear commercial terms.

https://perplexity.ai | https://lyfe.ai

Frequently Asked Questions

What is Perplexity Advanced Deep Research and how is it different from normal Perplexity search?

Perplexity Advanced Deep Research is a premium mode that uses Perplexity’s AI‑first Search API to generate longer, citation‑rich research reports instead of short, single‑shot answers. Unlike normal search, it runs multi‑step queries, selects only the most relevant spans of web pages, and reasons over them to reduce hallucinations and keep information current.

How does AI-first search work in Perplexity Advanced Deep Research?

AI‑first search is a search stack designed for language models rather than humans skimming links. Perplexity crawls and indexes the web, ranks results, then passes tightly scoped text chunks (spans) into the model so it can reason over high‑quality context instead of the entire page, which improves accuracy and reduces noise.

How accurate is Perplexity Advanced Deep Research compared to ChatGPT or normal chatbots?

Advanced Deep Research is typically more accurate on web‑based questions because it actively searches the live web and cites its sources, instead of relying mostly on static training data. Its span‑level context selection and multi‑step reasoning are specifically built to reduce hallucinations, though users should still click through citations for critical decisions.

When should I use Perplexity Advanced Deep Research instead of Google Search?

Use Advanced Deep Research when you need a synthesized, referenced overview rather than a list of links to manually read. It works best for market scans, policy or regulation summaries, technical overviews, and competitive research where you want a structured report with citations that you can quickly validate, instead of doing all the reading yourself in Google.

Can Australian businesses safely rely on Perplexity Advanced Deep Research for professional work?

Australian teams can use Advanced Deep Research for faster first‑pass research, trend analysis, and briefing notes, provided they review the cited sources before publication. LYFE AI generally recommends treating it as a high‑quality research assistant that accelerates work, not as an unquestioned source of legal, financial, or clinical advice.

How can I integrate Perplexity Deep Research into my existing AI workflows?

You can treat Advanced Deep Research as the research layer that feeds downstream AI tools or agents. For example, teams working with LYFE AI often use Perplexity to generate a cited research pack, then pass that content into other models (or internal tools) for drafting reports, slide decks, or product documentation while preserving the source links for auditability.

What are the main trade-offs of using Perplexity Advanced Deep Research (cost, speed, and depth)?

Advanced Deep Research is slower and more resource‑intensive than a simple chat query because it runs multiple searches and reasoning steps, but the output is deeper and better sourced. You trade a bit of latency and per‑query cost for longer, more reliable reports that can replace hours of manual reading in many use cases.

How does Perplexity Advanced Deep Research reduce hallucinations in its answers?

It reduces hallucinations by grounding responses in specific, retrieved web spans and forcing the model to reason primarily over that context. The system surfaces inline citations for key claims, and if no good sources are found, it is more likely to say it cannot answer confidently rather than invent information.

What are some practical use cases of Perplexity Advanced Deep Research for Australian teams?

Common use cases include scanning Australian legislation and regulatory updates, mapping local competitors, summarising sector‑specific news, and building research packs for internal strategy or client proposals. LYFE AI often helps teams design repeatable Deep Research workflows for tasks like weekly market intelligence or pre‑sales discovery.

How can LYFE AI help my organisation get started with Perplexity Advanced Deep Research?

LYFE AI works with Australian organisations to choose the right AI‑first search tools, design research workflows, and train staff to use Deep Research safely and effectively. They can also help you integrate Perplexity into existing systems, set governance rules around citation checking, and measure time‑savings versus your current manual research process.