Introduction: why Claude Opus 4.6 forces a new conversation

Table of Contents

- Introduction: why Claude Opus 4.6 forces a new conversation

- Claude evolution timeline and the role of Opus 4.6

- Benchmarks and MRCR v2: reading the signal behind the score

- Pricing, 1M-token context and the economic positioning of Opus 4.6

- Claude Opus 4.6 in the frontier model landscape

- Enterprise adoption, revenue traction and what it implies for risk

- Limitations, evidence gaps and pacing your investment

- Practical next steps for Australian executives considering Opus 4.6

- Conclusion: deciding if Claude Opus 4.6 is your next work engine

Claude Opus 4.6 is not just another model release; it is the first Claude model positioned explicitly as a “work” system built to sit inside real enterprises. It targets coding, agents and complex workflows rather than casual chat, and early data suggests it leads rival frontier systems on several commercially important benchmarks.

For boards and executives, the question is no longer “should we test generative AI?” but “which model underpins our next five years of automation and analysis?” Opus 4.6 raises the stakes of that decision: it brings a 1M-token context window (currently in beta and initially available via the API), major jumps in multi-step reasoning, and strong signs of traction with global enterprises, as documented in Anthropic’s launch materials. This article (Part 1 of 3) looks at Claude Opus 4.6 from an executive angle – how it changes the economics of AI-powered work, what the early benchmarks really say, and how to read the market signals before you commit budget and reputation to any single platform.

Claude evolution timeline and the role of Opus 4.6

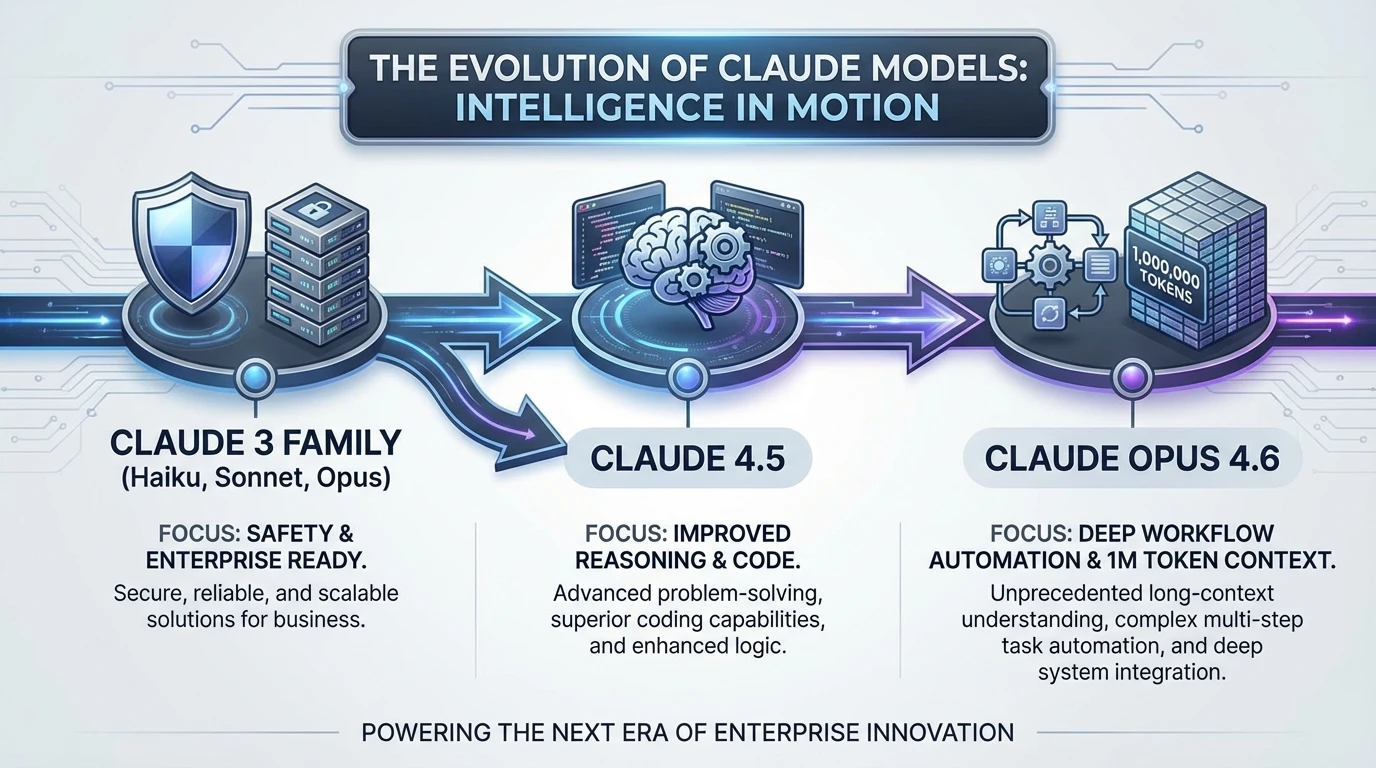

To understand Claude Opus 4.6, it helps to see it as part of a fast-moving line rather than an isolated upgrade. Early 2024 through mid-2025 was dominated by the Claude 3 family – Haiku, Sonnet and Opus – which built Anthropic’s reputation for safety, calm behaviour and enterprise focus. These models were widely tested in support, summarisation and light coding tasks across global organisations, as reflected in Anthropic’s model history.

In late 2025, the 4.5 generation arrived. Claude Opus 4.5, along with Sonnet 4.5 and Haiku 4.5, delivered a noticeable jump in reasoning quality and code handling. Many teams in software, legal and finance shifted their day-to-day work to these models, but they still had clear limits on very long documents, deeply nested research tasks and sustained planning across many steps.

February 2026 marked the launch of Claude Opus 4.6. This is the first Opus-class model with a 1M-token context window (in beta), allowing it to keep track of hundreds of pages of material or very large codebases in a single session. It also ships with new agent-like behaviour: stronger planning, the ability to stay on multi-stage tasks, and better debugging of its own work over long horizons. It is Anthropic’s most advanced model, positioned against the latest frontier systems from other providers not as another chat assistant but as a central work engine for serious organisations, in what some analysts call a new “vibe working” era of AI.

https://www.anthropic.com/news/claude-opus-4-6

Benchmarks and MRCR v2: reading the signal behind the score

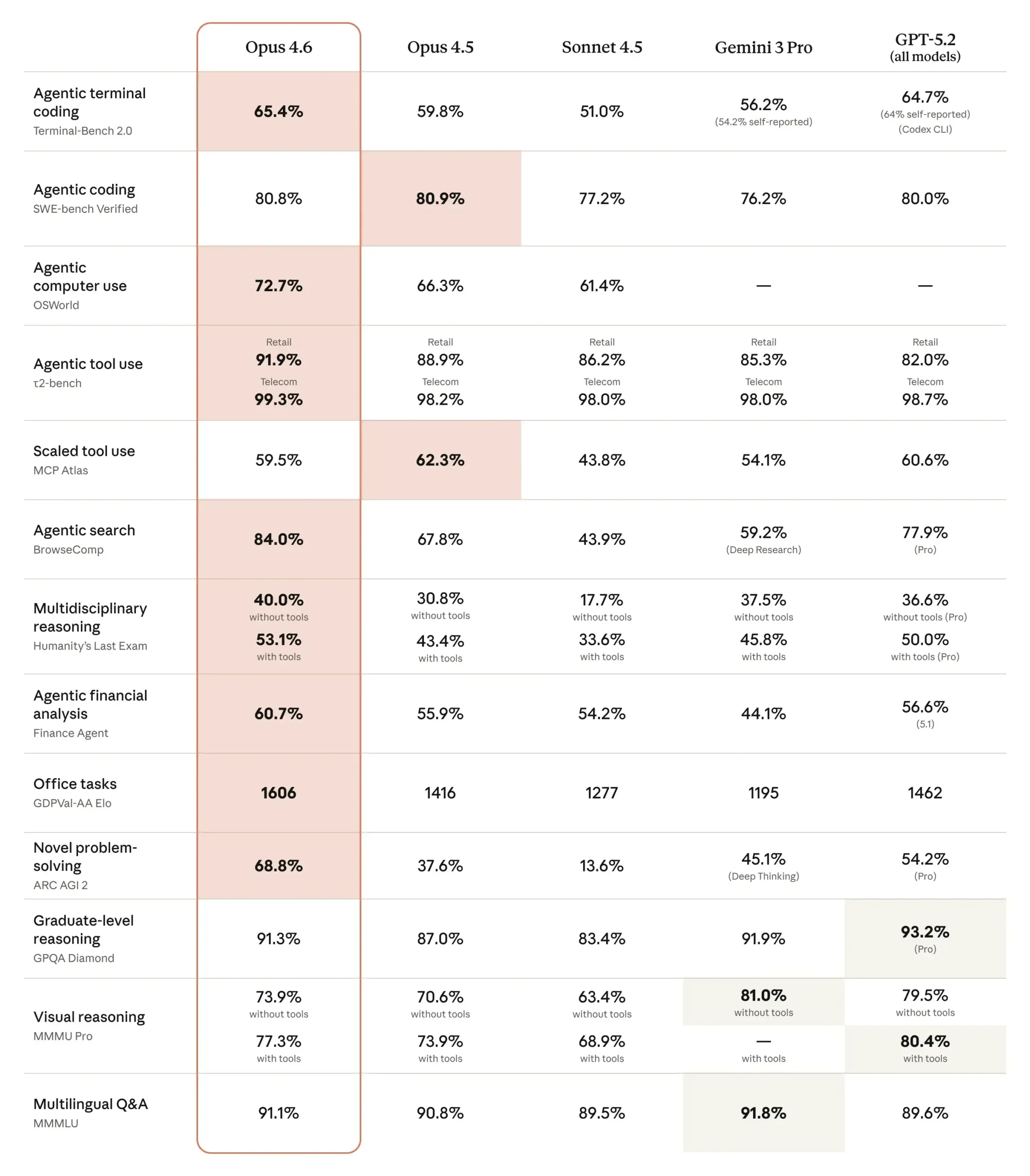

A major claim around Claude Opus 4.6 is its lift in complex reasoning, particularly on tasks that combine long documents, retrieval and multi-step logic. Reported results highlight the MRCR v2 benchmark, where performance rises from 18.5 percent with Sonnet 4.5 to 76 percent with Opus 4.6. On the surface, that is a huge jump; underneath, it points to something more important for executives: reliability on messy, multi-source work that looks like real jobs, not toy examples, according to Anthropic’s published benchmark summaries.

MRCR v2 (multi-round co-reference resolution) is a long-context retrieval test: the system must locate specific pieces of information buried at different points in a very large body of text and return the right ones. That is close to what your teams do when they review regulatory guidance, internal policies and market data at the same time. Moving from under one-fifth correct to roughly three quarters suggests the model can now hold and work with far richer context without losing the thread.

Early user testing reinforces this pattern. On multi-source legal and financial analysis tasks, evaluators report around a 10 percentage point gain, from 58 percent to 68 percent accuracy. People also describe Opus 4.6 as “thinking longer” and being more dependable on unfamiliar codebases and multi-step debugging. For a CIO or CFO, this matters less as a bragging right and more as an input into risk calculations: if the tool is significantly more dependable on complex workloads, you can assign it higher-value tasks with less human rework, a theme echoed in enterprise-focused commentary.

It is also worth noting that internal and third-party tests now place Opus 4.6 ahead of OpenAI’s GPT-5.2 on several economically important workloads. This does not mean it dominates everywhere, but it indicates a turning point: for some categories of work, especially structured reasoning and analysis, Anthropic’s model is arguably in front, at least for now, and financial markets are taking notice of its impact on software firms.

https://www.anthropic.com/news/claude-opus-4-6-benchmarks

Pricing, 1M-token context and the economic positioning of Opus 4.6

Pricing is where Claude Opus 4.6 signals its intent as a serious enterprise tool rather than a low-cost commodity. Globally, the list price is reported at around USD $5 per million input tokens and $25 per million output tokens, with premium rates once you move into very large context usage above roughly 200,000 tokens. Interestingly, these figures are consistent with the prior generation rather than a sudden price hike, even though the capabilities have moved forward.
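For finance teams who want to sanity-check these rates, the arithmetic can be sketched in a few lines of Python. This is a minimal illustration, not Anthropic’s billing logic: the `Rates` class, the `request_cost` function and the premium-tier figures are all hypothetical, and the sketch assumes premium rates apply to the whole request once the input passes roughly 200,000 tokens. Check the current pricing page before relying on any number here.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Rates:
    """Prices in USD per one million tokens."""
    input_per_m: float
    output_per_m: float


# List prices as reported in the article.
STANDARD = Rates(input_per_m=5.0, output_per_m=25.0)

# Placeholder only: the article gives no premium figures, so this simply
# doubles the standard rates. Replace with the published long-context prices.
PREMIUM_PLACEHOLDER = Rates(input_per_m=10.0, output_per_m=50.0)

# Approximate point at which premium pricing starts, per the article.
LONG_CONTEXT_THRESHOLD = 200_000


def request_cost(input_tokens: int,
                 output_tokens: int,
                 standard: Rates = STANDARD,
                 premium: Rates = PREMIUM_PLACEHOLDER,
                 threshold: int = LONG_CONTEXT_THRESHOLD) -> float:
    """Estimate the USD cost of a single request.

    Assumption: when the input exceeds the threshold, the premium rates
    apply to every token in the request, not just the excess.
    """
    rates = premium if input_tokens > threshold else standard
    total = input_tokens * rates.input_per_m + output_tokens * rates.output_per_m
    return total / 1_000_000


if __name__ == "__main__":
    print(f"Standard request:     USD {request_cost(100_000, 10_000):.2f}")
    print(f"Long-context request: USD {request_cost(500_000, 10_000):.2f}")
```

At the list prices quoted above, a request with 100,000 input tokens and 10,000 output tokens comes to about USD $0.75. The long-context figure depends entirely on the premium rates you plug in.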

For boards and finance leaders, the point is not the headline rate; it is how that cost interacts with the new 1M-token context and improved reasoning. A larger window means you can load in many documents, entire transaction histories or a substantial codebase in a single go, instead of breaking them into fragments and orchestrating many separate calls. That can cut orchestration complexity and reduce the number of human hours devoted to prompt engineering and stitching outputs together.

There is, however, a trade-off. Long-context usage is more expensive, and it is easy for enthusiastic teams to overspend by throwing entire repositories at the model when a smaller slice would do. The economic case for Opus 4.6 therefore rests on whether its higher reliability on complex tasks offsets the token costs through reduced human labour, fewer errors and faster project delivery. Early enterprise revenue signals from Anthropic’s ecosystem, along with heavily publicised but disputed reports about how quickly Claude Code’s revenue run-rate grew in its first six months, suggest that many organisations believe the payoff is substantial enough to justify ongoing spend, even if the precise figures remain controversial.

https://www.anthropic.com/pricing

Claude Opus 4.6 in the frontier model landscape

Any C-suite team weighing Opus 4.6 needs to place it against other frontier systems from major vendors. According to Anthropic’s own and third-party results, Opus 4.6 now edges ahead of OpenAI’s GPT‑5.2 and Google’s Gemini 3 Pro on several workloads that matter to enterprises, such as coding, structured reasoning and certain analysis tasks. The Gemini 3 line, by contrast, emphasises multimodal and consumer use cases alongside productivity scenarios.

Where Opus 4.6 appears strongest is in treating AI as a dependable collaborator for work that is text and code heavy, especially when that work spans long documents or complicated logic chains. The combination of 1M-token context, improved long-context retrieval and reinforced planning means it can operate almost like an analyst or senior engineer who can see the whole file cabinet at once. This is different from models optimised primarily for image, audio or broad consumer interactions, even if they perform well at text, and it aligns with how marketing and business teams are beginning to deploy it.

At the same time, gaps remain. Public information still offers limited head-to-head latency comparisons, and there are areas such as multimodal creativity where rivals may still lead. Independent third-party evaluations are emerging but not yet exhaustive, so boards should treat vendor benchmarks as directional rather than definitive. The practical takeaway is that Opus 4.6 deserves a place on any serious shortlist for coding, analysis and knowledge work, while the final decision should factor in existing vendor relationships, integration costs and security requirements unique to your organisation.

https://www.anthropic.com/news/claude-opus-4-6-comparison

Enterprise adoption, revenue traction and what it implies for risk

One of the most telling signals around Claude Opus 4.6 is how quickly large organisations are adopting the broader Claude ecosystem. Enterprises such as Uber, Salesforce and Accenture are reported users of Opus 4.6 and associated tools like Claude Code. Within six months, Claude Code alone is said to have reached a $1B annual run-rate, an extraordinary figure for a relatively new product line and a strong sign that enterprises are moving beyond experimentation, even amid wider AI industry turbulence.

For Australian executives, this kind of traction matters for two reasons. First, it indicates that global peers are already building real workflows and, in some cases, competitive advantages on top of Anthropic’s stack. Second, it reduces certain categories of risk: a tool that has been hardened in demanding environments is less likely to surprise you with basic reliability problems, though it may still carry strategic risks around vendor concentration and data dependency.

Anthropic also emphasises strong safety alignment and respect for enterprise constraints, including the ability to support cybersecurity assessments and vulnerability patching. While the details of those security capabilities sit outside the scope of this article, the headline for boards is that Opus 4.6 is being shaped in dialogue with large corporate clients rather than solely for individual developers. That alignment shows up not only in features but also in support models, compliance conversations and long-term roadmap commitments.

https://www.anthropic.com/news/claude-code-enterprise

Limitations, evidence gaps and pacing your investment

Despite the impressive benchmark numbers and early enterprise adoption, Claude Opus 4.6 is not a magic solution. Even on tests like MRCR v2, a 76 percent score still means almost one in four complex reasoning tasks are handled incorrectly. In legal, financial or safety-critical contexts, that error rate demands human oversight and clear guardrails around how decisions are made.
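To see why that error rate matters more as workflows get longer, consider a rough sketch of how per-task accuracy compounds across a chain of steps. The function name `workflow_success_rate` is hypothetical, and the calculation rests on two strong assumptions: every step succeeds independently, and the benchmark score transfers to your own tasks. Neither is likely to hold exactly, so treat the output as an illustration of the trend rather than a forecast.

```python
def workflow_success_rate(per_task_accuracy: float, steps: int) -> float:
    """Chance that every step in a chain is correct.

    Assumes each step succeeds independently with the same probability,
    which real workflows rarely satisfy exactly.
    """
    if not 0.0 <= per_task_accuracy <= 1.0:
        raise ValueError("per_task_accuracy must be between 0 and 1")
    if steps < 1:
        raise ValueError("steps must be at least 1")
    return per_task_accuracy ** steps


if __name__ == "__main__":
    # 0.76 is the MRCR v2 score quoted in the article, used here
    # purely as an illustrative per-step accuracy.
    for steps in (1, 3, 5):
        rate = workflow_success_rate(0.76, steps)
        print(f"{steps} step(s): {rate:.1%} chance of an error-free run")
```

Under these assumptions, a three-step chain finishes without error roughly 44 percent of the time and a five-step chain roughly 25 percent of the time, which is the practical argument for human checkpoints between stages.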

Evidence gaps also matter. Public data on latency, long-run stability and cost of large-scale deployments is still thin, and many of the most glowing case studies come from vendors or close partners. Independent testing of safety properties, adversarial robustness and bias is ongoing but incomplete, and there is no universal benchmark that cleanly captures the full cost of ownership over several years.

For boards and executives, the implication is to treat Opus 4.6 as a powerful new capability that should be trialled seriously, yet implemented in stages. Start with high-value but recoverable workflows such as internal analysis, coding assistance or research synthesis, where errors can be detected and corrected. Use those pilots to gather your own numbers on accuracy, speed, staff time saved and incident rates. Those measurements will allow you to decide whether to expand usage, negotiate pricing from a position of knowledge and, if necessary, diversify across more than one model provider.
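The pilot measurements described above can be captured in a very simple log. The sketch below shows one possible shape for it; the `PilotTask` record, its field names and the `summarise` function are hypothetical, and the definitions (for example, what counts as an “incident”) are assumptions your own governance team would need to settle.

```python
from dataclasses import dataclass
from statistics import median


@dataclass
class PilotTask:
    correct: bool             # reviewer judged the output acceptable
    minutes_with_ai: float    # time taken with the model in the loop
    minutes_baseline: float   # estimated time for the same task without it
    incident: bool            # an error escaped review and needed remediation


def summarise(tasks: list[PilotTask]) -> dict[str, float]:
    """Roll a pilot log up into the four headline numbers."""
    if not tasks:
        raise ValueError("at least one task is required")
    n = len(tasks)
    return {
        "accuracy": sum(t.correct for t in tasks) / n,
        "median_minutes_with_ai": median(t.minutes_with_ai for t in tasks),
        "hours_saved": sum(t.minutes_baseline - t.minutes_with_ai for t in tasks) / 60,
        "incident_rate": sum(t.incident for t in tasks) / n,
    }


if __name__ == "__main__":
    # Illustrative entries only, not data from a real pilot.
    log = [
        PilotTask(True, 30, 90, False),
        PilotTask(False, 45, 60, True),
        PilotTask(True, 20, 80, False),
        PilotTask(True, 25, 70, False),
    ]
    for metric, value in summarise(log).items():
        print(f"{metric}: {value:.2f}")
```

Even a log this small gives a board something concrete to review each quarter, and it keeps the expansion decision tied to your own data rather than vendor case studies.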

https://www.anthropic.com/safety

Practical next steps for Australian executives considering Opus 4.6

If you are leading a mid-sized to large organisation in Australia, the decision around Claude Opus 4.6 is less about curiosity and more about sequencing. A practical way forward is to treat the next six to twelve months as a structured evaluation period, not a casual trial. Start by mapping your highest-cost, knowledge-heavy workflows (software delivery, financial modelling, regulatory analysis or internal research), then align them with the specific AI services your organisation can safely consume.

From there, identify two to three contained use cases where long-context reasoning and improved multi-source analysis can be measured. For example, assess how Opus 4.6 handles a large codebase refactor, or a regulatory review involving many overlapping documents, compared with your current tools. Track not only direct token spend but also engineer or analyst hours saved, error rates and time to decision, ideally supported by a specialist AI implementation partner.
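One way to put token spend and hours saved on the same page is a simple net-value figure per use case. The sketch below is a hedged illustration: the `UseCaseResult` record, the `net_value` function and every number in the example are hypothetical, and the formula deliberately ignores integration, licensing and change-management costs.

```python
from dataclasses import dataclass


@dataclass
class UseCaseResult:
    name: str
    hours_saved: float    # analyst or engineer hours saved versus current tools
    rework_hours: float   # hours spent checking and correcting model output
    token_spend: float    # direct model spend, same currency as hourly_cost
    hourly_cost: float    # loaded hourly cost of the staff involved


def net_value(result: UseCaseResult) -> float:
    """Labour value recovered, net of rework, minus direct token spend."""
    labour_value = (result.hours_saved - result.rework_hours) * result.hourly_cost
    return labour_value - result.token_spend


if __name__ == "__main__":
    # Illustrative figures only, not measurements from any real pilot.
    pilots = [
        UseCaseResult("codebase refactor", 40.0, 5.0, 900.0, 120.0),
        UseCaseResult("regulatory review", 12.0, 10.0, 600.0, 150.0),
    ]
    for pilot in pilots:
        print(f"{pilot.name}: {net_value(pilot):,.2f}")
```

In this made-up example the refactor nets a positive 3,300 while the regulatory review ends up 300 in the red because review effort consumes most of the time saved. That is exactly the kind of contrast a contained pilot is meant to surface.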

Finally, feed these findings into a broader AI investment strategy. Consider whether Opus 4.6 becomes your primary engine for work-like tasks while other models cover specialist roles such as multimodal creativity. Build a governance structure that can adapt as more independent benchmarks emerge and as Anthropic continues to evolve the Claude line. Above all, keep the discussion at board level: this is now a structural technology choice, not just an IT tooling question, and one that can be supported by a secure Australian AI assistant designed for everyday and enterprise tasks.

https://www.anthropic.com/news

Conclusion: deciding if Claude Opus 4.6 is your next work engine

Claude Opus 4.6 arrives at a moment when enterprises are moving from pilots to genuine workflow change. Its advances in long-context handling, complex reasoning and enterprise adoption make it a serious contender to anchor AI-powered work for the next phase of automation. Yet the decision to commit should be evidence-based, paced and aligned with your broader technology and risk strategy, including clear thinking about model selection and routing economics.

Over this three-part series, LYFE AI is unpacking Opus 4.6 from strategy to hands-on transformation. If you want support building a structured evaluation program, from benchmark design through to board-ready business cases, now is the time to act. Reach out to our team to design an Opus 4.6 assessment tailored to your organisation, and use Parts 2 and 3 of this series as a companion as you move from high-level strategy to detailed workflow redesign. Options range from custom automation and model tuning to fine-grained model comparison work across vendors.

For further background on our approach, explore how LYFE AI delivers secure, innovative AI solutions, review our terms and conditions, and consult our full library of AI strategy and implementation articles.

Frequently Asked Questions

What is Claude Opus 4.6 and how is it different from previous Claude models?

Claude Opus 4.6 is Anthropic’s enterprise-focused “work” model designed specifically for coding, agents, and complex workflows rather than casual chat. It introduces a 1M-token context window (in beta via the API), stronger multi-step reasoning, and improved performance on commercially relevant benchmarks, making it better suited for deep workflow automation inside large organisations.

Why should enterprise leaders care about Claude Opus 4.6 for AI strategy?

Opus 4.6 turns generative AI from an experimental tool into a potential foundational platform for the next 3–5 years of automation and analysis. For boards and executives, it directly impacts AI economics (cost per unit of work), model selection, vendor risk, and how far you can push end‑to‑end workflow transformation across functions like engineering, operations, and knowledge work.

How does Claude Opus 4.6 change the economics of AI-powered work?

By combining a very large context window with stronger multi-step reasoning, Opus 4.6 can handle bigger chunks of work in a single pass, such as whole codebases or long process documents. This can reduce orchestration overhead, lower the number of calls or tools needed per workflow, and ultimately improve total cost of ownership when deployed correctly within enterprise workflows.

How does Claude Opus 4.6 compare to other frontier AI models for enterprise use?

Early benchmarks suggest Opus 4.6 is at or near the top of several commercially important tests, such as coding, reasoning, and complex task completion, versus rival frontier models. However, the article stresses that vendor benchmarks are directional rather than definitive and that the final choice should also weigh existing vendor relationships, integration costs and security requirements, so enterprises should test it against their own workloads.

Is Claude Opus 4.6 good enough to be a company’s primary AI work model?

The article argues that Opus 4.6 is the first Claude release explicitly built to sit inside real enterprises as a core work system, rather than a generic chat assistant. Whether it should be your primary model depends on trials against your specific workflows, but its 1M-token context and reasoning gains make it a serious candidate to underpin multi-year automation initiatives.

What are the main limitations or risks of adopting Claude Opus 4.6 in production?

The article notes that a 76 percent benchmark score still leaves almost one in four tasks wrong, and that public evidence on latency, long-run stability and large-scale deployment cost is still thin. Key risks include over-relying on lab scores, underestimating integration and change-management costs, and not stress-testing the model for reliability, security, and governance at scale before committing to a single-vendor strategy.

How should executives evaluate Claude Opus 4.6 beyond benchmark scores?

Leaders should run targeted pilots using their own data and workflows, tracking metrics like task completion quality, time saved, error rates, and user adoption. They should also assess security, privacy, integration complexity, and vendor support, building these findings into a broader AI platform strategy rather than deciding purely on benchmark leaderboards.

What role does LYFE AI play in helping companies implement Claude Opus 4.6?

LYFE AI focuses on turning models like Claude Opus 4.6 into real workflow transformation, not just proofs of concept. They help executives define AI strategy, design and implement Opus‑powered workflows, integrate with existing systems, and measure the business impact so organisations can adopt the model safely and at scale.

Can LYFE AI help us decide between Claude Opus 4.6 and other LLMs?

Yes, LYFE AI can run structured evaluations comparing Opus 4.6 to other leading models on your specific use cases, such as coding, document-heavy analysis, or multi-step workflows. They then translate those results into practical recommendations on model choice, architecture, and rollout strategy aligned with your risk appetite and budget.

How do I get started using Claude Opus 4.6 in my organisation with LYFE AI?

Typically you start with an executive workshop or discovery engagement where LYFE AI maps your current workflows, identifies high-value automation opportunities, and selects a few Claude Opus 4.6 pilot use cases. From there, they help you set up access (often via API), build and test initial workflows, and create a roadmap for phased scaling across departments.