Introduction: Codex 5.3 and Everyday Organisational Issues

Table of Contents

- Introduction: Codex 5.3 and Everyday Organisational Issues

- What Codex 5.3 Is and Why Organisations Should Care

- Faster, Safer Software Delivery With Codex 5.3

- Cutting Operational Toil and DevOps Overhead

- Strengthening Security, Compliance and Risk Management

- Turning Data, Docs and Processes Into Working Systems

- Hybrid AI Workflows That Fit Australian Teams

- Practical Ways To Start Using Codex 5.3 Today

- Conclusion and Next Steps With Codex 5.3

Most Australian organisations are feeling the same squeeze: short on engineers, long on backlogs. Releases slip. Manual tasks pile up. Security tickets lurk in Jira for months. That is exactly the world Codex 5.3 is built for, especially when paired with a secure Australian AI assistant for everyday tasks that respects local data and compliance expectations.

Codex 5.3 is OpenAI’s latest agent-style coding and workflow model, built on the new GPT-5.3-Codex architecture. It is designed to help organisations solve everyday issues, not just write clever code snippets. Think less “code autocomplete” and more “hands-on digital teammate” that can read tasks, run tools, and report back as it works.

In this guide, we will break down what Codex 5.3 actually is, how it improves software delivery, reduces operations drag, supports security and compliance, and even helps non-technical teams turn messy ideas into working systems. We will focus on real-world patterns for Australian businesses, from fintechs in Sydney to councils in regional NSW, so you can see where it fits in your stack and where specialist implementation services might make sense.

For a deeper sense of how Codex fits into OpenAI’s broader roadmap, see OpenAI’s own introduction to GPT‑5.3‑Codex, which positions the model as a general-purpose coding and agent system. Independent coverage from ZDNet looks at its 25% speed gains and expanded scope, while analyses by Ars Technica and the OpenAI developer community’s overview of GPT‑5.3‑Codex reinforce its shift toward practical, end‑to‑end workflows.

What Codex 5.3 Is and Why Organisations Should Care

Codex 5.3 is an agent-based AI model that combines strong coding skills with broad reasoning. In plain language, it can understand your problem, plan a sequence of steps, use tools like a terminal or IDE, and then carry out those steps while keeping you in the loop. It is tuned for the full software lifecycle: planning features, writing code, debugging, testing, documenting, and basic deployment.

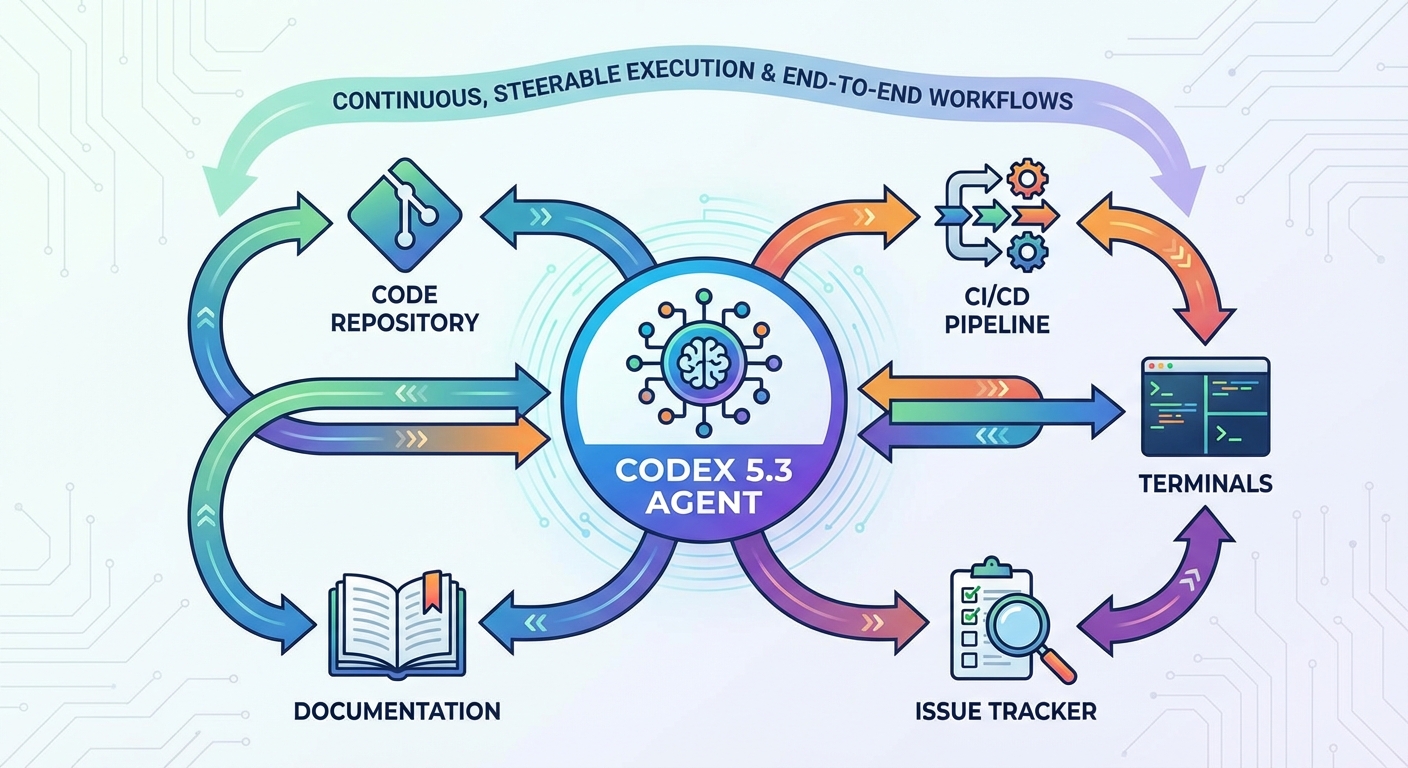

A key difference from older AI tools is its “continuous, steerable execution.” Instead of giving you one long answer and hoping it is right, Codex 5.3 streams its progress: scanning your repo, finding failing tests, proposing patches, running tests again, and summarising results. You can interrupt any time, redirect it, or tighten rules, such as “do not touch production config” or “follow our secure coding standard.”
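The streaming-and-interrupt pattern described above can be sketched in a few lines of Python. This is an illustration only, not Codex's actual interface: the `Step` type, the `run_steerable` generator, and the `reviewer` rule are all hypothetical names, and the "steps" are plain labels rather than real tool calls.

```python
from dataclasses import dataclass
from typing import Callable, Iterator, List


@dataclass
class Step:
    name: str    # e.g. "scan repo", "run tests"
    detail: str  # human-readable description of what the step will do


def run_steerable(steps: List[Step],
                  should_continue: Callable[[Step], bool]) -> Iterator[str]:
    """Yield one progress line per step; stop as soon as the reviewer says no."""
    for step in steps:
        if not should_continue(step):
            yield f"stopped before: {step.name}"
            return
        yield f"running: {step.name} ({step.detail})"
    yield "done"


# Hypothetical plan mirroring the stages described above.
plan = [
    Step("scan repo", "index source files"),
    Step("find failing tests", "collect test results"),
    Step("propose patch", "edit config/production.yaml"),
    Step("re-run tests", "confirm the fix"),
]


def reviewer(step: Step) -> bool:
    # Stand-in for the rule "do not touch production config".
    return "production" not in step.detail


log = list(run_steerable(plan, reviewer))
for line in log:
    print(line)
```

Because progress is yielded step by step, the reviewer sees each action before it happens and can halt the run at the first step that breaks a rule, rather than discovering the problem in a finished answer.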

Under the hood, Codex 5.3 is about 25 percent faster than its predecessor and delivers state-of-the-art scores on coding benchmarks like SWE-Bench Pro and Terminal-Bench 2.0, plus high performance on OSWorld-Verified, which tests how well it uses a computer. That mix of speed and reliability matters when you want it to handle long, multi-file changes and OS-level work without constant babysitting.

Interestingly, Codex helped build Codex. OpenAI has described running early versions of Codex 5.3 on its own infrastructure to help debug training, manage deployment, and diagnose test results. That internal use is encouraging evidence that the same agent pattern can support external organisations in real, messy environments.

Faster, Safer Software Delivery With Codex 5.3

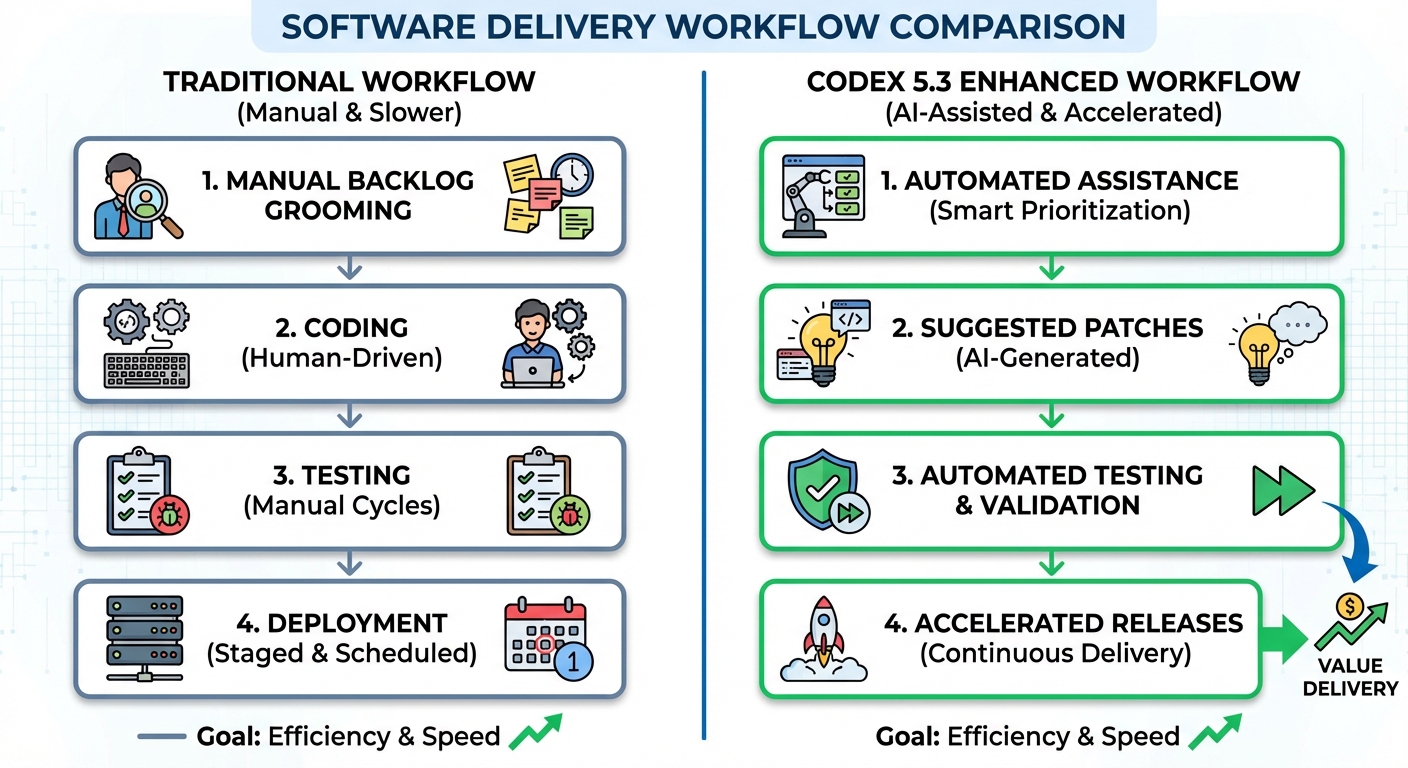

Many Australian teams fight the same battles: feature backlogs, fragile releases, and endless bugfix sprints. Codex 5.3 tackles this by handling multi-step development tasks from end to end. It can turn a product requirement into code, tests, and documentation in one guided workflow.

Picture a product lead at a Melbourne healthtech start-up asking Codex to “implement a read-only dashboard for NDIS claims with these access rules.” Codex can scaffold the feature, create API endpoints, write database queries, and add basic tests. It then runs those tests via the terminal, reports failures, proposes fixes, and keeps going until the suite passes, all while showing progress updates.
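The "run tests, fix, repeat until green" loop in this scenario can be sketched as follows. It is a minimal illustration under stated assumptions: `fix_until_green`, `run_tests`, and `apply_fix` are hypothetical names, and the toy stand-ins below replace a real test runner and a real patching step.

```python
from typing import Callable, List


def fix_until_green(run_tests: Callable[[], List[str]],
                    apply_fix: Callable[[str], None],
                    max_rounds: int = 5) -> bool:
    """Run the suite, fix the first failure, and repeat.

    Returns True once a run reports no failures, or False if the
    round budget runs out before a clean run is observed.
    """
    for round_no in range(1, max_rounds + 1):
        failures = run_tests()
        if not failures:
            print(f"round {round_no}: suite passes")
            return True
        print(f"round {round_no}: {len(failures)} failing, fixing {failures[0]}")
        apply_fix(failures[0])
    return False


# Toy stand-ins: a list of failing test names, "fixed" by removing them.
failing = ["test_access_rules", "test_read_only"]
result = fix_until_green(lambda: list(failing), failing.remove)
```

The `max_rounds` cap is the important design choice: it keeps an agent from looping forever on a failure it cannot fix and hands the problem back to an engineer instead.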

The model’s stronger multi-file and multi-language skills mean it can work across large repos that mix TypeScript, Python, and Terraform. It keeps context across long sessions, so it understands the knock-on effects of a change in one service on another. That is far beyond the single-file autocomplete offered by earlier tools, and lines up with third‑party reports that GPT‑5.3‑Codex is explicitly tuned for enhanced code and multitasking capabilities.

Because it runs about 25 percent faster than its predecessor and uses tokens more efficiently, Codex 5.3 also cuts the time and cost of high-volume coding requests. For lean teams in Brisbane or Perth, that means you can push more fixes and features through the pipeline without needing a huge increase in headcount.

It is not magic, of course. Engineers still need to review changes and own design decisions. But by letting Codex handle routine wiring, regression fixes, or tedious refactors, your people can focus on architecture, user experience, and tricky edge cases—or even offload parts of that orchestration to custom AI automation tuned to your repositories and workflows.

Teams that want to compare Codex‑style agents with other coding and reasoning models can draw on evaluations like the OpenAI O4‑mini vs O3‑mini analysis or the broader GPT‑5.2 vs Gemini 3 Pro comparison to decide where Codex 5.3 fits in their model mix.

Cutting Operational Toil and DevOps Overhead

Operations and DevOps teams across Australia often live in “ticket hell”: constant small requests, brittle pipelines, and after-hours firefighting. Codex 5.3 is tuned for this world too, not just feature work.

As an agent-style model, Codex can operate terminals, edit config files, and interact with infrastructure-as-code. For example, you can ask it to “update our CI pipeline to run security scans on every pull request and roll back on failure.” It can then modify the YAML, adjust Terraform or CloudFormation scripts if needed, and run tests to confirm things still build.

In more advanced setups, Codex can execute actual terminal commands in a controlled environment. That makes it useful for cleaning up logs, rotating credentials, or standardising service restarts. The frequent progress updates make this far less scary than a black-box script: you see every step and can hit stop instantly if something looks off.

A powerful idea here is “automated O&M”: operations and maintenance handled by an AI agent that has both coding and OS skills. OpenAI has already used an internal GPT-5.3-Codex model to help scale GPU clusters and debug production infrastructure. Those challenges are similar in kind to the ones faced by large banks, telcos, and government departments in Australia, although no direct one-to-one match has been documented.

For a small DevOps crew in, say, a regional WA utility, the value is practical: fewer repetitive config changes, faster recovery from common incidents, and clearer runbooks that Codex can help write and then follow. Human engineers still handle approvals and complex judgment calls, but the daily grind gets lighter, especially when you combine Codex with professional AI integration and operations support rather than relying on ad‑hoc experiments.

To keep an eye on how these agent patterns evolve across industries, it is worth tracking vendor roadmaps and curated resources such as Lyfe AI’s AI automation article sitemap, which surfaces new patterns for DevOps, SRE, and infra teams.

Strengthening Security, Compliance and Risk Management

Security teams in Australian organisations, especially in finance and government, face constant pressure. More code, more integrations, more risk – but not always more people. Codex 5.3 brings new tools to this space.

It is the first model that OpenAI’s Preparedness Framework has officially rated as having “High capability” in cybersecurity, specifically in vulnerability detection. In practice, that means it has been assessed and tuned to spot risky patterns in code. You can point it at a repository and ask it to surface potential injection flaws, insecure cryptography, or weak access control, then generate patches and tests to harden those areas.

Because it supports mid-turn steering, security leads can set guardrails like “follow our ASD Essential Eight controls” or “never suggest disabling input validation.” During a long refactor, they can pause Codex, ask why it chose a certain approach, and reject or refine changes that clash with internal policies or regulator expectations.

The model’s strong benchmark performance on OSWorld-Verified also matters here. It shows that Codex can use a desktop environment with a level of skill approaching that of human users, which is useful for tasks like hardening admin consoles or validating desktop security settings.

Of course, adopting any AI in a regulated space means risk assessments, data handling controls, and clear logs. But in a hybrid model, Codex 5.3 can become a force multiplier for overworked security engineers, helping them triage findings, generate secure-by-default patterns, and keep pace with both internal change and external threats. Any rollout should also account for documented AI risk concerns, such as the unprecedented cybersecurity risks raised around GPT‑5.3‑Codex.

Australian organisations often formalise such controls through clear usage terms and governance; pairing Codex with frameworks similar to Lyfe AI’s own AI service terms and conditions helps keep security and compliance teams comfortable as adoption scales.

Turning Data, Docs and Processes Into Working Systems

Not every everyday issue in an organisation is a pure coding problem. Many start as messy documents, rough spreadsheets, or a long email chain about “how we do things here.” Codex 5.3 is built to bridge that gap between words and working software.

Because it fuses strong coding ability with broad reasoning, Codex can read PRDs, policy documents, or even meeting notes, then turn them into practical outputs: scripts, dashboards, or simple internal tools. A council in Victoria, for example, might feed in their procedure for handling planning applications and ask Codex to build a prototype workflow tool that tracks each step and sends the right notifications.

Across the software lifecycle, Codex can support work like writing PRDs, editing copy, generating test cases from acceptance criteria, and adding monitoring hooks where your current docs say “watch this carefully.” This is where non-engineering teams start to feel the benefits, because their words become the blueprint for real automation.

The larger effective context window also helps here. While exact limits are not published, Codex 5.3 is described as managing long, multi-file tasks and extended sessions. That means it can hold more of your domain context in its “head” while it works, producing systems that reflect your actual business rules, not just generic examples from the internet.

When paired with internal reviews and user testing, this approach can turn months-long “requirements translation” projects into shorter, more iterative cycles. Teams describe what they need in plain English, Codex builds a first pass, and everyone refines from there instead of starting from a blank page.

Organisations wanting help with this translation layer often turn to specialist AI partners who understand both local regulation and automation design, allowing domain experts to stay in charge while AI handles the heavy lifting of turning documents into running workflows.

Hybrid AI Workflows That Fit Australian Teams

One important point: Codex 5.3 is not meant to replace every AI assistant in your organisation. It shines in coding-heavy and agentic workflows, but you will still want general-purpose assistants for broad conversation and non-technical tasks.

A hybrid setup is usually the better fit. For latency-sensitive autocomplete, like single-line suggestions inside VS Code, it can make sense to keep lightweight local tools or simpler cloud models. Then use Codex 5.3 through the Codex app, CLI, or IDE extensions when you need complex work such as multi-file refactors, terminal tasks, or guided deployments.
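One way to make that split explicit is a small routing function that decides which tier a request goes to. This is a sketch under assumptions, not a recommended policy: the `Task` fields, the three tier names, and the thresholds are all hypothetical and would need tuning against your own workloads.

```python
from dataclasses import dataclass


@dataclass
class Task:
    description: str
    files_touched: int
    needs_terminal: bool
    latency_sensitive: bool


def route(task: Task) -> str:
    """Pick a tier for a request.

    'agent'       - multi-file or terminal work (the Codex-style tier)
    'lightweight' - quick, single-file completions where latency matters
    'general'     - everything else, e.g. broad non-technical questions
    """
    if task.needs_terminal or task.files_touched > 1:
        return "agent"
    if task.latency_sensitive:
        return "lightweight"
    return "general"


examples = [
    Task("inline suggestion in the editor", 1, False, True),
    Task("refactor auth across services", 12, True, False),
    Task("summarise a policy document", 0, False, False),
]
for t in examples:
    print(f"{t.description} -> {route(t)}")
```

Checking for agent-worthy work first means a multi-file change is never downgraded to a fast model just because the caller flagged it as urgent.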

This pattern fits existing Australian stacks well. Many organisations already run a mix of cloud-hosted tools and on-prem systems. Codex slots in via ChatGPT paid plans, IDE plugins, or dedicated CLI tooling. An API is also planned, which will open up more custom integration options.

Under the hood, Codex 5.3 runs on scalable infrastructure designed to support parallel agents and advanced features like self-debugging and infra automation. OpenAI has used it internally for GPU scaling and cache issues, which are complex, high-value problems. In a local setting, you might apply the same pattern to scaling container clusters or keeping CI runners healthy.

The key is to start with realistic goals: let Codex handle the “long, annoying” tasks that humans dislike, while you keep ownership of the architecture, priorities, and approvals. Over time, as you trust its behaviour and tune your workflows, you can expand the scope to cover more of your software and operations lifecycle, with expert help where needed from enterprise-focused AI workflow services that know how to stitch different models together.

For teams optimising cost and latency across this hybrid stack, model selection guides such as Lyfe AI’s breakdown of GPT‑5.2 Instant vs Thinking pricing and routing offer a useful playbook for deciding when to reach for heavy agent models like Codex.

Practical Ways To Start Using Codex 5.3 Today

Turning Codex 5.3 from a shiny concept into a daily tool does not need to be complicated. A focused rollout with a few clear use cases is far more effective than a big-bang launch.

First, pick two or three “everyday issues” that are frequent and annoying but low-risk. Good candidates include flaky tests, small internal tools that never get built, or repetitive CI config changes. Use the Codex app or IDE extension to let engineers and power users run guided sessions and record what works.

Second, standardise prompts and guardrails. Create templates such as “You are our secure coding assistant. Follow these rules…” and include your internal guidelines. Document simple playbooks: how to ask Codex for a refactor, how to review its changes, and when to stop and call a human expert.
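A shared template keeps those prompts consistent across a team. The sketch below uses Python's standard `string.Template`; the rule wording, the `build_prompt` helper, and the escalation text are hypothetical examples rather than a prescribed format.

```python
from string import Template
from typing import List

# Team-wide template; the opening line follows the example given above.
PROMPT = Template(
    "You are our secure coding assistant. Follow these rules:\n"
    "$rules\n"
    "Task: $task\n"
    "Stop and ask a human if: $escalation"
)


def build_prompt(task: str, rules: List[str], escalation: str) -> str:
    """Fill the shared template with a numbered rule list and a task."""
    numbered = "\n".join(f"{i}. {rule}" for i, rule in enumerate(rules, start=1))
    return PROMPT.substitute(rules=numbered, task=task, escalation=escalation)


prompt = build_prompt(
    task="Refactor the claims export module without changing its output.",
    rules=[
        "Do not touch production config.",
        "Follow our secure coding standard.",
    ],
    escalation="a change requires new credentials or schema migrations",
)
print(prompt)
```

Keeping the template in version control alongside the playbooks means a change to the rules is reviewed like any other code change.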

Third, measure results. Track metrics like time to fix certain classes of bugs, number of manual steps removed from a deployment, or security issues found per review. Codex 5.3’s 25 percent speed gains and strong benchmark scores give a useful baseline, but your own data will convince stakeholders in finance, risk, and the boardroom.
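A simple before-and-after comparison is often enough for a first pilot report. The sketch below compares median time-to-fix for one class of bug; the sample figures are invented for illustration and `percent_change` is a hypothetical helper, not a standard metric definition.

```python
from statistics import median
from typing import Sequence


def percent_change(before: Sequence[float], after: Sequence[float]) -> float:
    """Relative change in the median, as a percentage (negative means faster)."""
    b, a = median(before), median(after)
    return (a - b) / b * 100


# Invented hours-to-fix samples for one class of bug.
before_pilot = [6.0, 8.0, 5.0, 9.0, 7.0]
after_pilot = [4.0, 6.0, 5.0, 7.0, 5.5]

change = percent_change(before_pilot, after_pilot)
print(f"median time to fix changed by {change:.1f}%")
```

The median is used rather than the mean so that one unusually slow incident does not dominate a small sample.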

Finally, invest in training. Run short internal workshops where developers, analysts, and even operations staff try Codex on real tasks with a coach in the room. The model’s value appears most clearly when people learn to steer it mid-task instead of treating it as a one-shot answer engine—and when they have access to a trusted Australian AI assistant they can safely use outside pure engineering work.

To see how Codex-style capabilities compare with adjacent models you might already be piloting, it can be helpful to review summaries like the Laravel News overview of GPT‑5.3‑Codex for agent-style development, which anchors many of the practical patterns outlined here.

Conclusion and Next Steps With Codex 5.3

Codex 5.3 is not just another coding helper. It is an agent-style model built to tackle the everyday issues that slow Australian organisations down: sluggish releases, operations toil, security debt, and the long gap between “idea” and “running system.”

By combining strong coding performance, tool use, real-time steering, and lifecycle coverage from PRD to deployment, it offers a practical way to scale your impact without simply adding more people. Used well, it becomes a calm, tireless teammate that handles the grind while your staff focus on design, strategy, and customers.

If you are ready to explore Codex 5.3, start small: choose a few high-friction workflows, pilot agentic sessions with clear guardrails, and measure the gains. From there, expand into DevOps, security, and cross-team automation. The organisations that move first – and learn fastest – will set the pace for AI-powered delivery in Australia, often in partnership with local AI specialists who can help weave Codex into broader transformation roadmaps.

For a broader landscape view as you plan that roadmap, resources like Lyfe AI’s GPT‑5.2 vs Gemini 3 Pro guide sit alongside OpenAI’s own public positioning of GPT‑5.3‑Codex to help you choose the right mix of models, costs, and capabilities for your organisation.

Frequently Asked Questions

What is Codex 5.3 and how is it different from earlier Codex versions?

Codex 5.3 is OpenAI’s latest GPT‑5.3‑Codex model designed as an agent-style assistant that can read tasks, call tools, and work through workflows, not just autocomplete code. Compared with earlier Codex versions, it’s faster, more accurate on real-world software tasks, and better at orchestrating end‑to‑end processes across code, data, and internal systems.

How can Codex 5.3 help my organisation with everyday software delivery issues?

Codex 5.3 can turn tickets and requirements into working code, tests, and documentation, reducing time spent on boilerplate and repetitive fixes. It can also help triage bugs, propose patches, and update CI/CD pipelines, freeing engineers to focus on higher‑value features and architecture decisions.

What are practical examples of Codex 5.3 in IT operations and support?

In operations, Codex 5.3 can read logs, correlate alerts, suggest remediation steps, and even run pre‑approved scripts to resolve common incidents. It can also automate routine tasks like user provisioning, configuration changes, and report generation by integrating with your existing ITSM and monitoring tools.

Can Codex 5.3 be used by non‑technical teams like finance, HR, or marketing?

Yes. Non‑technical teams can describe their goals in plain language—such as building a basic workflow, cleaning data, or generating standard reports—and Codex 5.3 can design the underlying logic, scripts, or integrations. When wrapped in a secure assistant like LYFE AI, staff interact through a simple chat interface while the model handles the technical heavy lifting in the background.

How does Codex 5.3 support security and compliance in an organisation?

Codex 5.3 can help teams scan code for common vulnerabilities, draft remediation steps, and automate checks against secure coding guidelines. Paired with a governed environment such as LYFE AI’s Australian‑hosted assistant, it can also help enforce access controls, create audit trails of AI activity, and support local regulatory requirements around data handling.

Is Codex 5.3 safe to use with sensitive Australian business data?

Codex 5.3 itself is a model, so data safety depends on how it’s deployed and governed. LYFE AI offers a secure Australian AI assistant layer that keeps data within compliant infrastructure, applies role‑based access, and ensures prompts, outputs, and tool calls are logged and controlled according to your organisation’s risk and privacy policies.

How does Codex 5.3 compare to using a general GPT model for coding and automation?

General GPT models are strong at language, but Codex 5.3 is optimised for code understanding, tool use, and multi‑step technical workflows. It’s typically better at reading large codebases, working with APIs and DevOps pipelines, and acting as a hands‑on agent that can plan, execute, and verify technical tasks end‑to‑end.

What everyday problems can Codex 5.3 solve for small and mid‑sized Australian businesses?

For SMEs, Codex 5.3 can automate reporting, streamline manual data entry, build small internal tools, and keep websites or integrations up to date without hiring large engineering teams. It’s particularly useful for clearing backlogs of low‑risk work—like scripts, connectors, and documentation—so staff can focus on customers and strategy.

How do we actually get started implementing Codex 5.3 in our organisation?

Most teams start by picking 1–3 narrow use cases, such as automating a recurring report or triaging support tickets, and then integrate Codex 5.3 behind an internal chat or workflow tool. LYFE AI can help with use‑case selection, secure deployment in Australian infrastructure, integration with your systems, and change management so staff adopt the assistant safely and effectively.

Why work with LYFE AI instead of using Codex 5.3 directly from OpenAI?

Using Codex 5.3 directly gives you a powerful model but leaves security, compliance, access control, and real‑world workflow design up to your team. LYFE AI provides a secure Australian‑hosted assistant, governance frameworks, and implementation services tailored to local regulations and business practices, so you get value faster with lower operational and compliance risk.