Introduction: Why agent societies matter now

Table of Contents

- Introduction: Why agent societies matter now

- From generative AI to agentic AI: the basics and key distinctions

- Multi-agent systems vs a true society of agents

- Inside a 2026 agent society architecture and data layer

- Governance, risk, and emergent behaviour in agent societies

- Enterprise benefits and use cases for an agentic AI society

- Practical steps for enterprises preparing for agent societies

- Conclusion: Start designing your agent society now

In 2023 and 2024, enterprises rushed to deploy chatbots, copilots, and single AI helpers. By 2026, that will not be enough. The real shift will be toward something deeper: an agentic AI society inside your organisation. However, some experts argue that this kind of time-boxed prediction is hard to substantiate. Without a clear definition of what “enough” means (ROI, user satisfaction, competitive parity, or something else), it is difficult to verify whether single chatbots and copilots will truly be obsolete by 2026 or will simply evolve as part of a broader AI stack. From this angle, the shift toward an “agentic AI society” looks less like a hard breakpoint and more like a continuum: some organisations will still extract significant value from focused assistants, while others experiment with richer multi-agent systems. In practice, readiness will hinge less on the calendar year and more on each organisation’s data maturity, risk appetite, and capacity for change management.

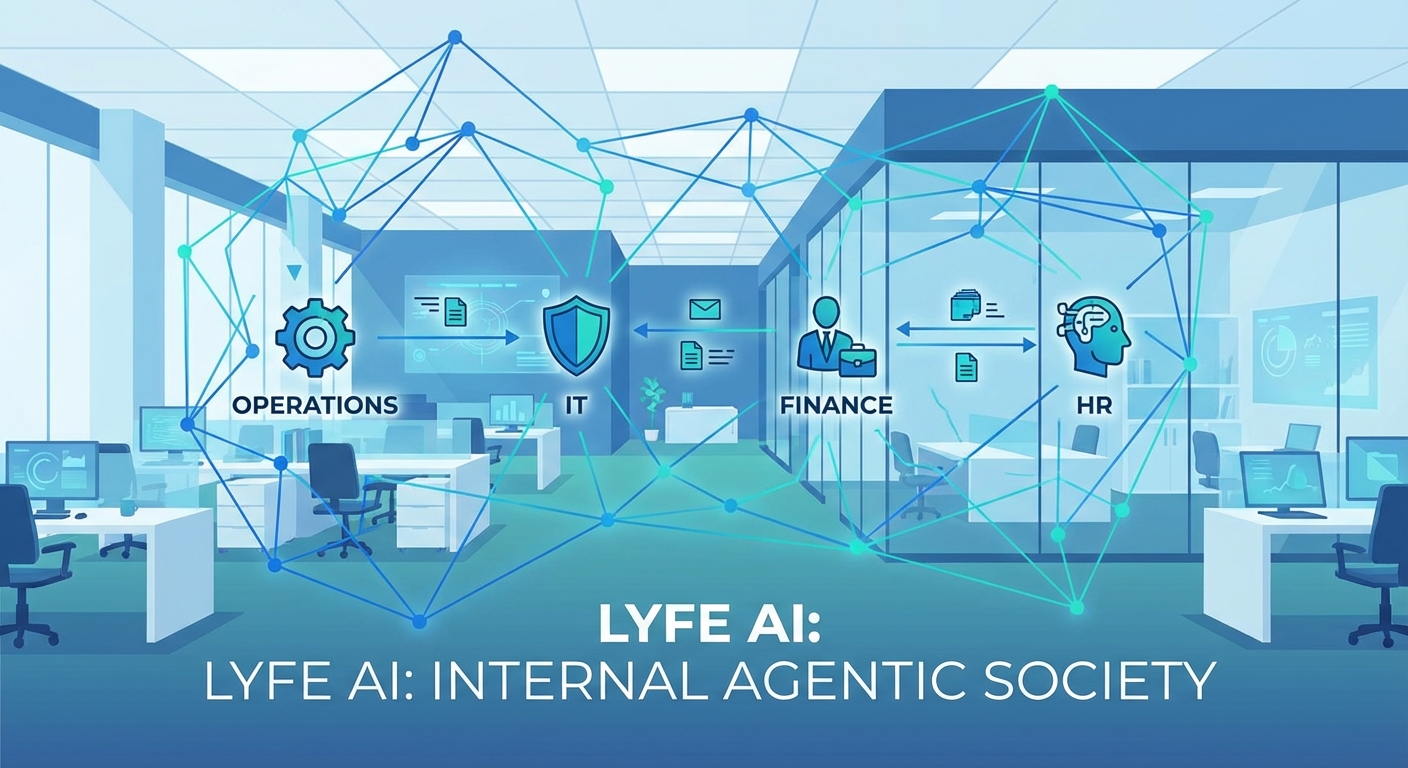

An agent society is more than one clever bot. It is a connected population of AI agents that can plan, act, negotiate, and coordinate across your whole business. Instead of one assistant stuck in a chat window, you have many autonomous agents embedded in your systems, talking to each other, and taking action on your behalf.

This change sounds abstract, almost like science fiction. But it is already forming in early multi-agent platforms from IBM, AWS, and others, where specialised agents plan, execute, and review work in loops with limited human oversight. According to IBM’s research on agentic AI, AWS guidance on autonomous agents, and Databricks’ definition of agentic AI, these platforms are rapidly evolving from isolated tools into coordinated ecosystems of agents that behave more like internal digital teams than single utilities.

In this article, we will unpack what a society of agents really is, how it differs from simple multi-agent workflows, what the architecture looks like, and why it matters for risk, scale, and strategy. Then we will break down clear steps you can take in 2025 and 2026 to get ready.

That said, some experts argue that this shift is still more aspirational than operational for many teams. A 2026 report on Australian enterprises, for example, found that while adoption of AI agents is high, many deployments remain fragmented, with roughly half of agents still running in functional silos rather than as part of a true multi-agent ecosystem. The same report flags ‘integration lagging’ as a core concern, with IT leaders warning that poorly connected agents can increase complexity instead of unlocking the promised orchestration benefits. In other words, the evolution toward coordinated digital teams is clearly underway, but the day-to-day reality inside many organisations still looks like a patchwork of smart point solutions rather than a fully unified agentic fabric.

From generative AI to agentic AI: the basics and key distinctions

To understand an agentic AI society, we need to start with the difference between generative AI and agentic AI. Generative AI, like standard large language models, mainly produces content: text, code, images, and so on. It answers prompts, but it does not keep goals over time or act on the world by itself.

Agentic AI goes further. Across major vendors, agentic AI systems are described as goal-driven agents that can perceive their environment through data, APIs, or sensors, plan a course of action, call tools and services, and then learn from feedback over many steps. They maintain memory of prior interactions and operate in loops without constant human guidance. As outlined in overviews from Aerospike on agentic AI and the Agentic AI entry on Wikipedia, this shift turns AI from a fancy typewriter into an active decision-maker that can move money, update records, or trigger workflows.

In practice, that might look like a finance agent that monitors cash flow, pulls data from your ERP, simulates scenarios, and then launches payments or flags risks. Or an operations agent that watches IoT data, predicts equipment failures, and books maintenance crews. The key is that these agents do not just reply – they pursue goals.
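To make the perceive, plan, act, and remember loop more concrete, here is a minimal Python sketch of a toy cash-flow agent. Everything in it is hypothetical: the `Agent` class, the `top_up` and `wait` tools, and the rule-based `plan` method, which stands in for the language-model call a real agent would make. It only shows the shape of the loop: observe the environment, check the goal, choose a tool, act, and record what happened.

```python
from dataclasses import dataclass, field
from typing import Callable, Dict, List

World = Dict[str, float]


@dataclass
class Agent:
    """Toy goal-driven agent: perceive -> check goal -> plan -> act -> remember."""
    goal: Callable[[World], bool]                # True once the goal is satisfied
    tools: Dict[str, Callable[[World], World]]   # tool name -> function that changes the world
    memory: List[str] = field(default_factory=list)

    def plan(self, observation: World) -> str:
        # Hypothetical rule; a real agent would ask a language model to pick a tool here.
        return "top_up" if observation["balance"] < observation["target"] else "wait"

    def run(self, world: World, max_steps: int = 10) -> World:
        for step in range(max_steps):
            observation = dict(world)            # perceive: snapshot the environment
            if self.goal(observation):
                self.memory.append(f"step {step}: goal reached")
                break
            action = self.plan(observation)      # plan: choose the next tool
            world = self.tools[action](world)    # act: call the tool
            self.memory.append(f"step {step}: {action} -> balance {world['balance']}")
        return world


def top_up(world: World) -> World:
    """Hypothetical tool: move 100 units into the operating account."""
    return {**world, "balance": world["balance"] + 100}


def wait(world: World) -> World:
    """Hypothetical no-op tool."""
    return world


agent = Agent(goal=lambda w: w["balance"] >= w["target"],
              tools={"top_up": top_up, "wait": wait})
final = agent.run({"balance": 50, "target": 250})
print(final["balance"])  # 250
for entry in agent.memory:
    print(entry)
```

The `max_steps` cap is a deliberate design choice: an agent that acts in loops should always have a hard stop, so a bad plan cannot run indefinitely.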

Enterprise platforms already lean in this direction. IBM and others describe agent orchestration layers where a “manager” agent can break a business goal into subtasks and route those to specialist worker agents. These agents call tools like RPA bots, external SaaS systems, or real-time data streams to act in the real world and then report back. That orchestration is the seed from which full agent societies will grow in 2026.
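A manager-worker orchestration layer of this kind can be sketched in a few lines. The skill names, the fixed plan returned by `decompose`, and the lambda workers are hypothetical placeholders; in a real platform the plan would come from a model and the workers would call RPA bots, SaaS APIs, or data streams.

```python
from typing import Callable, Dict, List, Tuple

# Hypothetical specialist workers: each takes a subtask and returns a short report.
WORKERS: Dict[str, Callable[[str], str]] = {
    "research": lambda task: f"research done: {task}",
    "execute": lambda task: f"executed: {task}",
    "review": lambda task: f"reviewed: {task}",
}


def decompose(goal: str) -> List[Tuple[str, str]]:
    """Manager step: split a goal into (skill, subtask) pairs.
    A real manager agent would ask a model for this plan; here it is fixed."""
    return [
        ("research", f"gather data for '{goal}'"),
        ("execute", f"carry out '{goal}'"),
        ("review", f"check results of '{goal}'"),
    ]


def orchestrate(goal: str) -> List[str]:
    reports = []
    for skill, subtask in decompose(goal):
        worker = WORKERS.get(skill)
        if worker is None:
            raise ValueError(f"no worker registered for skill '{skill}'")
        reports.append(worker(subtask))   # route down, collect the report back up
    return reports


for report in orchestrate("renew supplier contract"):
    print(report)
```

Routing by a declared skill, and failing loudly when no worker matches, keeps the top-down flow easy to audit.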

Organisations looking for a secure, local entry point into this new paradigm can start by using a secure Australian AI assistant for everyday tasks and then extending it into more agentic patterns as maturity grows. For teams needing deeper support, specialised AI services can help map existing workflows onto agent-based designs before the technology fully matures.

Multi-agent systems vs a true society of agents

Most of what enterprises call “agentic” today stops at multi-agent systems. These are setups where several specialised agents work on a task under a central coordinator. Tools like LangChain, crewAI, and similar frameworks make it simple to assemble a planner, a researcher, an executor, and a reviewer into a neat workflow.

In this model, a central orchestrator agent takes a user goal, breaks it into subtasks, and routes these to specialised agents. Communication flows mainly top-down, like a traditional corporate hierarchy. The planner decides, the workers execute, and then results go back up for aggregation. This is powerful and already useful, but it is not yet a society.

A society of agents means something richer. You still have many agents with different skills and roles, but they share a common environment, such as a unified data layer and tool set. They communicate not just with a manager but with each other, often using shared protocols. They may even have different “owners” inside the same enterprise, such as marketing, finance, and security teams each owning some agents that must cooperate or negotiate.
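The practical difference from a central coordinator lies mainly in who talks to whom. Below is a hedged sketch of a shared environment: an in-memory publish/subscribe bus and a common `Message` format that agents owned by different teams use to talk to each other directly. The `MessageBus` class, the topic names, and the budget limit are illustrative assumptions, not any specific product's API; a production system would use a real event broker.

```python
from collections import defaultdict
from dataclasses import dataclass
from typing import Callable, DefaultDict, List


@dataclass(frozen=True)
class Message:
    """Shared message format every agent understands, whichever team owns it."""
    sender: str
    topic: str
    body: dict


class MessageBus:
    """In-memory publish/subscribe channel standing in for a real event broker."""

    def __init__(self) -> None:
        self.subscribers: DefaultDict[str, List[Callable[[Message], None]]] = defaultdict(list)
        self.log: List[Message] = []   # every message, kept for audit and observability

    def subscribe(self, topic: str, handler: Callable[[Message], None]) -> None:
        self.subscribers[topic].append(handler)

    def publish(self, msg: Message) -> None:
        self.log.append(msg)
        for handler in list(self.subscribers[msg.topic]):
            handler(msg)


bus = MessageBus()


def finance_agent(msg: Message) -> None:
    """Finance-owned agent reacting directly to a marketing-owned agent's proposal."""
    verdict = "approved" if msg.body["budget"] <= 10_000 else "needs review"  # hypothetical limit
    bus.publish(Message("finance", "budget.decision",
                        {"campaign": msg.body["name"], "verdict": verdict}))


bus.subscribe("campaign.proposed", finance_agent)
bus.publish(Message("marketing", "campaign.proposed", {"name": "spring-sale", "budget": 8_000}))

for m in bus.log:
    print(m.sender, m.topic, m.body)
```

Because every agent publishes to the same bus in the same format, adding a new team's agent means subscribing it to the relevant topics rather than rewiring a central coordinator.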

In a true agent society, agents can form longer-lived relationships, norms, and patterns of coordination. For example, a compliance agent may automatically review and sometimes overrule a pricing agent’s decisions, based on policy. A customer-service agent might learn to ask a risk agent for approval before offering a large refund. Over time, these recurring interactions shape how the whole group behaves, the same way human teams develop habits and rules.

This is why many researchers argue that current orchestrated systems are only a stepping stone. They resemble microservices for reasoning, with clear APIs and routes, but they lack the self-governing norms and distributed decision-making that would justify calling them a society. Moving toward that richer model will be a major focus for 2026 and beyond.
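The compliance and refund examples can be written down as explicit norms to show the mechanics. This sketch is a simplification: the norms are hard-coded rules that decide which peer agents must sign off before an action runs, whereas in a genuine society such rules might be learned or negotiated over time. The agent names, the price floor, and the refund limit are hypothetical values.

```python
from dataclasses import dataclass
from typing import Callable, List, Tuple


@dataclass
class Proposal:
    agent: str     # which agent wants to act
    action: str    # "set_price" or "refund" in this toy example
    amount: float


# Hypothetical policy values; a real deployment would load these from a policy store.
PRICE_FLOOR = 90.0
REFUND_REVIEW_LIMIT = 500.0

Review = Callable[[Proposal], Tuple[bool, str]]


def compliance_agent(p: Proposal) -> Tuple[bool, str]:
    if p.action == "set_price" and p.amount < PRICE_FLOOR:
        return False, f"overruled by compliance: price {p.amount} below floor {PRICE_FLOOR}"
    return True, "ok"


def risk_agent(p: Proposal) -> Tuple[bool, str]:
    if p.amount > 2 * REFUND_REVIEW_LIMIT:
        return False, "risk: escalate to a human"
    return True, "ok"


def required_reviewers(p: Proposal) -> List[Review]:
    """The 'norms': which peers must sign off before this action runs."""
    if p.action == "set_price":
        return [compliance_agent]
    if p.action == "refund" and p.amount > REFUND_REVIEW_LIMIT:
        return [risk_agent]
    return []


def decide(p: Proposal) -> str:
    for reviewer in required_reviewers(p):
        approved, reason = reviewer(p)
        if not approved:
            return reason
    return "executed"


print(decide(Proposal("pricing", "set_price", 80.0)))
print(decide(Proposal("customer_service", "refund", 800.0)))
print(decide(Proposal("customer_service", "refund", 1500.0)))
```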

For enterprises that want a guided path, Lyfe AI’s focus on secure, innovative AI solutions and its professional implementation services can provide a bridge from today’s orchestrated workflows to tomorrow’s more autonomous societies, without losing control over governance or compliance.

Industry analyses like Exabeam’s overview of agentic AI use cases and strategic viewpoints from BCG on AI agents in business underline that these richer societies are expected to reshape not just IT stacks, but operating models themselves.

Inside a 2026 agent society architecture and data layer

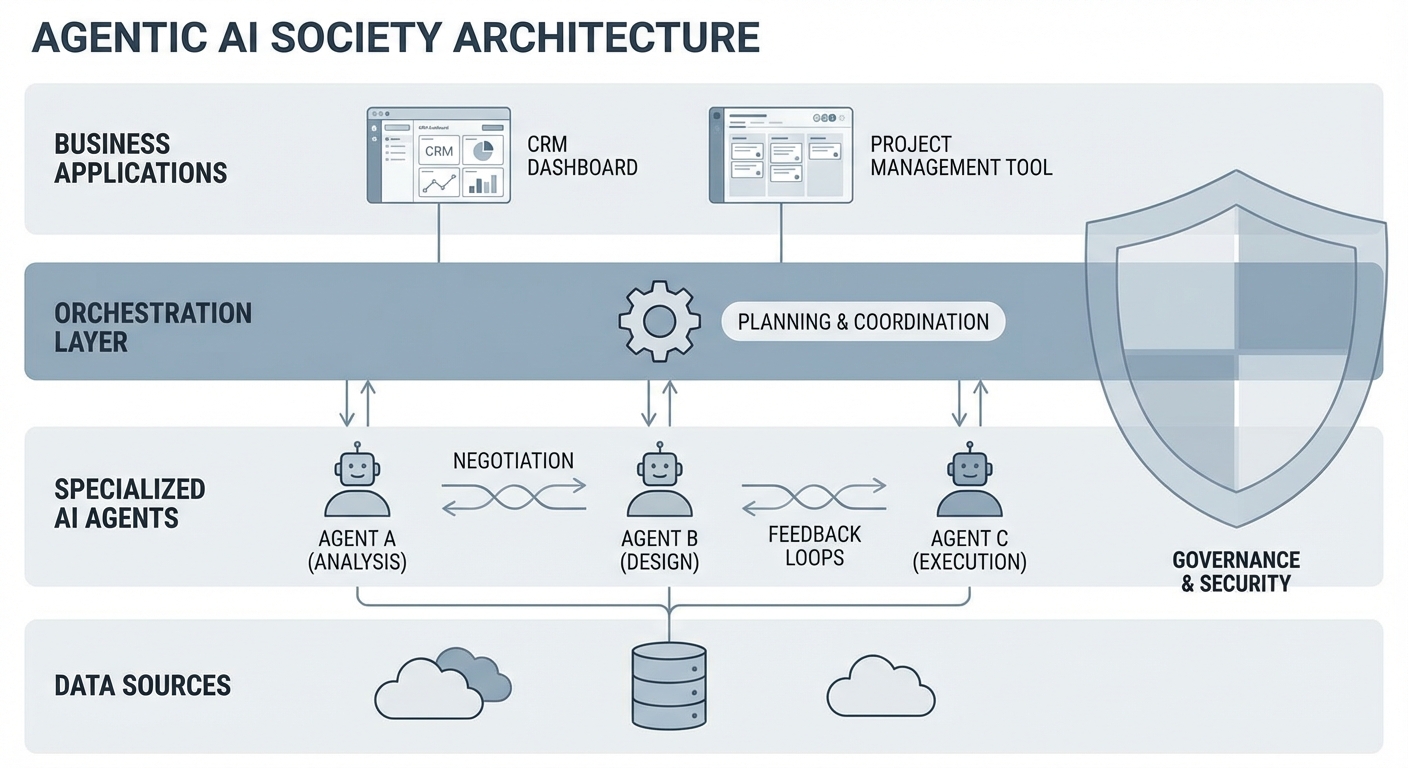

So what does a 2026 “agent society” actually look like under the hood? You can imagine several layers, all built on top of existing cloud and data platforms most enterprises already use.

At the bottom, you have an LLM and tools layer. Here sit one or more foundation models that handle language understanding and reasoning, similar to today’s top models. Around them is a tool library: APIs for internal systems, robotic process automation bots, databases, CRM, HR platforms, and external SaaS. Real-time data infrastructure, such as event streams and low-latency databases, feeds live signals into the agents’ world.

Above that is the agent runtime and coordination layer. This hosts individual agents, manages their memory stores, and tracks state across long-running tasks. It also provides messaging channels so agents can talk to each other, plus routing logic and sometimes market-like mechanisms such as bidding for tasks or requesting help from a specialised peer. This is where frameworks inspired by today’s orchestration tools will evolve into richer societies.
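The market-like mechanism mentioned above can be illustrated with a simple task auction, loosely in the spirit of the contract-net protocol: each capable agent bids a cost, and the cheapest bid wins. The `WorkerAgent` class, its skills, and the queue-length cost function are assumptions made for illustration.

```python
from dataclasses import dataclass, field
from typing import List, Optional, Set


@dataclass
class WorkerAgent:
    name: str
    skills: Set[str]
    queue: List[str] = field(default_factory=list)

    def bid(self, skill: str) -> Optional[float]:
        """Return a cost estimate, or None if this agent lacks the skill."""
        if skill not in self.skills:
            return None
        return 1.0 + len(self.queue)   # hypothetical cost: busier agents bid higher


def auction(task: str, skill: str, agents: List[WorkerAgent]) -> Optional[str]:
    bids = [(agent.bid(skill), agent.name, agent) for agent in agents]
    valid = [b for b in bids if b[0] is not None]
    if not valid:
        return None                    # nobody can do it: escalate elsewhere
    _, _, winner = min(valid, key=lambda b: (b[0], b[1]))  # cheapest bid; name breaks ties
    winner.queue.append(task)
    return winner.name


agents = [
    WorkerAgent("invoice-bot", {"invoicing"}),
    WorkerAgent("fin-ops-1", {"invoicing", "reconciliation"}),
    WorkerAgent("fin-ops-2", {"reconciliation"}),
]
assignments = [auction(f"task-{i}", "invoicing", agents) for i in range(3)]
print(assignments)
```

Making busier agents bid higher spreads work without a central scheduler; ties are broken by name only so that the result is deterministic.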

On top sits the governance and observation layer. This includes policy engines, logging, audit trails, and human control points. It is where you encode which agents can touch which systems, under what conditions, and with what approval thresholds. Dashboards and observability tools allow teams to see not only single-agent performance but how the whole society behaves: which agents collaborate often, where bottlenecks form, and how decisions flow.
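At its simplest, a policy engine is a lookup of which agent may touch which system, plus an approval threshold and an audit record for every decision. The sketch below assumes a deny-by-default table; the agent names, systems, and limits are hypothetical.

```python
from typing import Dict, List, Tuple

# Hypothetical deny-by-default policy: (agent, system) -> max amount without human sign-off.
POLICY: Dict[Tuple[str, str], float] = {
    ("payments-agent", "erp"): 10_000.0,
    ("support-agent", "crm"): 0.0,
}
AUDIT_LOG: List[Tuple[str, str, float, str]] = []


def authorise(agent: str, system: str, amount: float = 0.0, human_approved: bool = False) -> str:
    limit = POLICY.get((agent, system))
    if limit is None:
        decision = "deny"                     # no rule means no access
    elif amount <= limit or human_approved:
        decision = "allow"
    else:
        decision = "needs_human_approval"     # above threshold: route to a person
    AUDIT_LOG.append((agent, system, amount, decision))   # every decision is recorded
    return decision


print(authorise("payments-agent", "erp", 2_500))
print(authorise("payments-agent", "erp", 50_000))
print(authorise("support-agent", "erp"))
```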

Finally, the user and business interface layer connects the society to staff and customers. Employees may interact through chat, voice, or embedded assistants in tools like CRM and ERP. But under the surface, their request may trigger a whole cluster of agents, each handling research, risk checks, execution, and quality review. By 2026, well-designed enterprises in Australia will view this cluster as a new kind of digital organisation, not just as “the chatbot.”

Choosing the right models for this stack matters. Resources like the GPT-5.2 Instant vs Thinking routing guide for SMBs and the GPT-5.2 vs Gemini 3 Pro comparison show how different foundation models can be paired and routed inside a broader agent society to balance cost, latency, and depth of reasoning.
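Routing between model tiers can start as a plain heuristic before anything smarter is introduced. In this sketch, `fast-model` and `deep-model` are placeholder tier names, not real model identifiers, and the length cutoff and task flags are assumptions you would tune against your own cost and latency data.

```python
from dataclasses import dataclass

# Placeholder tier names, not real model identifiers.
FAST_TIER = "fast-model"
DEEP_TIER = "deep-model"


@dataclass
class Task:
    text: str
    needs_multi_step_reasoning: bool = False
    high_stakes: bool = False


def route(task: Task, long_input_chars: int = 4_000) -> str:
    """Send routine work to the cheap, low-latency tier; escalate depth-heavy or risky work."""
    if task.high_stakes or task.needs_multi_step_reasoning:
        return DEEP_TIER
    if len(task.text) > long_input_chars:   # hypothetical rule: treat long inputs as deep work
        return DEEP_TIER
    return FAST_TIER


print(route(Task("Summarise this customer email")))
print(route(Task("Model three cash-flow scenarios", needs_multi_step_reasoning=True)))
```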

Teams that are already experimenting with smaller, embedded assistants can gradually evolve them into agents connected through a common services layer; the automation and custom AI model services from Lyfe AI are one example of how this capability can be woven directly into existing SaaS and on-prem environments, rather than bolted on as an afterthought.

Governance, risk, and emergent behaviour in agent societies

Governance is where the idea of an agent society gets serious. When you allow many autonomous agents to act inside your business, the collective behaviour matters as much as any single decision. Yet current industry materials say almost nothing about how agent societies set norms, resolve conflicts, or share authority over time.

In a simple orchestrated system, risk is easier to picture. You can gate everything through a central coordinator, cap its permissions, and have humans review important actions. In a richer society, where peer agents can trigger each other and alter shared data, new failure modes appear. Agents could unintentionally amplify each other’s mistakes, form feedback loops, or create opaque chains of reasoning that are hard to audit.

There are also questions of fairness and alignment. If several agents “negotiate” over a decision – for example, sales, risk, and compliance agents debating a large deal – how do you ensure the outcome matches human policy and law? Do some agents get veto powers? Do you introduce voting or reputation systems for agents, similar to how we trust some human experts more than others?
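One way to make these questions concrete is a weighted vote with veto rights. The sketch below is one possible design among many, not an established standard: each agent's vote is weighted by a reputation score, any designated veto holder can block the outcome, and otherwise a weighted majority is required. The reputation values and the 0.5 threshold are hypothetical.

```python
from typing import Dict, Set

APPROVAL_SHARE = 0.5  # hypothetical: weighted "yes" share must exceed this


def society_decision(votes: Dict[str, bool],
                     reputation: Dict[str, float],
                     veto_holders: Set[str]) -> str:
    """Reputation-weighted vote in which designated agents can veto."""
    # Any veto holder that explicitly votes "no" blocks the decision outright.
    for agent in sorted(veto_holders):
        if votes.get(agent) is False:
            return f"vetoed by {agent}"
    total = sum(reputation[agent] for agent in votes)
    yes = sum(reputation[agent] for agent, vote in votes.items() if vote)
    if total > 0 and yes / total > APPROVAL_SHARE:
        return "approved"
    return "rejected"


reputation = {"sales": 0.6, "risk": 0.9, "compliance": 1.0}
print(society_decision({"sales": True, "risk": False, "compliance": True}, reputation, {"compliance"}))
print(society_decision({"sales": True, "risk": True, "compliance": False}, reputation, {"compliance"}))
```

Whether veto rights, weights, and thresholds like these match human policy is exactly the governance question raised above; the code only makes the rules explicit and testable.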

Another missing piece is how to measure society-level performance. Most current metrics focus on accuracy, latency, or cost per call for individual models or workflows. But an enterprise agent society may also need measures of resilience (how well it handles agent failures), coordination efficiency (how much overhead emerges from agent communication), and systemic bias (how the combination of agents treats different customers or regions).
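These society-level measures can be computed from task records that an orchestration layer could log. The record fields below (`region`, `messages`, `agent_failed`, `completed`, `approved`) are assumptions, and the formulas are deliberately crude proxies: messages per completed task for coordination overhead, the completion rate among tasks hit by an agent failure for resilience, and the gap in approval rates between regions as a first signal of systemic bias, which would need proper statistical analysis before drawing conclusions.

```python
from collections import defaultdict
from typing import Dict, List


def society_metrics(tasks: List[dict]) -> Dict[str, float]:
    """Crude society-level health signals computed from per-task records."""
    completed = [t for t in tasks if t["completed"]]
    # Coordination overhead: agent-to-agent messages spent per completed task.
    overhead = sum(t["messages"] for t in tasks) / max(len(completed), 1)
    # Resilience: share of tasks that still completed after some agent failed.
    hit = [t for t in tasks if t["agent_failed"]]
    resilience = (sum(t["completed"] for t in hit) / len(hit)) if hit else 1.0
    # Systemic bias signal: spread of approval rates across regions.
    approvals_by_region: Dict[str, List[bool]] = defaultdict(list)
    for t in completed:
        approvals_by_region[t["region"]].append(t["approved"])
    rates = [sum(v) / len(v) for v in approvals_by_region.values()]
    gap = (max(rates) - min(rates)) if rates else 0.0
    return {"coordination_overhead": overhead, "resilience": resilience, "approval_rate_gap": gap}


# Hypothetical records from a small pilot.
tasks = [
    {"region": "NSW", "messages": 6, "agent_failed": False, "completed": True, "approved": True},
    {"region": "NSW", "messages": 4, "agent_failed": True, "completed": True, "approved": True},
    {"region": "QLD", "messages": 8, "agent_failed": True, "completed": False, "approved": False},
    {"region": "QLD", "messages": 3, "agent_failed": False, "completed": True, "approved": False},
]
print(society_metrics(tasks))
```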

For Australian organisations, regulatory expectations will rise quickly. Local regulators already care about model transparency and accountability, and will likely extend that to autonomous agents. By 2026, boards will ask not just “Is our AI safe?” but “Is our entire agent society aligned with our duty of care and local law?” Designing governance frameworks now will avoid rushed fixes later.

For organisations that prefer clearly defined guardrails from day one, Lyfe AI’s published terms and conditions and its emphasis on secure deployment provide a template for how to encode boundaries, auditability, and human control in real-world agent systems.

Enterprise benefits and use cases for an agentic AI society

With all this complexity, why should enterprises push toward an agent society at all? The simple answer is scale. Single agents can impress, but they do not scale execution. They often just scale confusion, because one model is expected to know everything and do everything for everyone.

A society of agents lets you split work into focused roles. You can have planners, operators, reviewers, and watchdogs, all tuned for their specific tasks. This specialisation reduces cognitive overload for each agent, much like roles in a human company. It also allows you to add or swap agents without redesigning the entire system, giving you a more modular and resilient AI layer.

In concrete terms, imagine a retail bank in Australia. Each relationship manager might have a persistent personal agent that understands their portfolio. That agent, in turn, cooperates with pricing agents, compliance agents, and customer-service agents spread across the organisation. When a client asks for a complex product, this small society handles research, simulation, risk checking, document generation, and onboarding, often in minutes rather than days.

Or consider an infrastructure operator. Field engineers could be supported by safety agents, asset-health agents, and logistics agents. When a sensor flags a fault, the agents collectively decide whether to dispatch a crew, how urgent it is, what parts are needed, and how to route trucks to minimise downtime and emissions. Humans approve and supervise, but the heavy lifting happens within the agent society.

Another advantage is deeper organisational integration. Many analysts expect that employees will have persistent AI agents that act across HR, IT, finance, CRM, and operations, within defined authority limits. These agents will carry long-term context, understand work habits, and plug into the wider society as needed. Done well, this will feel less like “using an app” and more like working alongside a quiet, highly capable digital team.

As these benefits compound, having access to continuously updated guidance on model capabilities becomes vital; references such as the OpenAI O4‑mini vs O3‑mini comparison help teams decide which agent types should handle fast, inexpensive tasks versus deep, analytical work, especially in cost-sensitive Australian SMB contexts.

For leaders who want a snapshot of how agentic concepts are evolving across industries, the curated updates in Lyfe AI’s article sitemap can act as a living reference on emerging use cases, regulatory interpretations, and architectural patterns.

Practical steps for enterprises preparing for agent societies

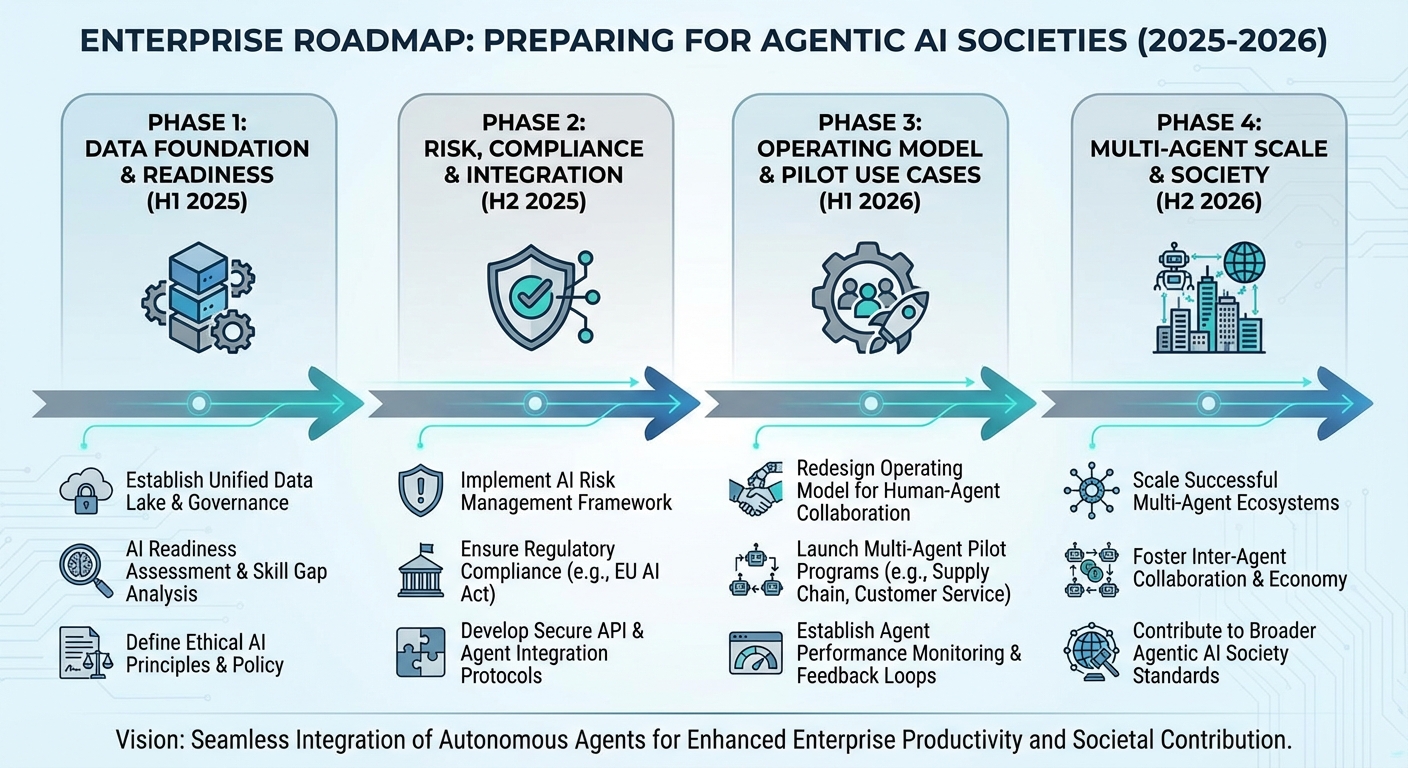

Moving toward an agentic AI society does not mean you must flip a switch overnight. In fact, trying to leap straight from chatbots to complex societies is a recipe for chaos. A better approach is to build the foundations in stages while keeping the 2026 goal in mind.

First, strengthen your data and tool layer. Agents are only as useful as the systems they can safely access. Map your key business processes across finance, operations, and customer experience, then expose them as clear, well-governed APIs and RPA flows. Clean up data quality issues that would confuse autonomous agents, and invest in real-time event streams for time-sensitive domains like fraud, logistics, and energy.

Second, experiment with orchestrated multi-agent workflows in narrow use cases. Start with a manager-worker pattern where a top-level agent plans and delegates to a small set of specialised agents. Focus on areas with clear guardrails, such as internal knowledge search plus draft generation, backed by a review agent that checks outputs before they reach customers. Measure failure modes carefully and document lessons.
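A narrow pilot of this kind can be sketched as a search agent, a draft agent, and a review agent that gates every answer before it reaches a customer. The knowledge base entry, the citation check, and the banned-phrase list are hypothetical stand-ins for whatever review criteria your team agrees on; the failure counter reflects the advice to measure failure modes.

```python
from collections import Counter
from typing import Dict, Optional, Tuple

# Hypothetical internal knowledge base with source tags.
KNOWLEDGE: Dict[str, str] = {
    "leave policy": "Annual leave requests need manager approval in advance. [source: HR-Policy-4]",
}
BANNED_PHRASES = ("guaranteed", "legal advice")
FAILURE_REASONS: Counter = Counter()   # tally of why drafts were held back during the pilot


def search_agent(query: str) -> Optional[str]:
    return KNOWLEDGE.get(query.strip().lower())


def draft_agent(query: str, snippet: Optional[str]) -> str:
    if snippet is None:
        return f"Sorry, I could not find anything about '{query}'."
    return f"Answer: {snippet}"


def review_agent(draft: str) -> Tuple[bool, str]:
    """Gate: only cited, policy-safe drafts may reach a customer."""
    if "[source:" not in draft:
        return False, "no cited source"
    lowered = draft.lower()
    for phrase in BANNED_PHRASES:
        if phrase in lowered:
            return False, f"banned phrase: {phrase}"
    return True, "ok"


def handle(query: str) -> Dict[str, str]:
    draft = draft_agent(query, search_agent(query))
    ok, reason = review_agent(draft)
    if not ok:
        FAILURE_REASONS[reason] += 1
    return {"status": "sent" if ok else "held_for_human", "reason": reason, "draft": draft}


print(handle("Leave policy")["status"])
print(handle("bonus scheme")["status"])
print(FAILURE_REASONS)
```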

Third, design early governance and monitoring for collective behaviour. Even with a small group of agents, track not just individual accuracy but how agents interact. Are there loops or bottlenecks? Does a risk agent get consulted when it should? Use these pilots to shape policies about agent permissions, escalation rules, and human-in-the-loop checkpoints.
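The questions above can be answered from an interaction log. This sketch assumes each agent-to-agent message is recorded as a `(case_id, sender, receiver)` tuple and that you know which cases required a risk check; the log contents, agent names, and the ping-pong threshold are hypothetical.

```python
from collections import Counter
from typing import List, Set, Tuple

Interaction = Tuple[str, str, str]   # (case_id, sender, receiver)

# Hypothetical log from a small pilot.
LOG: List[Interaction] = [
    ("c1", "support", "risk"), ("c1", "risk", "support"),
    ("c2", "support", "pricing"),
    ("c3", "pricing", "compliance"), ("c3", "compliance", "pricing"),
    ("c3", "pricing", "compliance"), ("c3", "compliance", "pricing"),
]
NEEDS_RISK_CHECK: Set[str] = {"c1", "c2"}   # cases where policy says risk must be consulted


def missed_risk_checks(log: List[Interaction], cases: Set[str]) -> List[str]:
    consulted = {case for case, _, receiver in log if receiver == "risk"}
    return sorted(cases - consulted)


def busiest_receivers(log: List[Interaction], top: int = 1) -> List[Tuple[str, int]]:
    """Agents receiving the most messages are candidate bottlenecks."""
    return Counter(receiver for _, _, receiver in log).most_common(top)


def ping_pong_cases(log: List[Interaction], threshold: int = 3) -> List[str]:
    """Cases where the same two agents exchange at least `threshold` messages (a possible loop)."""
    pair_counts = Counter((case, frozenset((sender, receiver))) for case, sender, receiver in log)
    return sorted({case for (case, _pair), n in pair_counts.items() if n >= threshold})


print(missed_risk_checks(LOG, NEEDS_RISK_CHECK))
print(busiest_receivers(LOG))
print(ping_pong_cases(LOG))
```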

Finally, invest in skills and culture. Your teams need at least a basic shared language around agent roles, protocols, and safety. Train product owners, architects, and risk leaders together so they can co-design your future agent society, instead of working in silos. By the time 2026 arrives, you want your organisation to think of AI not as a set of tools, but as a carefully managed digital ecosystem that grows with your strategy.

Rather than assembling all of this from scratch, many organisations partner with practitioners who live and breathe this space; Lyfe AI’s professional services and its broader portfolio of AI offerings are designed to help Australian teams move from pilot agents to production-grade societies with appropriate security, privacy, and compliance baked in.

Conclusion: Start designing your agent society now

Agentic AI societies are still emerging, and there are many open questions about theory, governance, and large-scale operations. But the broad direction is clear. Enterprises are moving from single chatbots toward orchestrated networks of specialised agents, and the next step is treating those networks as living digital organisations with their own norms and behaviours.

By 2026, Australian enterprises that plan for this shift will have a major edge. They will run leaner operations, respond faster to change, and manage AI risk at the system level rather than fighting fires one model at a time. The work starts now: tidy your data, expose your tools, pilot multi-agent workflows, and build governance that can stretch from humans to whole agent societies. Those who treat AI as a new kind of workforce, not just a feature, will set the pace in the next wave of digital transformation.

For leaders ready to move from intent to implementation, anchoring your roadmap around a secure, locally hosted AI assistant and extending through bespoke automation and custom models provides a pragmatic, stepwise path into agentic AI that aligns with both Australian regulatory expectations and global best practice.

Frequently Asked Questions

What is an agentic AI society in an enterprise context?

An agentic AI society is a connected population of autonomous AI agents that can plan, act, negotiate, and coordinate across your business systems. Instead of one chatbot in a single interface, you have many specialised agents embedded in workflows (finance, HR, supply chain, sales) that communicate with each other and take actions on your behalf under defined policies and guardrails.

How is an agentic AI society different from simple multi-agent systems or workflows?

A simple multi-agent system usually means a few agents passing tasks along a fixed workflow, often still heavily supervised by humans. An agentic AI society adds more autonomy, shared memory, governance, and coordination layers so agents can dynamically plan, delegate, negotiate priorities, and adapt to changing conditions across the organisation, not just execute a pre-scripted sequence.

Why do enterprises need to prepare for agentic AI societies by 2026?

By 2026, major platforms from IBM, AWS, Databricks, and others are expected to make agentic architectures mainstream, enabling competitors to automate complex, cross-functional work. Enterprises that only deploy single chatbots or copilots risk falling behind on efficiency, decision speed, and personalised services if they don’t start building the data, governance, and architecture foundations for coordinated AI agents now.

What are examples of use cases for an agentic AI society inside a company?

Examples include autonomous order-to-cash flows where agents handle contract checks, credit risk, invoicing, and payment reminders while coordinating with ERP and CRM systems. Other cases are proactive supply chain agents that renegotiate with suppliers, compliance agents that continuously monitor policy breaches, and HR agents that coordinate onboarding tasks across IT, payroll, and learning systems.

How should my organisation start preparing for agentic AI in 2026?

Start by improving data quality and access, defining clear guardrails for what agents can and cannot do, and mapping a few high-impact, repetitive cross-team processes. Then run controlled pilots with agent platforms (for example on AWS, IBM, or Databricks) and work with a specialist partner like LYFE AI to design the architecture, governance model, and change management required to scale safely.

What are the main risks of deploying an agentic AI society in my enterprise?

Key risks include agents taking unintended actions due to poor constraints, propagation of errors across multiple systems, data leakage between departments, and opaque decision chains that make compliance harder. Mitigating these requires robust access control, explicit policies and reward structures for agents, human-in-the-loop checkpoints for high-risk actions, and central observability over what agents are doing.

How does an agentic AI society impact governance, compliance, and security?

Because agents can act across multiple systems, you need unified governance: consistent identity and access management, audit logs of agent decisions, and policy engines that enforce regulatory constraints. A well-designed agentic society routes all actions through governed APIs, logs rationales for key decisions, and gives risk, legal, and security teams dashboards to monitor and intervene when necessary.

Do I need to replace my existing chatbots and copilots to move to an agentic AI society?

Not necessarily; many organisations will evolve from single assistants to coordinated agents by wrapping existing copilots in an orchestration layer and adding specialised agents around them. The critical shift is architectural: moving from isolated tools to a shared agent platform with common memory, policies, and communication protocols, which LYFE AI can help design and implement.

What does the reference architecture for an enterprise agentic AI society look like?

A typical architecture includes a core orchestration layer for planning and delegation, a set of specialised domain agents, shared memory or knowledge graphs, secure connectors to enterprise systems (ERP, CRM, data warehouses), and monitoring and governance services. On top of this, you add business-specific policies and interfaces (dashboards, chat, APIs) so humans can supervise, configure, and collaborate with the agent society.

How can LYFE AI help my company adopt agentic AI before 2026?

LYFE AI helps enterprises assess readiness, identify high-ROI agent use cases, and design a safe, scalable agentic AI architecture tailored to their existing tech stack. It supports pilot builds, integration with platforms like AWS or IBM, governance design, and training for business teams, so the organisation can transition from isolated AI tools to a coordinated agent society with measurable business outcomes.