New Claude Opus 4.6: What Actually Changed?

Table of Contents

- New Claude Opus 4.6: What Actually Changed?

- Key Features: Long Context, Agents, and Fast Mode

- Should You Move to Opus 4.6 Now?

- Conclusion: Opus 4.6 as Your Next AI Workhorse

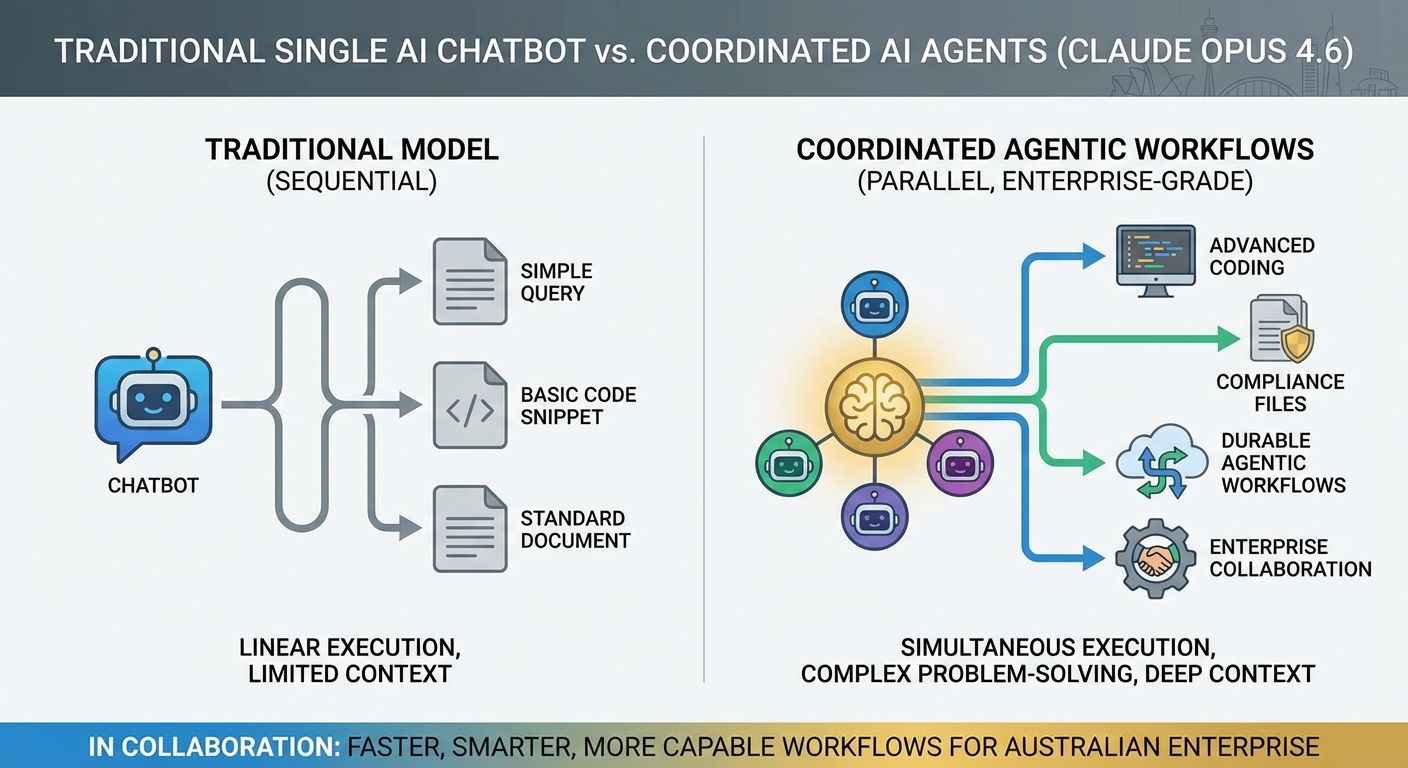

Claude Opus 4.6 is Anthropic’s most capable model yet, built for advanced coding, durable agentic workflows, and complex enterprise-grade work. It brings three big upgrades that matter in real teams.

- Much longer context – 200K tokens by default, with up to 1M-token inputs available in beta for teams working with full codebases or large document sets.

- Adaptive thinking – the model adjusts how “hard” it thinks using effort levels: low, medium, high (default), or max.

- Agent-focused design – better at planning, long-running tasks, and working as part of agent teams.

Benchmarks back this up. Opus 4.6 briefly hit #1 on Terminal-Bench 2.0 for coding at launch and still leads on Humanity’s Last Exam for reasoning. It clearly beats earlier Claude versions and strongly challenges other frontier models, as highlighted in independent reviews of Opus 4.6 versus Codex 5.3 and in recent benchmark analyses.

In practice, this means faster debugging in unfamiliar codebases, stronger research support, and better long-form writing. For Australian businesses using Claude through the API or Azure, it is now the best Claude choice for serious production work, especially when paired with a secure Australian AI assistant for everyday tasks that can be tailored to local compliance and privacy needs.

https://www.anthropic.com/news

Key Features: Long Context, Agents, and Fast Mode

Opus 4.6 is built for real workloads, not just demos. Here are the features that change how you build with it, especially when wired into specialised AI implementation services that understand production constraints.

- Up to 1M token context with supported models (beta)
  - Handle huge codebases, legal bundles, or research sets in one shot, something Australian teams often combine with custom automation and AI models for audits or data-heavy reviews.
  - Standard use is priced at the base rate up to 200K tokens; above that, long-context requests move to a higher-priced tier with roughly double the per‑million token cost.
  - Great for audits, legacy systems, or compliance reviews.

- Adaptive thinking controls
  - Use low/medium effort for quick chatbots and support.
  - Switch to high or max for deep analysis, planning, or hard bugs.
  - Helps balance speed, quality, and cost without changing models, especially when combined with careful model selection and routing strategies across different providers.

- Agent Teams (beta)
  - Multiple Opus agents can split a task and work on the pieces in parallel, as described in Anthropic’s Opus 4.6 release and early coverage of collaborative workflows.
  - Most mature today in Claude Code for large coding work.
  - The concept extends to research workflows, but that use is still early.

- Context compaction
  - Automatically summarises old messages in long chats.
  - Enables “infinite” agents that keep running over time.
  - Trades off some fine detail, so be careful with edge cases.

- Up to 128K output tokens for long reports, docs, or code, plus a speed-focused Fast Mode that delivers responses up to 2.5x faster than standard Opus 4.6 at a higher per-token cost.
  - Ideal for real-time tools where latency really matters, similar to how lightweight models like o4-mini or o3-mini are deployed for low-latency use cases in performance-focused OpenAI comparisons.
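To make the context compaction idea from the list above more concrete, here is a minimal sketch that keeps the most recent messages verbatim and collapses everything older into one summary message. It is an illustration only, not Anthropic's implementation: `compact_history`, `keep_recent`, and `naive_summarise` are hypothetical names, the summary is stored as a `user` message by assumption, and the placeholder summariser simply truncates text so the example runs without any model call.

```python
from typing import Callable, Dict, List

Message = Dict[str, str]  # {"role": ..., "content": ...}


def naive_summarise(messages: List[Message], max_chars: int = 200) -> str:
    """Placeholder summariser (assumption): join old messages and truncate.

    A real system would ask a model to write the summary instead.
    """
    joined = " | ".join(f"{m['role']}: {m['content']}" for m in messages)
    return joined[:max_chars]


def compact_history(
    messages: List[Message],
    keep_recent: int = 4,
    summarise: Callable[[List[Message]], str] = naive_summarise,
) -> List[Message]:
    """Replace all but the last `keep_recent` messages with one summary message."""
    if keep_recent < 0:
        raise ValueError("keep_recent must be non-negative")
    if len(messages) <= keep_recent:
        return list(messages)  # nothing old enough to compact

    split = len(messages) - keep_recent
    old, recent = messages[:split], messages[split:]
    summary = {
        "role": "user",  # assumption: where the summary lives is a design choice
        "content": "Summary of earlier conversation: " + summarise(old),
    }
    return [summary] + recent
```

The trade-off named in the list shows up directly: anything the summariser drops is unavailable to later turns, so details that matter for edge cases should be kept outside the compacted history.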

These upgrades build on deep Microsoft 365 integrations, especially for Excel and PowerPoint via Microsoft Foundry. That makes Opus 4.6 suitable for everyday office workflows alongside heavy engineering use, particularly when orchestrated through professional AI integration services that understand both Office and Azure environments.


Should You Move to Opus 4.6 Now?

Opus 4.6 clearly outperforms Opus 4.5 and Claude 3.5 Sonnet for coding, research, writing, and agents, and Anthropic emphasises its advances for enterprise workflows in the Claude 4.6 release notes and broader model announcements. However, some experts argue that “clearly outperforms” may overstate how universal these gains are in day-to-day work. Opus 4.6 posts strong numbers across most benchmarks, but there are edge cases, such as a slight dip on SWE-bench Verified, where it doesn’t strictly dominate Opus 4.5. Many teams also won’t notice the difference immediately without retooling their workflows to exploit its new agentic and long-context strengths.

Real-world performance also depends on factors beyond the base model, including tool integration, prompt design, latency, and cost. For some production systems, the marginal uplift over Opus 4.5 or even Claude 3.5 Sonnet may not yet justify an aggressive migration. In that sense, Opus 4.6 looks less like a blanket replacement and more like a high-upside upgrade that delivers the biggest ROI for organisations ready to redesign processes around its enterprise and agent capabilities. The trade-offs below are worth weighing.

- When it is a strong upgrade
  - You work with large codebases, legal packs, or multi-year data.
  - You need agents that run for long periods without losing the plot.
  - Your team relies on Excel, PowerPoint, and Azure for daily work.

- Risks and limits to watch
  - Several advanced features are not yet stable: the 1M context window, Agent Teams, and compaction are all in beta, and Fast Mode may have limited availability.
  - Behaviour or pricing may shift as Anthropic tunes these features.
  - High effort levels can slow simple tasks and increase cost.

- Practical rollout tips
  - Pilot Opus 4.6 on one workflow first, like debugging or reporting, ideally within a sandboxed environment managed by an experienced Australian AI partner who understands governance.
  - Benchmark low vs high effort and Fast Mode for your own stack, and compare against alternatives like GPT‑5.2 and Gemini 3 Pro using existing cross-model evaluation guides.
  - Keep fallbacks to older models while beta features settle.
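The fallback tip in the list above can be sketched as a small wrapper that tries the newest model first and drops back to an older one when a call fails. This is a minimal sketch under stated assumptions: `call_with_fallback` and the two stub backends are hypothetical, and in a real deployment each callable would wrap your own client code for the relevant provider.

```python
from typing import Callable, List, Sequence, Tuple

ModelCall = Callable[[str], str]  # takes a prompt, returns a response


def call_with_fallback(
    prompt: str, backends: Sequence[Tuple[str, ModelCall]]
) -> Tuple[str, str]:
    """Try each (name, call) pair in order; return (name, response) from the first success."""
    errors: List[str] = []
    for name, call in backends:
        try:
            return name, call(prompt)
        except Exception as exc:  # broad on purpose: any backend failure triggers fallback
            errors.append(f"{name}: {exc}")
    raise RuntimeError("all backends failed: " + "; ".join(errors))


# Stub backends (assumptions) standing in for real model clients.
def flaky_new_model(prompt: str) -> str:
    raise TimeoutError("beta feature unavailable")


def stable_old_model(prompt: str) -> str:
    return f"[older model] {prompt}"


if __name__ == "__main__":
    used, reply = call_with_fallback(
        "Summarise this ticket",
        [("new", flaky_new_model), ("old", stable_old_model)],
    )
    print(used, reply)
```

Returning the backend name alongside the response makes it easy to log how often the fallback path is taken during a pilot.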

For teams in Australia, there are no AU-specific prices yet, but Anthropic’s latest models are already accessible, including Claude 3.5 Sonnet on AWS Bedrock in the Asia Pacific (Sydney) Region and Claude Opus 4.6 on Azure, AWS Bedrock, and Google Cloud Vertex AI. You can start testing now and grow into the advanced context and agent features over time, especially if you plug them into a locally hosted assistant that respects Australian data residency and integrates with your existing AI knowledge and content footprint.


Conclusion: Opus 4.6 as Your Next AI Workhorse

Claude Opus 4.6 is built for serious coding, research, and office workflows, with long context, adaptive reasoning, and strong agent support. If you need AI that can stay on top of huge projects and still respond quickly, it is the Claude version to trial first, especially when woven into your broader AI services strategy and complemented with bespoke automation tuned to your stack and industry. We love it and are certain you will love it too.

Frequently Asked Questions

What is Claude Opus 4.6 and how is it different from earlier Claude versions?

Claude Opus 4.6 is Anthropic’s most advanced large language model, designed for complex coding, research, and enterprise workflows. Compared to earlier versions, it offers a much longer context window (200K tokens by default, with up to 1M in beta), adaptive thinking levels, and better support for long‑running, agent‑style workflows. It also scores higher on independent coding and reasoning benchmarks than previous Claude models. For production use, it’s currently the best Claude option for serious business applications.

What can I actually do with the 1 million token context in Claude Opus 4.6?

The 1M token context lets you load entire codebases, large legal bundles, or big research datasets into a single prompt. This is ideal for things like full‑system code reviews, compliance audits, document analysis, and cross‑document summarisation. Australian businesses often pair this with custom automation so the model can scan and annotate large archives in one workflow. Pricing is standard up to 200K tokens, with a higher per‑million token rate for the long‑context tier.
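Before loading a bundle into a single prompt, it can help to estimate which tier it falls into. The sketch below is a rough planning aid, not a tokenizer: the 4-characters-per-token ratio is a common rule of thumb and an assumption here, the function names are hypothetical, and only the 200K and 1M limits come from this article. It also ignores output tokens and prompt overhead.

```python
import math
from typing import Iterable

STANDARD_LIMIT = 200_000        # tokens, standard tier per the article
LONG_CONTEXT_LIMIT = 1_000_000  # tokens, long-context tier per the article


def estimate_tokens(text: str, chars_per_token: float = 4.0) -> int:
    """Very rough token estimate from character count (assumed ratio)."""
    return math.ceil(len(text) / chars_per_token)


def context_tier(documents: Iterable[str], chars_per_token: float = 4.0) -> str:
    """Classify a document bundle as 'standard', 'long-context', or 'too-large'."""
    total = sum(estimate_tokens(doc, chars_per_token) for doc in documents)
    if total <= STANDARD_LIMIT:
        return "standard"
    if total <= LONG_CONTEXT_LIMIT:
        return "long-context"
    return "too-large"
```

For real budgeting, replace the character heuristic with token counts reported by your provider, since actual ratios vary with language and content type.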

What does adaptive thinking mean in Claude Opus 4.6?

Adaptive thinking lets you control how much “effort” the model spends on a task, using levels like low, medium, high (default), and max. Lower effort is faster and cheaper, good for simple Q&A or support chats, while higher effort produces deeper reasoning, more robust planning, and better handling of tricky bugs or edge cases. This means you can tune speed, quality, and cost without switching models. LYFE AI can help you wire these effort levels into your workflows based on business rules.
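One way to tie effort levels to business rules, as described above, is a simple lookup from task type to effort. The sketch below is illustrative only: the task categories, the `latency_sensitive` flag, and the `choose_effort` helper are hypothetical, and only the four level names and the high default come from this article. It makes no API call; how the chosen level is passed to a model depends on your client library.

```python
EFFORT_LEVELS = ("low", "medium", "high", "max")  # ordered from cheapest to deepest
DEFAULT_EFFORT = "high"  # the article describes high as the default

# Hypothetical business rules mapping task types to effort levels.
TASK_EFFORT = {
    "support_chat": "low",
    "simple_qa": "low",
    "summarisation": "medium",
    "code_review": "high",
    "hard_bug": "max",
    "long_range_planning": "max",
}


def choose_effort(task_type: str, latency_sensitive: bool = False) -> str:
    """Pick an effort level for a task, stepping down one level when latency matters."""
    effort = TASK_EFFORT.get(task_type, DEFAULT_EFFORT)
    if latency_sensitive:
        index = EFFORT_LEVELS.index(effort)
        effort = EFFORT_LEVELS[max(index - 1, 0)]  # never go below "low"
    return effort
```

Keeping the rules in one table makes it straightforward to adjust them after benchmarking effort levels on your own workloads.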

Is Claude Opus 4.6 good for Australian businesses and local compliance needs?

Yes, Claude Opus 4.6 is well‑suited to Australian businesses that need privacy‑sensitive and compliance‑aware AI workflows. Its long context is particularly useful for handling local legal frameworks, internal policies, and large document sets in one go. When used via LYFE AI’s secure Australian AI assistant, deployments can be tailored to Australian data residency, governance, and sector‑specific regulations. This makes it a strong option for finance, healthcare, government, and professional services.

How does Claude Opus 4.6 compare to other frontier models like Codex 5.3?

Independent benchmarks show Claude Opus 4.6 briefly reached #1 on Terminal‑Bench 2.0 for coding and leads on Humanity’s Last Exam for reasoning. Reviews indicate it clearly outperforms earlier Claude versions and strongly challenges other top coding‑focused models such as Codex 5.3. In practice, users see faster debugging in unfamiliar codebases and stronger analytical performance on complex tasks. The choice between models often comes down to your stack, data requirements, and integration needs, which LYFE AI can help evaluate.

How can my company use Claude Opus 4.6 through LYFE AI?

You can access Claude Opus 4.6 via LYFE AI’s secure AI assistant platform or through bespoke AI implementation projects. LYFE AI designs and builds workflows such as document review pipelines, coding assistants, research copilots, and internal knowledge tools that sit on top of Opus 4.6. They also handle integration with your existing systems, access controls, monitoring, and optimisation of effort levels and context usage. This helps you get real business value without needing an in‑house AI team.

What are the main use cases for Claude Opus 4.6 in a business setting?

Common use cases include advanced code assistance, debugging across large or legacy codebases, compliance and audit reviews, and long‑form content or report generation. It’s also strong for research support, where you need to ingest and reason over large volumes of documents at once. With its agent‑focused design, it can power multi‑step workflows like data extraction, classification, and summarisation in one pipeline. LYFE AI helps tailor these use cases to your specific industry and data landscape.

How does pricing work for Claude Opus 4.6 long‑context requests?

Standard Opus 4.6 usage up to 200K tokens is billed at the base per‑million token rate. When you use long‑context inputs beyond 200K tokens (up to 1M in supported configurations), those requests move into a higher‑priced tier at roughly double the per‑million token cost. This structure allows teams to reserve 1M‑token context for heavy audits, codebase ingestion, or major reviews while keeping everyday requests cheaper. LYFE AI can help design prompts and workflows to keep token usage cost‑effective.
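The tiering described above can be turned into a quick estimate. This is a sketch under stated assumptions: the whole request is billed at the higher rate once the input exceeds 200K tokens (as the wording "those requests move into a higher-priced tier" suggests), the multiplier of 2.0 stands in for "roughly double", only input tokens are counted, and `base_rate_per_mtok` is a placeholder you supply rather than a real price.

```python
LONG_CONTEXT_THRESHOLD = 200_000  # tokens, from the article


def estimate_input_cost(
    input_tokens: int,
    base_rate_per_mtok: float,
    long_context_multiplier: float = 2.0,  # assumption: "roughly double"
) -> float:
    """Estimate input cost for one request under the two-tier scheme."""
    if input_tokens < 0:
        raise ValueError("input_tokens must be non-negative")
    rate = base_rate_per_mtok
    if input_tokens > LONG_CONTEXT_THRESHOLD:
        # Assumption: the higher rate applies to the entire request.
        rate *= long_context_multiplier
    return input_tokens / 1_000_000 * rate
```

With a placeholder base rate of 10 currency units per million tokens, a 500K-token request comes to 10 units instead of 5, which is why reserving long-context requests for heavy audits and reviews keeps everyday usage cheaper.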

What does agent‑focused design in Claude Opus 4.6 actually mean for my workflows?

Agent‑focused design means Opus 4.6 is better at planning, breaking tasks into steps, and maintaining coherence over long‑running workflows. It can be used as part of an “agent team,” where different instances handle specialised subtasks like data gathering, analysis, and summarisation. This makes it more reliable for durable workflows such as end‑to‑end audits, multi‑phase research, or complex ticket resolution. LYFE AI can orchestrate these agents around your business processes and systems.
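The "agent team" pattern described above, where separate instances handle specialised subtasks, can be sketched with ordinary thread-based parallelism. This is a generic illustration, not Anthropic's Agent Teams feature: `run_agent_team` and `stub_worker` are hypothetical, and the worker is a stub where a real system would call a model.

```python
from concurrent.futures import ThreadPoolExecutor
from typing import Callable, Dict


def run_agent_team(
    subtasks: Dict[str, str],
    worker: Callable[[str, str], str],
    max_workers: int = 4,
) -> Dict[str, str]:
    """Run one worker per (role, task) pair in parallel and collect results by role."""
    with ThreadPoolExecutor(max_workers=max_workers) as pool:
        futures = {
            role: pool.submit(worker, role, task) for role, task in subtasks.items()
        }
        # result() blocks until each worker finishes and re-raises its exception, if any.
        return {role: future.result() for role, future in futures.items()}


def stub_worker(role: str, task: str) -> str:
    """Stand-in for a model call (assumption): echoes what the agent would do."""
    return f"{role} finished: {task}"


if __name__ == "__main__":
    results = run_agent_team(
        {
            "gatherer": "collect audit documents",
            "analyst": "flag policy gaps",
            "writer": "draft the summary",
        },
        stub_worker,
    )
    for role, output in results.items():
        print(role, "->", output)
```

This version treats subtasks as independent. Workflows where one agent's output feeds another need an explicit ordering step on top.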

Can LYFE AI build custom AI models or automations on top of Claude Opus 4.6?

Yes, LYFE AI offers custom automation and AI model services that layer on top of Claude Opus 4.6. They can build specialised tools for audits, data‑heavy reviews, legacy system analysis, or internal knowledge search using Opus 4.6 as the core reasoning engine. This includes prompt engineering, retrieval‑augmented generation (RAG), workflow orchestration, and integration with your databases and apps. The result is a tailored solution rather than a generic chatbot.