Introduction: Why Token Enterprise Pricing Matters Now

Table of Contents

- Introduction: Why Token Enterprise Pricing Matters Now

- Token Costs Basics in Enterprise AI Platforms

- Per-Request Token Pricing vs Enterprise Scale

- Token Cost vs Total AI TCO and Budget Patterns

- Hybrid and Outcome-Based Token Pricing to Match Business Value

- Transparent Platforms, Spend Controls, and Managing Bill Shock

- Estimating Monthly Token Usage and Forecasting AI Workloads

- Practical Tips for Designing Token Enterprise Pricing Models

- Conclusion and Next Steps

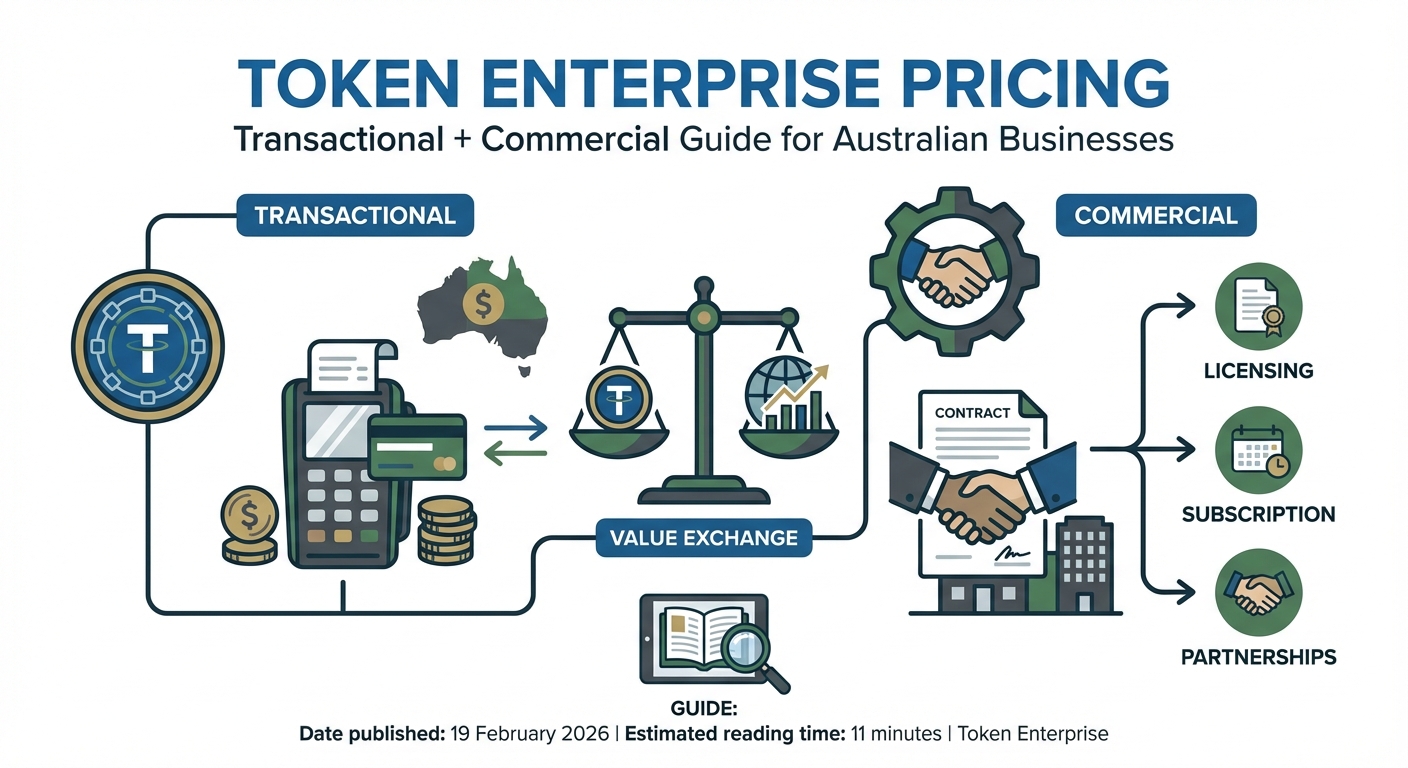

Token enterprise pricing for transactional and commercial AI is fast becoming a board-level topic in Australia. As more teams plug large language models into customer service, operations, and knowledge work, the bill for “tokens” can creep up quietly and then spike.

Most leaders did not grow up thinking in tokens. They think in headcount, projects, and service fees, not in thousands of tiny chunks of text. Yet in modern AI, tokens are the core billing unit and the main lever for usage-based pricing. Get them wrong, and either your margins vanish or adoption stalls.

This guide breaks down token costs in plain language, shows how pricing changes at scale, and explores hybrid and outcome-based models that line up better with business value. You will also learn how to forecast usage, manage heavy users, and avoid nasty bill shock as you roll out AI across your organisation. For many Australian teams, a secure, production-ready starting point is a domestically hosted AI assistant that already bakes in sensible token policies and governance.

Token Costs Basics in Enterprise AI Platforms

To design good token enterprise pricing, you first need to understand what a token actually is. In most modern AI models, a token is a small slice of text: roughly four characters, or about three-quarters of a word, on average in English. That means 1,000 tokens is around 750 English words, and 1 million tokens is roughly 750,000 words of combined input and output. Those conversions matter because major providers typically quote API prices per 1,000 or per 1 million tokens.
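The rule of thumb above can be turned into a quick estimator for finance conversations. This is a minimal sketch assuming the averages quoted here (about 4 characters and about 0.75 words per token in English); the function names are hypothetical, and real counts depend on each model's tokenizer (OpenAI's `tiktoken` library, for example, gives exact counts for its own models).

```python
# Rough English-language averages from the text; real tokenizers vary by model.
CHARS_PER_TOKEN = 4.0
WORDS_PER_TOKEN = 0.75


def estimate_tokens_from_text(text: str) -> int:
    """Heuristic token estimate from character length (not a real tokenizer)."""
    return max(1, round(len(text) / CHARS_PER_TOKEN)) if text else 0


def tokens_to_words(tokens: int) -> float:
    """Convert a token count into an approximate English word count."""
    return tokens * WORDS_PER_TOKEN


if __name__ == "__main__":
    print(tokens_to_words(1_000))      # 750.0
    print(tokens_to_words(1_000_000))  # 750000.0
    print(estimate_tokens_from_text("Please summarise this support ticket."))
```

For budgeting, the heuristic is usually close enough; for invoices, rely on the token counts your provider reports.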

Enterprise AI platforms bill on both sides of the interaction. Tokens in your prompt, system instructions, and any uploaded content are counted as input tokens. Tokens in the model’s reply are output tokens. Vendors usually set different prices for each, typically with input cheaper than output because generation is more compute-heavy. Token cost calculators make these splits easy to quantify when you are modelling per-request economics, and internal teams often reconcile those estimates against actual usage reports to keep budgets on track.

Think of tokens like mobile data. Every message you send and every response you receive eats into the quota. Instead of megabytes, you are counting text units, but the logic is the same: more use, more spend. A typical enterprise API bill will show volumes such as “X million input tokens” and “Y million output tokens” for each model and month.

This structure also means your prompt design choices matter financially. Long system prompts, extensive context windows, and very verbose outputs all inflate token counts. Short, well-structured prompts with focused answers use fewer tokens and lower costs. For a commercial pricing strategy, you need at least a rough feel for average tokens per interaction before you can price users, seats, or outcomes with confidence – precisely the sort of analysis that underpins modern LLM pricing guides.

Per-Request Token Pricing vs Enterprise Scale

On a single request, token prices look almost trivial. Imagine a prompt of 500 tokens and a model response of 200 tokens. Suppose your AI provider charges US$0.01 per 1,000 input tokens and US$0.03 per 1,000 output tokens. The input cost would be 500 × 0.01 / 1,000 = US$0.005. The output cost would be 200 × 0.03 / 1,000 = US$0.006. Total: US$0.011 for that interaction, mirroring the worked examples you see in token-based pricing explainers.
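The same arithmetic fits in a small Python function. It uses `Decimal` so that fractions of a cent are not distorted by floating-point rounding. The rates are the illustrative figures from this example, not any vendor's actual price list, and `request_cost` is a hypothetical helper name.

```python
from decimal import Decimal

# Illustrative rates from the worked example (US$ per 1,000 tokens), not real vendor prices.
INPUT_RATE_PER_1K = Decimal("0.01")
OUTPUT_RATE_PER_1K = Decimal("0.03")


def request_cost(input_tokens: int, output_tokens: int,
                 input_rate_per_1k: Decimal = INPUT_RATE_PER_1K,
                 output_rate_per_1k: Decimal = OUTPUT_RATE_PER_1K) -> Decimal:
    """Cost of one request; input and output tokens are billed at separate rates."""
    input_cost = input_tokens * input_rate_per_1k / 1000
    output_cost = output_tokens * output_rate_per_1k / 1000
    return input_cost + output_cost


if __name__ == "__main__":
    print(request_cost(500, 200))  # 0.011
```

Keeping the two rates as separate parameters makes it easy to rerun the calculation when a vendor changes only its output price.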

Less than two cents per request feels like a rounding error. Pilots lull teams into a false sense of security; with only a handful of testers, you can run for weeks and barely notice the spend, especially if you are using free credits or a capped sandbox.

Things change when you scale. Now imagine 10,000 daily users, each making just one such request per day. Multiply 10,000 × US$0.011, and you are at about US$110 per day, or around US$3,300 per month. Still not huge, but this is for a simple interaction pattern, with modest prompts, on one model. Add more queries per user, more complex workflows, or higher-priced frontier models, and the curve bends upward quickly. This is exactly why many organisations lean on specialist token-pricing guidance before committing to enterprise-wide SLAs.
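To see how quickly the curve bends, the sketch below reuses the US$0.011 per-request figure and varies only the number of requests per user. It assumes a flat 30-day month and identical requests, both simplifications; `monthly_cost` is a hypothetical helper.

```python
from decimal import Decimal

COST_PER_REQUEST = Decimal("0.011")  # US$, from the single-request worked example
DAYS_PER_MONTH = 30                   # simplifying assumption: flat 30-day month


def monthly_cost(daily_users: int, requests_per_user_per_day: int,
                 cost_per_request: Decimal = COST_PER_REQUEST,
                 days: int = DAYS_PER_MONTH) -> Decimal:
    """Monthly spend if every user sends the same request pattern each day."""
    total = daily_users * requests_per_user_per_day * cost_per_request * days
    return total.quantize(Decimal("0.01"))  # round to cents for reporting


if __name__ == "__main__":
    for requests in (1, 5, 20):
        print(f"{requests:>2} request(s)/user/day -> US${monthly_cost(10_000, requests)}")
```

With one request per user per day this reproduces the roughly US$3,300 per month above; at 20 requests per user the same model costs about US$66,000 per month.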

At full enterprise rollout – think tens of thousands of employees and multiple customer touchpoints – you are no longer looking at a side expense. Tokens become a material line item in your cloud and SaaS budgets. That is why transactional pricing that looks generous at small scale can become unprofitable or scary once heavy usage kicks in. Smart teams plan their commercial structures with the end state in mind, often leaning on structured model selection work such as Lyfe AI’s routing and cost guides for GPT‑5.2 tiers.

Token Cost vs Total AI TCO and Budget Patterns

It is easy to obsess over token prices because they are the most visible meter ticking in real time. But for Australian enterprises, token spend is often only a single-digit percentage of the total cost of ownership for an AI initiative, much like the raw compute line in a cloud project. Your biggest expenses sit elsewhere, a pattern echoed in broader AI pricing model research.

Staff costs dominate. Internal engineering, product, and data teams absorb a large share of the budget as they build integrations, tune prompts, and manage ongoing improvements. Then you have integration work into internal systems, security controls, single sign-on, and data governance. None of that is free, and all of it takes time, especially in larger or regulated organisations.

There is also change management and training. Helping thousands of staff use an AI assistant correctly, updating process manuals, and adjusting performance measures can easily outweigh token spend in the first year. Add infrastructure such as vector databases, orchestration layers, and observability tools to monitor quality and latency, and your overall picture gets even more complex.

As adoption expands, budget patterns follow a rough curve. In pilots and proofs of concept, token usage costs are almost negligible. A single department rollout – say 1,000 Australian users sending 10 queries a day at roughly 1,000 tokens per interaction on a relatively cheap model – would consume around 10 million tokens per day. At a blended cost of US$2 per million tokens, that is about US$20 per day, or roughly US$600 per month, still modest but finally visible.
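The department-level figures can be reproduced with two small helpers. A single blended rate hides the input/output split, so treat this as a sketch for order-of-magnitude budgeting; the US$2 per million rate is the example's own assumption, and the 30-day month is a simplification.

```python
from decimal import Decimal


def daily_tokens(users: int, interactions_per_user: int, tokens_per_interaction: int) -> int:
    """Total tokens (input plus output) consumed per day."""
    return users * interactions_per_user * tokens_per_interaction


def cost_at_blended_rate(tokens: int, usd_per_million: Decimal) -> Decimal:
    """Cost using one blended price per million tokens (input and output combined)."""
    return Decimal(tokens) * usd_per_million / 1_000_000


if __name__ == "__main__":
    tokens = daily_tokens(users=1_000, interactions_per_user=10, tokens_per_interaction=1_000)
    per_day = cost_at_blended_rate(tokens, Decimal("2"))
    print(tokens)        # 10000000
    print(per_day * 30)  # 600
```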

The big jump arrives with a whole-of-business rollout using top-tier frontier models and no routing or optimisation. Picture 20,000 staff members, each doing 20 interactions per day at 2,000 tokens each, on a higher-priced model. That is 800 million tokens per day, or roughly 24 billion tokens over a 30-day month, and raw usage at that level can drive serious, ongoing operating expenditure. If you are reselling the AI capability to customers, this directly squeezes your gross margins unless your pricing is carefully tuned, which is why many organisations start exploring custom model routing and automation setups to tame those curves.
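A similar calculation makes the whole-of-business jump concrete and shows why routing matters. The example does not give a price for the frontier model, so the US$10 and US$2 per million blended rates below are purely hypothetical, as is the 80% share of traffic assumed to be routable to the cheaper model.

```python
from decimal import Decimal

FRONTIER_PER_MILLION = Decimal("10")  # hypothetical frontier-model blended rate (US$)
SMALL_PER_MILLION = Decimal("2")      # hypothetical cheaper-model blended rate (US$)
DAYS_PER_MONTH = 30                    # simplifying assumption


def monthly_tokens(staff: int, interactions_per_day: int, tokens_each: int,
                   days: int = DAYS_PER_MONTH) -> int:
    """Total tokens per month for a uniform usage pattern."""
    return staff * interactions_per_day * tokens_each * days


def monthly_cost(tokens: int, routed_share: Decimal) -> Decimal:
    """Cost when `routed_share` of tokens goes to the small model, the rest to the frontier model."""
    blended = (1 - routed_share) * FRONTIER_PER_MILLION + routed_share * SMALL_PER_MILLION
    return Decimal(tokens) * blended / 1_000_000


if __name__ == "__main__":
    tokens = monthly_tokens(staff=20_000, interactions_per_day=20, tokens_each=2_000)
    print(f"{tokens:,} tokens per month")  # 24,000,000,000 tokens per month
    print("no routing: US$", monthly_cost(tokens, Decimal("0")))
    print("80% routed: US$", monthly_cost(tokens, Decimal("0.8")))
```

Under these assumed rates, routing most traffic to the cheaper model cuts the monthly bill from about US$240,000 to about US$86,400.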

Finally, compliance and security reviews under the Australian Privacy Act and sector rules (for example, in finance or health) can stretch timelines and budgets. All of this means that while token pricing needs to be thought through, it should sit inside a broader commercial view focused on turning usage into clear business value, the sort of question a partner like Lyfe AI, which focuses on secure, value-aligned AI deployments, is set up to help answer.

Hybrid and Outcome-Based Token Pricing to Match Business Value

Pure pay-per-token pricing is simple but often misaligned with how customers think about value. Many enterprises in Australia do not want to talk in millions of tokens; they want to talk in resolved tickets, completed workflows, or uplift in customer satisfaction. That is where hybrid and outcome-based models are starting to play a bigger role.

A common counterpoint is that dismissing technical metrics like tokens entirely oversimplifies how sophisticated Australian enterprises actually buy and govern AI. Business leaders care about outcomes such as resolved tickets and higher CSAT, but procurement, technology, and risk teams still need transparent, auditable usage measures to manage spend, capacity planning, and compliance. In practice, many larger organisations are moving toward a blended conversation: value is framed in business terms but underpinned by clear technical usage metrics that make it possible to benchmark vendors, optimise workloads, and avoid bill shock. “Millions of tokens” may not be the language of the boardroom, but it remains essential vocabulary for the teams responsible for safely scaling AI in production.

A common pattern is a fixed base subscription that buys you platform access or a minimum capacity, plus variable components tied either to usage or to an outcome metric. For example, a customer service AI could be priced as a monthly platform fee plus a charge per resolved conversation, with an agreed quality bar. Tokens remain the internal meter, but they are abstracted away from the buyer – an approach that fits neatly with token-based revenue-sharing and credit models described in broader SaaS pricing literature.
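A minimal sketch of that hybrid invoice follows. The platform fee, price per resolution, 0 to 1 quality score, and 0.8 quality bar are all hypothetical placeholders for whatever the contract actually defines; the point is that tokens are metered internally while the buyer only sees the fee and qualifying outcomes.

```python
from __future__ import annotations

from dataclasses import dataclass
from decimal import Decimal

PLATFORM_FEE = Decimal("5000.00")       # hypothetical monthly base fee (US$)
PRICE_PER_RESOLUTION = Decimal("1.50")  # hypothetical charge per qualifying resolution
QUALITY_BAR = 0.8                       # hypothetical agreed minimum quality score (0-1)


@dataclass
class Conversation:
    resolved: bool
    quality_score: float  # assumed metric, e.g. from QA sampling
    tokens: int           # internal meter only, never shown on the invoice


def is_billable(conv: Conversation) -> bool:
    return conv.resolved and conv.quality_score >= QUALITY_BAR


def monthly_invoice(conversations: list[Conversation]) -> Decimal:
    """Buyer-facing charge: base fee plus resolutions that meet the quality bar."""
    billable = sum(1 for c in conversations if is_billable(c))
    return PLATFORM_FEE + billable * PRICE_PER_RESOLUTION


def internal_token_usage(conversations: list[Conversation]) -> int:
    """Vendor-side view: total tokens consumed, billable or not."""
    return sum(c.tokens for c in conversations)


if __name__ == "__main__":
    month = [
        Conversation(resolved=True, quality_score=0.92, tokens=1_200),
        Conversation(resolved=True, quality_score=0.55, tokens=3_400),  # below the bar
        Conversation(resolved=False, quality_score=0.90, tokens=800),   # not resolved
    ]
    print(monthly_invoice(month))       # 5001.50
    print(internal_token_usage(month))  # 5400
```

Unbillable conversations still consume tokens, which is the margin risk the vendor takes on in this model.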

Some vendors peg pricing to specific business KPIs such as task completion rate, average handling time, or net promoter score improvements. In effect, the vendor and customer share both upside and risk. The customer gets more predictability on baseline spend, while the vendor has strong incentives to keep improving accuracy and reliability rather than just driving more token volume.

The trade-off is complexity. Outcome-based models require sharper service level agreements, clearer definitions of what counts as a “completed” event, and better measurement frameworks. You need to track not just calls to the model, but whether those calls delivered the promised result. For Australian organisations using AI in regulated contexts, that measurement layer is also essential for audit and assurance, and is often paired with specialised governance and observability services so commercial commitments match technical reality.

Transparent Platforms, Spend Controls, and Managing Bill Shock

As token-based usage grows, one nasty surprise can derail executive support: bill shock. Often it is not the average user who causes trouble but a small cluster of heavy users or hungry integrations that suddenly drive huge token volumes. A few power users experimenting with long prompts or batch jobs can shift the spend curve for the whole month.

To avoid this, enterprises should favour AI platforms that provide fine-grained visibility into token consumption. Helpful features include real-time dashboards, model-level and user-level breakdowns, and clear export options to feed finance and BI tools. You also want spend caps, rate limits, and alerting thresholds that can be set by team, environment, or use case rather than just at the top account level – capabilities that are built into many secure Australian AI assistant platforms from day one.
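The sketch below shows the shape of per-team controls: a hard cap that refuses requests and a soft threshold that raises an alert. The class names, team names, and thresholds are hypothetical, rate limits are not modelled, and in practice these controls usually live in the AI platform or API gateway rather than in application code.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TeamBudget:
    monthly_token_cap: int       # hard cap: requests beyond this are refused
    alert_fraction: float = 0.8  # soft threshold as a fraction of the cap
    used: int = 0


class SpendGuard:
    def __init__(self, budgets: dict[str, TeamBudget]) -> None:
        self.budgets = budgets

    def consume(self, team: str, tokens: int) -> str:
        """Record usage for a team and return 'ok', 'alert', or 'blocked'."""
        budget = self.budgets[team]
        if budget.used + tokens > budget.monthly_token_cap:
            return "blocked"  # refused requests are not added to usage
        budget.used += tokens
        if budget.used >= budget.alert_fraction * budget.monthly_token_cap:
            return "alert"
        return "ok"


if __name__ == "__main__":
    guard = SpendGuard({
        "contact_centre": TeamBudget(monthly_token_cap=50_000_000),
        "data_science": TeamBudget(monthly_token_cap=5_000_000, alert_fraction=0.5),
    })
    print(guard.consume("data_science", 2_000_000))  # ok
    print(guard.consume("data_science", 1_000_000))  # alert (3M >= 2.5M)
    print(guard.consume("data_science", 3_000_000))  # blocked (would exceed 5M)
```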

Another useful pattern is configurable packages or fixed bundles tuned to different business units. A contact centre may need a high-volume, low-cost bundle with strict per-agent caps, while a small data science team might run on a more flexible, higher-priced frontier model bundle. Fixed packages give budget owners more certainty and make it easier to recharge costs internally.

Finally, smart pricing design for commercial offerings should bake in heavy-user behaviour. Tiered pricing, overage charges that reflect actual costs, and fair-use policies can all help. Aligning usage allowances with measurable business value, not just raw technical consumption, keeps conversations positive: instead of “you used too many tokens,” you can say “we helped you resolve more cases than planned; here is how the economics shake out.” Teams that operate this way typically rely on clear, transparent terms and conditions to keep those conversations predictable.
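One way to combine an included allowance with tiered overage is graduated pricing, where each rate applies only to the tokens that fall inside its tier. All fees, tier bounds, and rates below are hypothetical.

```python
from decimal import Decimal

BASE_FEE = Decimal("500.00")  # hypothetical monthly platform fee (US$)

# Hypothetical graduated tiers: (upper bound in tokens, US$ per million tokens).
# The first tier is the allowance included in the base fee; None means unbounded.
TIERS = [
    (10_000_000, Decimal("0")),
    (50_000_000, Decimal("3.00")),
    (None, Decimal("2.50")),
]


def monthly_charge(tokens: int) -> Decimal:
    """Base fee plus graduated overage across the tiers."""
    total = BASE_FEE
    lower = 0
    for upper, price_per_million in TIERS:
        top = tokens if upper is None else min(tokens, upper)
        if top > lower:
            total += Decimal(top - lower) * price_per_million / 1_000_000
        if upper is None or tokens <= upper:
            break
        lower = upper
    return total


if __name__ == "__main__":
    for usage in (8_000_000, 30_000_000, 60_000_000):
        print(f"{usage:,} tokens -> US${monthly_charge(usage)}")
```

Under these assumed numbers, 8 million tokens costs just the US$500 base fee, 30 million costs US$560, and 60 million costs US$645. The lower top-tier rate rewards predictable volume while overage still tracks cost.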

Estimating Monthly Token Usage and Forecasting AI Workloads

Building a robust commercial model for token enterprise pricing starts with a decent forecast. You do not need perfect precision, but you do need a grounded estimate so your margins and budgets are not guesswork. Most organisations follow a three-step approach to get there.

First, measure average tokens per interaction in your pilot. That means counting both prompt and response tokens. Break it down by use case if you can: customer chat, internal search, document drafting, and so on. Even a sample of a few hundred interactions per use case gives you a decent benchmark – and you can validate these numbers against tools like AI token cost calculators before locking in pricing.
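A minimal sketch of that first step: group pilot log rows by use case and average input and output tokens separately, since they are usually priced differently. The row layout and use-case names are hypothetical, and four rows are only for illustration; a real benchmark would use a few hundred per use case.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical pilot log rows: (use_case, prompt_tokens, response_tokens).
PILOT_LOG = [
    ("customer_chat", 420, 180),
    ("customer_chat", 380, 220),
    ("internal_search", 900, 150),
    ("document_drafting", 600, 1_400),
]


def summarise_by_use_case(rows):
    """Average input and output tokens per interaction, grouped by use case."""
    grouped = defaultdict(lambda: {"input": [], "output": []})
    for use_case, prompt_tokens, response_tokens in rows:
        grouped[use_case]["input"].append(prompt_tokens)
        grouped[use_case]["output"].append(response_tokens)
    return {
        use_case: {
            "samples": len(v["input"]),
            "avg_input": mean(v["input"]),
            "avg_output": mean(v["output"]),
        }
        for use_case, v in grouped.items()
    }


if __name__ == "__main__":
    for use_case, stats in summarise_by_use_case(PILOT_LOG).items():
        print(use_case, stats)
```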

Second, multiply that by user volume and interaction frequency over a realistic period. If an internal assistant averages 800 tokens per interaction, with 5,000 monthly active users doing 15 interactions per day, you can map out daily, weekly, and monthly token needs. Repeat for each major model and workflow, and factor in seasonality, such as end-of-financial-year spikes.
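The second step for the internal-assistant example can be sketched as below. It assumes every monthly active user is active every day, which gives an upper bound; the 30-day period and the 1.2 seasonal multiplier (for example, an end-of-financial-year spike in June) are hypothetical inputs.

```python
from __future__ import annotations


def forecast_tokens(tokens_per_interaction: int, active_users: int,
                    interactions_per_day: int, days: int = 30,
                    seasonal_multiplier: float = 1.0) -> dict[str, int]:
    """Daily, weekly and whole-period token forecasts for one use case."""
    daily = tokens_per_interaction * active_users * interactions_per_day
    return {
        "daily": daily,
        "weekly": daily * 7,
        "period": round(daily * days * seasonal_multiplier),
    }


if __name__ == "__main__":
    normal = forecast_tokens(800, 5_000, 15)
    june = forecast_tokens(800, 5_000, 15, seasonal_multiplier=1.2)  # hypothetical EOFY uplift
    print(normal)  # {'daily': 60000000, 'weekly': 420000000, 'period': 1800000000}
    print(june["period"])
```

Running the same function per model and workflow, then summing, gives the portfolio-level forecast.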

Third, consider the impact of model choice and extra features. Switching from a mid-tier model to a frontier model often changes both price per token and behaviour: people may submit longer prompts or expect richer outputs. Embeddings, routing logic, and tools like code execution can also add silent token overhead. Many Australian teams now run controlled pilots tied to outcome metrics (for example, reduced resolution time in support) to refine these forecasts before signing large, multi-year contracts, often comparing options like OpenAI O4‑mini vs O3‑mini or GPT‑5.2 vs Gemini 3 Pro as part of that exercise.

A key metric to watch is gross margin per token or per business event. If you sell an AI-powered feature, ask: after paying for tokens and related infra, how much margin is left per resolved case or per generated document? Track that monthly. It will tell you early if your commercial model is sustainable as usage ramps, and it will also surface when you need more advanced professional optimisation services around routing, caching, or fine-tuning.
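A minimal sketch of that monthly check, assuming you can attribute token and related infrastructure costs to a single priced business event. The price, volume, and cost figures are hypothetical.

```python
from __future__ import annotations

from decimal import Decimal


def unit_economics(price_per_event: Decimal, events: int,
                   token_cost: Decimal, other_infra_cost: Decimal) -> tuple[Decimal, Decimal]:
    """Return (gross margin per business event, gross margin as a fraction of revenue)."""
    if events <= 0:
        raise ValueError("need at least one billable event")
    revenue = price_per_event * events
    gross_margin = revenue - (token_cost + other_infra_cost)
    return gross_margin / events, gross_margin / revenue


if __name__ == "__main__":
    # Hypothetical month: 10,000 resolved cases sold at US$2.00 each.
    per_event, margin_fraction = unit_economics(Decimal("2.00"), 10_000,
                                                token_cost=Decimal("3300"),
                                                other_infra_cost=Decimal("1200"))
    print(per_event, margin_fraction)  # 1.55 0.775
```

Tracking both numbers matters: margin per event can hold steady while the margin fraction erodes if prices are cut to win volume.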

Practical Tips for Designing Token Enterprise Pricing Models

Pulling this together into a practical plan is where most teams get stuck. The good news is you can take a few clear steps to design transactional and commercial token pricing that actually works in the real world and not just in a spreadsheet.

Start by mapping use cases to value. For each major AI feature, ask what business outcome it drives: faster resolution, higher satisfaction, reduced manual effort, or something else. Use that to decide whether a simple usage-based model is enough, or whether you should move toward a hybrid or outcome-based structure that charges per resolved ticket, per verified draft, or per completed workflow – with the underlying token mechanics handled by specialist AI service providers rather than every product team reinventing the wheel.

Next, set internal guardrails around model choice and prompt design. Encourage teams to use smaller, cheaper models where possible, and reserve frontier models for tasks that truly need them. Provide prompt templates to avoid bloated instructions that waste tokens without adding value. Documentation and light training here can have a bigger impact on your token bill than tiny price negotiations.
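Guardrails like these are often easiest to enforce as a small shared policy. The sketch below defaults to a cheaper tier, escalates only for an approved list of task types, caps output length per tier, and flags oversized prompt templates. Every tier name, task type, and limit is a hypothetical placeholder, not a real model identifier.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class ModelTier:
    name: str               # placeholder label, not a real model identifier
    max_output_tokens: int  # cap on response length for this tier


SMALL = ModelTier(name="small-model", max_output_tokens=500)
FRONTIER = ModelTier(name="frontier-model", max_output_tokens=2_000)

# Hypothetical allowlist: only these task types may use the frontier tier.
FRONTIER_TASKS = {"complex_analysis", "contract_review"}

# Hypothetical token budget for a system prompt plus template.
MAX_PROMPT_TOKENS = 3_000


def choose_tier(task_type: str) -> ModelTier:
    """Default to the cheaper tier; escalate only for approved task types."""
    return FRONTIER if task_type in FRONTIER_TASKS else SMALL


def prompt_within_budget(prompt_tokens: int) -> bool:
    """Flag bloated templates before they reach production."""
    return prompt_tokens <= MAX_PROMPT_TOKENS


if __name__ == "__main__":
    print(choose_tier("faq_answer"))            # ModelTier(name='small-model', max_output_tokens=500)
    print(choose_tier("contract_review").name)  # frontier-model
    print(prompt_within_budget(4_200))          # False
```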

Finally, bake feedback loops into your pricing strategy. Review token usage, unit economics, and business outcomes monthly at first, then quarterly. Be willing to adjust bundles, caps, or model mix as behaviour changes. AI usage is not static; as staff and customers discover new patterns, your pricing and controls should keep evolving alongside them. That mindset – “iterate, do not set and forget” – is what separates sustainable AI businesses from those blindsided by their own success, and it is exactly how mature AI programmes document and refine their operating playbooks over time.

Conclusion and Next Steps

Token enterprise pricing for transactional and commercial AI can look mysterious at first, but under the hood it is just metered consumption, like any other utility. Tokens drive costs, yet they sit inside a much wider picture of engineering effort, integration work, and change across your organisation.

If you understand how tokens behave at scale, learn the common budget patterns, and pair usage with clear business outcomes, you can design pricing that feels fair to both sides and holds up as adoption grows. Take the time now to measure, forecast, and experiment with hybrid models before you commit to a full, whole-of-business rollout.

The next step is straightforward: run a focused pilot with proper tracking, then use that data to shape your commercial model. Get the numbers, test your assumptions, and only then lock in long-term deals. Your future finance team will thank you – and if you prefer not to go it alone, a partner like Lyfe AI can help you align pricing, architecture, and governance from the very first experiment.

Frequently Asked Questions

What is token enterprise pricing and why does it matter for Australian businesses?

Token enterprise pricing is a usage-based way of charging for AI services, where costs are calculated on the number of tokens processed (tiny chunks of text) rather than seats or flat licenses. It matters for Australian businesses because as AI is embedded into customer service, operations, and knowledge work, token usage can scale quickly and create unexpected costs if not modelled correctly. Getting the pricing model right helps protect margins while still encouraging adoption across teams.

How much is 1,000 or 1 million tokens in real world text terms?

In most modern language models, a token is roughly four characters or about three-quarters of a word on average. That means 1,000 tokens is about 750 English words, and 1 million tokens is roughly 750,000 words of combined input and output. Thinking in word counts helps finance and operations teams translate technical token volumes into something closer to document pages, emails, or chat transcripts.

How do token costs work in enterprise AI platforms?

Enterprise AI platforms typically bill for both input and output tokens in each interaction. Input tokens include your prompts, system instructions, and any uploaded content, while output tokens are the words the model generates in response. Vendors often price input and output differently, with output usually more expensive because generation is more compute-intensive. Understanding that both sides are billable is essential for accurate cost forecasting.

How can I estimate our monthly AI token spend in an enterprise setting?

Start by mapping typical use cases, such as customer support chats or document summarisation, and estimate the average tokens per interaction (prompt plus response). Multiply that by the expected number of interactions per day and days per month, then apply your provider’s per‑1,000‑token rates for input and output. For more accuracy, run a pilot with real users for a few weeks and export token usage metrics from your platform to build a proper forecast model.

What are the main differences between transactional and commercial token pricing models?

Transactional pricing charges you directly per token or per request, which is transparent but can be volatile when usage spikes. Commercial or enterprise pricing often wraps token costs into tiers, commitments, or bundled solutions, trading some per‑request visibility for discounts, SLAs, and governance features. Many Australian organisations adopt a hybrid, using transactional pricing in pilots and negotiated commercial terms once usage stabilises.

How can we avoid AI token bill shock when rolling out across our organisation?

Set hard and soft usage limits at the workspace, team, or user level, and monitor tokens with dashboards from day one. Standardise prompt templates, cap maximum response lengths, and restrict very large file processing to approved workflows. Periodically review high‑usage users and use cases, and consider moving predictable workloads to a domestic enterprise provider like LYFE AI that can offer clearer commercial caps and governance.

What is a fair way to pass token costs on to our own customers?

Many businesses avoid line‑item token charges and instead use simple units that map to value, such as per resolved ticket, per document processed, or per active AI user. Behind the scenes, you can map each unit to an expected token range and build in a buffer to protect your margins. For heavy or unpredictable users, consider volume tiers or overage rates that kick in after a certain level of token consumption.
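As a rough illustration, a per-unit price can be derived from the top of a unit's expected token range, a safety buffer, and a target margin. The sketch only covers token costs, and the token range, rate, 20% buffer, and 70% margin are hypothetical.

```python
from decimal import Decimal


def unit_price(max_expected_tokens: int, cost_per_million: Decimal,
               buffer: Decimal, target_margin: Decimal) -> Decimal:
    """Price one customer-facing unit (e.g. a resolved ticket) from its expected token range.

    Uses the top of the expected range, adds a safety buffer, then grosses up
    for the target margin. Only token costs are included.
    """
    if not Decimal("0") <= target_margin < Decimal("1"):
        raise ValueError("target_margin must be in [0, 1)")
    token_cost = Decimal(max_expected_tokens) * cost_per_million / 1_000_000
    buffered_cost = token_cost * (1 + buffer)
    return (buffered_cost / (1 - target_margin)).quantize(Decimal("0.0001"))


if __name__ == "__main__":
    # Hypothetical: a resolved ticket uses 2,000-6,000 tokens at US$5 per million.
    price = unit_price(6_000, Decimal("5"), buffer=Decimal("0.20"), target_margin=Decimal("0.70"))
    print(price)  # 0.1200
```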

How does using a domestically hosted AI assistant like LYFE AI affect token pricing and governance?

A domestically hosted AI assistant can bundle token usage into predictable commercial plans, rather than exposing every API call directly. This often means pre‑defined token policies, logging, and access controls that reduce the risk of runaway usage or data residency issues. For Australian organisations, keeping data and processing onshore also simplifies compliance discussions with legal, risk, and procurement teams.

What is the best way to compare token pricing between different AI providers?

Normalise everything to a common unit, such as cost per 1,000 tokens for a typical workload, and include both input and output rates. Then factor in model quality, latency, data‑hosting location, support, and governance features, because a cheaper raw token rate can be offset by higher re‑work or compliance overhead. Running a small benchmark using your real prompts and documents is the most reliable way to compare effective cost per outcome, not just list pricing.
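A minimal sketch of that normalisation, using two made-up providers and two workload mixes. It computes an effective cost per 1,000 tokens for a given input/output mix; the provider names and rates are hypothetical.

```python
from dataclasses import dataclass
from decimal import Decimal


@dataclass
class ProviderRates:
    name: str
    input_per_1k: Decimal   # US$ per 1,000 input tokens
    output_per_1k: Decimal  # US$ per 1,000 output tokens


def effective_cost_per_1k(rates: ProviderRates, input_tokens: int, output_tokens: int) -> Decimal:
    """Blended cost per 1,000 tokens for a workload with the given input/output mix."""
    total_cost = (input_tokens * rates.input_per_1k + output_tokens * rates.output_per_1k) / 1000
    return total_cost * 1000 / (input_tokens + output_tokens)


if __name__ == "__main__":
    a = ProviderRates("Provider A", Decimal("0.01"), Decimal("0.03"))
    b = ProviderRates("Provider B", Decimal("0.005"), Decimal("0.04"))
    for label, (inp, out) in {"input-heavy": (750, 250), "output-heavy": (250, 750)}.items():
        for p in (a, b):
            print(label, p.name, effective_cost_per_1k(p, inp, out))
```

With these made-up rates, Provider B is cheaper for input-heavy work (US$0.01375 vs US$0.015 per 1,000 tokens) while Provider A is cheaper for output-heavy work (US$0.025 vs US$0.03125), which is why the comparison should use your own workload mix.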

Can we use outcome-based pricing instead of pure token-based pricing for our AI products?

Yes, many organisations abstract raw tokens into outcome-based models like per qualified lead, per resolved support case, or per report generated. Internally, you still track tokens to manage costs and negotiate with your AI platform, but customers see a metric that aligns with business value. LYFE AI’s enterprise setups can help design these hybrids by combining token telemetry with usage analytics and commercial rules.