Table of Contents

- Introduction: why hallucinations matter more than hype

- High accuracy vs low hallucination: breaking the “best model” myth

- Customer support assistants in Australia: choosing the safest model

- Document drafting: emails, policies, contracts and marketing

- Citation reliability and “fake but well‑cited” answers

- The AU regulatory environment: hallucinations as a compliance risk

- Claude, GPT‑5, Gemini and local models: strengths, limits, and AU‑specific RAG

- Practical implementation tips for Australian organisations

- Conclusion and next steps

Introduction: why hallucinations matter more than hype

Everyone wants the “best” AI model. The fastest, smartest, most accurate. But when you’re running an Australian bank, health provider, or even a scrappy startup, one thing matters more than hype: hallucinations. When an AI confidently makes things up, that’s not just annoying. It can drift into legal trouble, customer harm, or a full‑blown PR mess.

The tricky part? The model with the highest benchmark score is not always the one with the lowest hallucination rate. A system can ace test questions and still invent policies, fees, or health advice out of thin air. That means “which model is best?” is the wrong question. The right one is: “which model is safest for this specific job, in the Australian context?”

We’ll unpack how GPT‑5‑class models, Claude 4.x, Gemini 3, and modern local models behave when it comes to hallucinations, with a focus on two high‑impact areas for AU organisations: customer support assistants and document drafting. We’ll also look at citation reliability—the scary “fake but well‑cited” answers—and why the Australian regulatory landscape turns hallucinations into a governance and audit topic, not just a UX bug.

By the end, you’ll have a practical view of which model to use where, how to ground it with AU‑specific retrieval‑augmented generation (RAG), and how to keep regulators, boards, and customers onside as you scale AI across your organisation—with options ranging from a secure Australian AI assistant for everyday tasks through to deeper enterprise integrations.

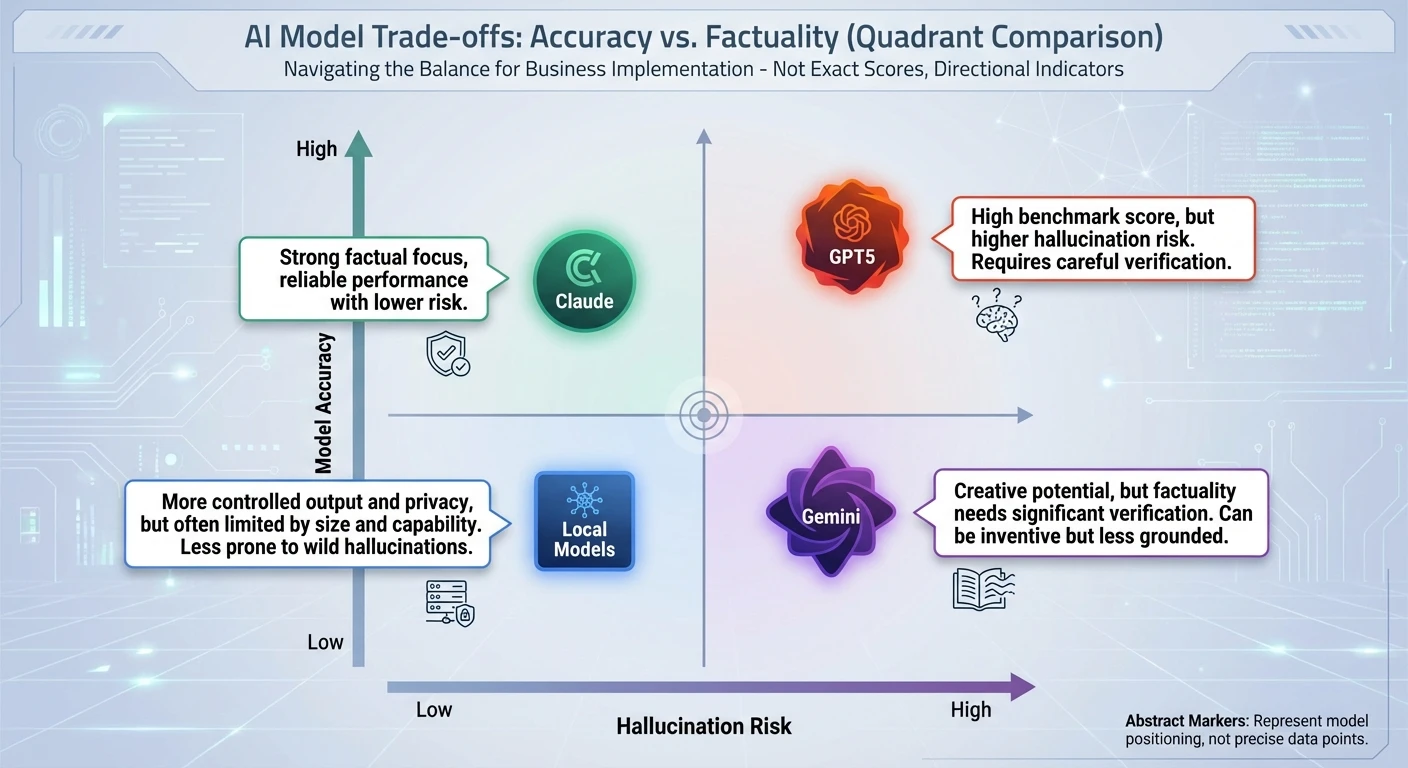

High accuracy vs low hallucination: breaking the “best model” myth

High accuracy does not guarantee low hallucination, and benchmark data shows this clearly. Gemini 3 Pro now scores over 70% measured accuracy on some tough factual tasks like SimpleQA Verified. Impressive on paper. But some Gemini variants still hallucinate more than 87% of the time in certain tests. That’s not a rounding error; it’s a chasm, and it mirrors what broader LLM hallucination research has observed across frontier models.

On the flip side, Anthropic’s Claude 4.5 family actually leads the pack on hallucination control in the same research, with Claude 4.5 Haiku recording about 26% hallucination – one of the lowest rates among all evaluated models. Its accuracy is solid but not always top of the leaderboard. GPT‑5.1 (High) sits roughly in the middle for both accuracy and hallucination rates. So the old assumption – “pick the model with the best accuracy score and you’ll be fine” – just does not hold up.
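To see how one model can post both a high accuracy score and a high hallucination rate, it helps to look at how such metrics can be computed. The sketch below assumes, for illustration, that hallucination rate is measured as the share of wrong answers among all the questions a model did not get right (wrong answers plus refusals), which is one way benchmarks that penalise guessing can define it. The two “models” and their counts are hypothetical, not taken from any published result.

```python
from dataclasses import dataclass


@dataclass
class EvalCounts:
    """Hypothetical outcome counts from a factual QA benchmark run."""
    correct: int
    incorrect: int  # confident but wrong answers
    abstained: int  # "I don't know" or refusals

    @property
    def total(self) -> int:
        return self.correct + self.incorrect + self.abstained

    def accuracy(self) -> float:
        # Share of all questions answered correctly.
        return self.correct / self.total

    def hallucination_rate(self) -> float:
        # Assumed definition: of the questions the model did NOT get right,
        # how often did it guess wrongly instead of abstaining?
        not_correct = self.incorrect + self.abstained
        return self.incorrect / not_correct if not_correct else 0.0


# Two hypothetical models answering the same 1,000 questions.
bold = EvalCounts(correct=700, incorrect=260, abstained=40)
cautious = EvalCounts(correct=600, incorrect=100, abstained=300)

for name, m in [("bold", bold), ("cautious", cautious)]:
    print(f"{name}: accuracy={m.accuracy():.0%}, hallucination={m.hallucination_rate():.0%}")
# bold: accuracy=70%, hallucination=87%
# cautious: accuracy=60%, hallucination=25%
```

Under this assumed definition the “bold” model wins on accuracy yet guesses wrongly on 87% of the questions it misses, while the “cautious” model scores lower but mostly admits when it doesn’t know. That is a simplified version of the accuracy-versus-hallucination gap described above.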

For Australian businesses, this changes the buying decision. Instead of hunting for a single “best” model, you need a portfolio mindset. Pick different models for different use cases, based on their hallucination profile, refusal behaviour, and how they handle uncertainty. A model that declines to answer in grey areas can be more valuable than one that tries its best and improvises the law.

Your evaluation process has to go beyond standard AI benchmarks. Test each model on AU‑specific regulations, local products, and your internal policies. Ask it about your fees, your complaint process, your privacy policy. See where it invents detail. That behaviour, not a global leaderboard, should guide which model you put in front of customers or regulators, and which you embed in more specialised automation and custom AI workflows.

Customer support assistants in Australia: choosing the safest model

Customer support is usually the first place Australian organisations want to deploy generative AI. It’s visible, measurable, and closely tied to cost. But it’s also where hallucinations can bite hardest. A made‑up refund rule is not just annoying; it can become “misleading or deceptive conduct” under Australian Consumer Law if left unchecked.

For external, customer‑facing support – especially in banking, health, and government – low hallucination and conservative refusals matter more than peak creativity. Research data points to Claude 4.5 Haiku and Sonnet as front‑runners here: their hallucination rates are competitive with the leading models, and they tend to be more cautious when they are uncertain. That makes them solid candidates for default support assistants, especially when paired with RAG over vetted Australian knowledge bases and clear human‑in‑the‑loop escalation paths.

If minimising hallucinations is your single top priority, though, the latest Relum study (Dec 2025) is worth noting: Grok leads its benchmark with the lowest hallucination rate, 8% across 10 major models, compared with 35% for ChatGPT and 38% for Gemini in the same evaluation. Haiku and Sonnet remain viable front‑runners for safety‑critical support flows, but in this particular study Grok sets the bar on raw hallucination performance.

GPT‑4o and GPT‑5.x also look attractive for high‑stakes support. Independent testing has found GPT‑4o to be the most reliable option for high‑stakes work overall, especially when combined with tools like search, RAG, and code execution. In practice, that might look like a GPT‑5.

However, some experts argue that it’s premature to crown GPT‑4o the most reliable option for high‑stakes work across the board. Independent evaluations of GPT‑4‑class models in domains like medicine and clinical decision support still highlight non‑trivial reasoning gaps, hallucinations, and failure modes that would be unacceptable without tight human oversight. These studies don’t show GPT‑4o clearly outperforming every other frontier model, and in at least one narrow benchmark it came out ahead only of o3‑pro rather than the broader field. From this angle, GPT‑4o looks less like a universally ‘most reliable’ solution and more like a strong candidate that still needs careful guardrails, rigorous validation, and domain‑specific workflows before being trusted in genuinely high‑stakes environments.

Gemini is a more nuanced story. It can be very accurate, but some variants show high hallucination rates. That combination – sharp but sometimes overconfident – makes it more suitable for internal or experimental flows. A Gemini‑powered internal helper for staff that drafts replies or suggests next actions can work well, as long as a human reviews the final answer before it reaches a customer. For unsupervised, customer‑facing channels in regulated sectors, it’s safer to stick with models that are proven to say “I don’t know” more often.

Document drafting: emails, policies, contracts and marketing

Not every use case is life‑or‑death. Sometimes you just need a first draft of an email, campaign copy, or an internal memo. Other times, you’re working on a contract clause that may face a judge, or a disclosure a regulator will read line by line. The stakes change. Your model choice should change with them.

For creative marketing content – blogs, social posts, ad variations – Gemini and GPT‑5.x are excellent engines for ideas and volume. They can spin out many options fast, play with tone, and suggest angles you might not consider. The key safeguard is simple: treat any factual statements as unverified. Stats, case studies, and any reference to Australian law should be checked manually or with a separate, grounded workflow before they go live.
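One lightweight way to apply the “treat any factual statements as unverified” safeguard is to flag sentences that look like claims of fact before a draft goes live. The sketch below is a hypothetical pre‑publication check that uses simple regular expressions for percentages, dollar amounts, multi‑digit numbers (including years), references to Australian legislation or regulators, and study‑style wording. The patterns are illustrative assumptions and will miss plenty of claims, so the output is a checklist for a human reviewer, not a substitute for one.

```python
import re

# Illustrative patterns only; a real checklist would be tuned to your own content.
CLAIM_PATTERNS = {
    "number_or_stat": re.compile(r"\d+(?:\.\d+)?\s?%|\$\s?\d[\d,]*|\b\d{2,}\b"),
    "au_law_or_regulator": re.compile(
        r"\b(?:Act\s+\d{4}|Australian Consumer Law|ASIC|APRA|ACCC|ATO|OAIC|TGA)\b"
    ),
    "case_study_or_source": re.compile(r"\b(?:study|survey|research|according to)\b", re.I),
}


def flag_claims(draft: str) -> list[tuple[str, list[str]]]:
    """Return (sentence, reasons) pairs for sentences that need a fact check."""
    sentences = re.split(r"(?<=[.!?])\s+", draft.strip())
    flagged = []
    for sentence in sentences:
        reasons = [name for name, pattern in CLAIM_PATTERNS.items() if pattern.search(sentence)]
        if reasons:
            flagged.append((sentence, reasons))
    return flagged


draft = (
    "Switch today and feel the difference. "
    "Our customers save 30% on average. "
    "Under the Australian Consumer Law you can cancel anytime."
)
for sentence, reasons in flag_claims(draft):
    print(reasons, "->", sentence)
```

Here the first sentence passes untouched, while the statistic and the legal claim are both routed to a person (or a grounded workflow) for checking.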

For high‑stakes drafting – internal policies, contracts, financial product disclosure statements, health consent forms – you want models that balance accuracy, low hallucination, and cautious phrasing. Claude 4.5 Opus and Sonnet sit in that sweet spot, as does GPT‑5.x when used with tools. They tend to hedge more, call out uncertainty, and follow instructions about risk language (“do not give legal advice”, “cite sources”, “flag any uncertainty”) more reliably, which aligns with broader 2025 evaluations comparing GPT‑5.x with peers such as Gemini and Claude.

One thing remains constant: no general‑purpose LLM should be treated as an authoritative legal source. For Australian‑specific legal or compliance content, run RAG against up‑to‑date legislation and regulator guidance (think legislation.gov.au, ASIC, APRA, ACCC, ATO, and relevant state bodies), and then have a qualified human, usually from legal or risk, review the final draft. AI is a powerful drafting assistant, not a lawyer or compliance officer, no matter what the marketing claims.

Citation reliability and “fake but well‑cited” answers

As models get better at adding citations, a new risk appears: answers that look rock‑solid because they are filled with links, but the links don’t actually support the claims. This is the “fake but well‑cited” hallucination, and it might be more dangerous than an obviously wrong answer with no footnotes.

Independent testing by SHIFT ASIA compared ChatGPT (GPT‑4o), Gemini, Perplexity, Copilot, and Claude on hallucination rates, accuracy, bias, and citation reliability. The results show large differences in how often citations are real and actually relevant. Gemini 2.0 performed best overall for research quality, while GPT‑4o came out as most reliable for high‑stakes work. Perplexity was flagged as particularly risky because it often used real sources but misinterpreted them or bolted unrelated citations onto its claims – patterns that echo broader findings in studies like the Voronoi analysis of persistent hallucinations.

Temporal and geographic awareness also vary. Some systems are better at recent events; others have gaps after a certain date. Many lean toward US or EU content when the user does not clearly specify “Australia”. So you can end up with neat‑looking answers that cite real, high‑quality sources – but from the wrong jurisdiction or time frame. In regulated, audited domains like finance, healthcare, or government communication, that’s a serious problem.

For Australian organisations, the lesson is to test and monitor citation behaviour, not just answer correctness. In RAG setups, log which documents were retrieved and whether the model actually used them. Make it easy for reviewers to click through and check. And, where possible, instruct models to say when the retrieved documents don’t fully support the user’s question, instead of forcing an answer that papers over the gaps with confident noise. Hallucination benchmarks can help you quantify this behaviour up front.
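Part of this checking can be automated. The sketch below assumes a hypothetical RAG setup in which the model is told to cite retrieved chunks by ID in square brackets (for example `[doc-2]`). It records what was retrieved, which retrieved chunks were never cited, which citations point at documents that were never retrieved, and which sentences carry no citation at all. A crude word‑overlap score stands in for a proper “does this source support the claim?” check, such as a human reviewer or a separate entailment model; the ID format, threshold, and heuristic are all assumptions.

```python
import json
import re

# Assumed convention: the model cites retrieved chunks as [doc-<n>].
CITATION = re.compile(r"\[(doc-\d+)\]")


def _words(text: str) -> set[str]:
    return set(re.findall(r"[a-z0-9]+", text.lower()))


def check_citations(answer: str, retrieved: dict[str, str], min_overlap: float = 0.5) -> dict:
    """Audit an answer's citations against the chunks that were actually retrieved."""
    report = {"retrieved_ids": sorted(retrieved), "unused_ids": [], "unknown": [],
              "weak": [], "uncited_sentences": []}
    cited_anywhere = set()
    for sentence in re.split(r"(?<=[.!?])\s+", answer.strip()):
        cited = CITATION.findall(sentence)
        if not cited:
            report["uncited_sentences"].append(sentence)
            continue
        claim_words = _words(CITATION.sub("", sentence))
        for doc_id in cited:
            cited_anywhere.add(doc_id)
            if doc_id not in retrieved:
                report["unknown"].append(doc_id)  # cited but never retrieved
                continue
            # Crude proxy for "does this chunk support the sentence?"
            overlap = len(claim_words & _words(retrieved[doc_id])) / max(len(claim_words), 1)
            if overlap < min_overlap:
                report["weak"].append(doc_id)  # retrieved, but may not support the claim
    report["unused_ids"] = sorted(set(retrieved) - cited_anywhere)
    return report


retrieved = {
    "doc-1": "You have a cooling-off period of 14 days from the start date.",
    "doc-2": "Complaints are handled within 30 days.",
}
answer = "You have a 14 day cooling-off period [doc-1]. Fees are waived for seniors [doc-7]."
print(json.dumps(check_citations(answer, retrieved), indent=2))
```

Storing each report alongside the conversation gives reviewers a ready list of answers to click through first: anything with unknown, weak, or uncited entries.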

The AU regulatory environment: hallucinations as a compliance risk

In Australia, AI hallucinations are not just a “bad chatbot experience”. They can quickly become compliance incidents. The legal backdrop is dense: the Privacy Act 1988 and Australian Privacy Principles (APPs) overseen by the OAIC, consumer law enforced by the ACCC, banking and financial regulation under ASIC and APRA, plus bodies like AHPRA, TGA, and various state regulators.

Picture a customer‑facing AI that confidently quotes the wrong cooling‑off period on a financial product, or misstates a patient’s rights in a telehealth chat. That’s not simply a usability bug. It can amount to misleading or deceptive conduct, inaccurate disclosure, or even unsafe health advice. The fact that “the AI did it” is not a shield; regulators will look at your governance, testing, and controls.

Even the best models still hallucinate at non‑trivial rates in independent tests, often well into the double digits depending on task and benchmark. Recent evaluations put leading systems anywhere from low single‑digit error rates on tightly grounded tasks to well over 25–30% on open‑ended or reasoning‑heavy workloads. For example, Voronoiapp reports Claude 4.5 Haiku at a 26% hallucination rate, with other cutting‑edge models such as Claude 4.5 Sonnet and GPT‑5.1 crossing the 45–50% mark in tests that count incorrect answers rather than refusals. AIMultiple’s benchmarks show the latest models still above 15% on statement analysis, while Scott Graffius highlights top models at roughly 0.7–1.5% on grounded tasks but over 33% for reasoning‑focused models like OpenAI o3 on PersonQA/SimpleQA. Retrieval‑centric leaderboards such as Vectara’s report much lower hallucination rates (for example, around 5–6% for models like GPT‑4.1) when tasks are tightly constrained by source documents.

The throughline is clear: if you drop these systems into advisory workflows without safeguards, you’re almost guaranteeing a steady stream of incorrect outputs. For boards and risk teams, that moves hallucination from a narrow IT concern to a topic for governance committees, audits, and risk registers, especially as more analyses (including security‑oriented reviews of agentic hallucinations) show just how stubborn these failure modes remain.
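A quick calculation shows why “almost guaranteeing” is not an exaggeration. Assume, simplistically, that every answer independently has the same probability p of being a hallucination. Across n interactions the expected number of incorrect answers is n × p, and the chance of at least one incorrect answer is 1 − (1 − p)^n. The daily volume and rates below are hypothetical and only illustrate scale; real error rates vary with task, grounding, and workload, and errors are rarely truly independent.

```python
def expected_errors(p: float, n: int) -> float:
    """Expected number of hallucinated answers in n independent interactions."""
    return n * p


def prob_at_least_one(p: float, n: int) -> float:
    """Probability that at least one of n answers is wrong, assuming independence."""
    return 1 - (1 - p) ** n


# Hypothetical: a support assistant handling 2,000 chats a day.
daily_chats = 2_000
for rate in (0.01, 0.05, 0.26):
    print(f"p={rate:.0%}: ~{expected_errors(rate, daily_chats):.0f} wrong answers/day, "
          f"P(at least one in 100 chats)={prob_at_least_one(rate, 100):.1%}")
```

Even at a 1% error rate, this hypothetical assistant would produce around 20 wrong answers a day, and there is roughly a 63% chance that any given batch of 100 chats contains at least one.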

Australian organisations should treat model selection and hallucination controls like any other material risk. Document why you chose a model for a given workflow, how you tested its behaviour on AU‑specific scenarios, what RAG or tool integrations you use to keep it grounded, and where humans step in before the output reaches a customer or regulator. Over time, this documentation will likely become part of standard audit packs and regulatory engagement, much like cybersecurity controls today.
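A simple structured record can keep this documentation consistent across workflows. The sketch below shows one hypothetical shape for a model‑selection entry in a risk register, serialised to JSON so it can sit in version control or an audit pack. The field names, the test‑suite name, and every example value (including the measured rate) are illustrative placeholders, not a regulatory template or real results.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import date


@dataclass
class ModelSelectionRecord:
    """Hypothetical risk-register entry documenting why a model runs a workflow."""
    workflow: str
    risk_tier: str                      # e.g. "high", "medium", "low"
    model: str
    rationale: str
    au_test_suite: str                  # which AU-specific test set was run
    measured_hallucination_rate: float  # from your own tests, not vendor claims
    grounding: list[str] = field(default_factory=list)  # RAG corpora / tools used
    human_review: str = "required"      # where a person checks output
    last_tested: date = field(default_factory=date.today)

    def to_json(self) -> str:
        data = asdict(self)
        data["last_tested"] = self.last_tested.isoformat()  # dates aren't JSON-native
        return json.dumps(data, indent=2)


# All values below are placeholders for illustration.
record = ModelSelectionRecord(
    workflow="external support: fees and refunds",
    risk_tier="high",
    model="<model name and version>",
    rationale="Fewest invented fees in our pilot; declines when no document matches.",
    au_test_suite="fees-refunds-v3",
    measured_hallucination_rate=0.04,
    grounding=["product terms", "complaints policy"],
    human_review="all refund and hardship answers",
    last_tested=date(2025, 1, 15),
)
print(record.to_json())
```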

Claude, GPT‑5, Gemini and local models: strengths, limits, and AU‑specific RAG

Each leading model family brings a different balance of capability and risk. Understanding their “personality” helps you place them in the right roles instead of forcing one engine to do everything.

Claude 4.x / 4.5 focuses heavily on alignment and refusal behaviour. Its safety filters are tuned for finance, law, and medicine, and it is particularly good at handling instructions about uncertainty. When an issue is unclear, it often declines to answer or adds strong caveats instead of guessing. In RAG setups, it tends to cite cautiously and stick to retrieved content. This makes Claude a strong default for high‑risk domains, where a confident wrong answer is more dangerous than an admission of ignorance. Think legal review assistants, compliance support, or early‑stage medical triage helpers within regulated Australian industries.

GPT‑5.x, on the other hand, shines in tool‑augmented workflows. It is positioned as significantly less prone to hallucination in reasoning compared with GPT‑4, especially when it can use tools like code execution, retrieval, and workflow orchestration. In those setups, tools provide external checks on facts, calculations, and data. However, when run as a pure chat model with no grounding, GPT‑5.x can still produce plausible but wrong multi‑step arguments. For enterprises, the safest way to use it is as a reasoning and orchestration layer that always leans on RAG, search, or internal APIs for facts – a pattern explored in guides such as the GPT‑5.2 model selection and routing guide.
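One way to make a model “lean on internal APIs for facts” is to keep facts out of the generated text altogether. In the hypothetical sketch below, the model is assumed to be instructed to write placeholders such as `{fee:late_payment}` instead of numbers, and application code fills them from a system of record. Any placeholder it cannot resolve blocks the reply, so a guessed value never reaches the customer. The placeholder syntax, the `FEE_TABLE` lookup, and the fee values are all illustrative assumptions.

```python
import re

# Hypothetical system of record; in production this would be an internal API call.
FEE_TABLE = {"late_payment": "$15.00", "card_replacement": "$10.00"}

PLACEHOLDER = re.compile(r"\{fee:([a-z_]+)\}")


class UnresolvedFact(Exception):
    """Raised when the draft refers to a fact the system of record doesn't have."""


def fill_facts(draft: str, lookup: dict[str, str]) -> str:
    missing = [key for key in PLACEHOLDER.findall(draft) if key not in lookup]
    if missing:
        # Never let the model's own guess stand in for a missing value.
        raise UnresolvedFact(f"No authoritative value for: {', '.join(missing)}")
    return PLACEHOLDER.sub(lambda m: lookup[m.group(1)], draft)


model_draft = "A late payment fee of {fee:late_payment} applies after the due date."
print(fill_facts(model_draft, FEE_TABLE))

try:
    fill_facts("The dishonour fee is {fee:dishonour}.", FEE_TABLE)
except UnresolvedFact as err:
    print("Escalate to a human:", err)
```

The model still handles the wording and reasoning, but every number in the final reply traces back to a system you control.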

Gemini 3 (Pro / 3.x) brings another angle: strong multimodal skills and plain‑English reasoning. It can read scanned PDFs, invoices, screenshots, and forms, then explain them clearly. In Australian contexts, that makes it valuable for document understanding tasks like reading government forms, interpreting utility bills, or processing handwritten notes. When the source document is in front of the model, hallucination risk drops because it doesn’t have to invent details – it can quote and summarise. The key is to constrain Gemini to “explain what’s in this document” rather than “answer anything about the world”, and to choose between it and GPT‑5‑class options using resources like comparative feature and pricing analyses.
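When a model is asked to “explain what’s in this document”, a cheap guard is to require it to quote the passages it relies on and then confirm those quotes actually appear in the source text. The sketch below assumes the document has already been turned into text (for example by OCR, which can introduce its own errors) and that the model wraps quotes in straight double quotation marks; both are assumptions for illustration, and the bill contents are made up.

```python
import re


def _normalise(text: str) -> str:
    # Unify curly apostrophes and collapse whitespace so line breaks in scans don't cause false alarms.
    text = text.replace("\u2019", "'").replace("\u2018", "'")
    return re.sub(r"\s+", " ", text).strip().lower()


def unsupported_quotes(explanation: str, source_text: str) -> list[str]:
    """Return quoted passages in the explanation that don't appear in the source."""
    source = _normalise(source_text)
    quotes = re.findall(r'"([^"]+)"', explanation)
    return [q for q in quotes if _normalise(q) not in source]


bill = """Account: 4471
Amount due: $182.40
Due date: 14 March"""
explanation = 'The bill shows "Amount due: $182.40" and says "late fee: $20".'
print(unsupported_quotes(explanation, bill))
```

Any non‑empty result means the explanation contains something the document does not say, which is a good trigger for re‑generation or human review.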

Local and open models – such as DeepSeek‑V3.2 or Llama‑class systems – have improved rapidly, but they still show higher and more variable hallucination rates than the best proprietary models, especially outside their training comfort zone. Their sweet spot is narrow, well‑defined tasks with strong RAG behind them. For Australian organisations that need on‑prem or data‑sovereign deployments, local models can work for internal policy Q&A, a single‑product support flow, or a specific knowledge base. The catch is that you should treat them as less reliable for novel or open‑ended questions. Without tight scoping and good AU‑specific corpora, they will happily guess.

Across all these models, one pattern repeats: if you don’t ground them in Australian data, they will hallucinate foreign laws, policies, and norms. To avoid that, design RAG pipelines that pull content from AU‑specific sources such as legislation.gov.au, ASIC, APRA, ACCC, ATO, NHMRC, RACGP, TGA, state health departments, and your own internal policies. Make those sources the “single source of truth” the model must lean on. Without this grounding, even very advanced models drift back to US or UK defaults that look reasonable but are jurisdictionally wrong – an issue that model comparison studies and hallucination benchmarks are beginning to quantify more explicitly.
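Making AU sources the “single source of truth” starts at ingestion. The sketch below is a hypothetical filter that only admits a document into the RAG corpus if its URL’s host matches, or is a subdomain of, an allow‑list built from the sources named above, and tags each admitted document with its jurisdiction so retrieval can filter on it later. The `intranet.example.com.au` host and the example URLs are placeholders; which state bodies to add, and whether to admit whole domains, are policy decisions for your own team.

```python
from urllib.parse import urlparse

# Allow-list drawn from the sources named above; "intranet.example.com.au" is a placeholder.
ALLOWED_HOSTS = {
    "legislation.gov.au", "asic.gov.au", "apra.gov.au", "accc.gov.au", "ato.gov.au",
    "nhmrc.gov.au", "racgp.org.au", "tga.gov.au", "intranet.example.com.au",
}


def is_allowed(url: str) -> bool:
    """True if the URL's host is an allowed host or a subdomain of one."""
    host = (urlparse(url).hostname or "").lower()
    return any(host == h or host.endswith("." + h) for h in ALLOWED_HOSTS)


def ingest(docs: list[dict]) -> tuple[list[dict], list[str]]:
    """Split candidate docs into an AU-tagged corpus and a list of rejected URLs."""
    corpus, rejected = [], []
    for doc in docs:
        if is_allowed(doc["url"]):
            corpus.append({**doc, "jurisdiction": "AU"})
        else:
            rejected.append(doc["url"])
    return corpus, rejected


corpus, rejected = ingest([
    {"url": "https://www.legislation.gov.au/example-page", "text": "..."},
    {"url": "https://www.ftc.gov/example-page", "text": "..."},
    {"url": "https://asic.gov.au.evil.example/fake", "text": "..."},
])
print(len(corpus), rejected)
```

Matching on the parsed hostname, rather than searching the URL string, is what stops look‑alike hosts such as `asic.gov.au.evil.example` from slipping through.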

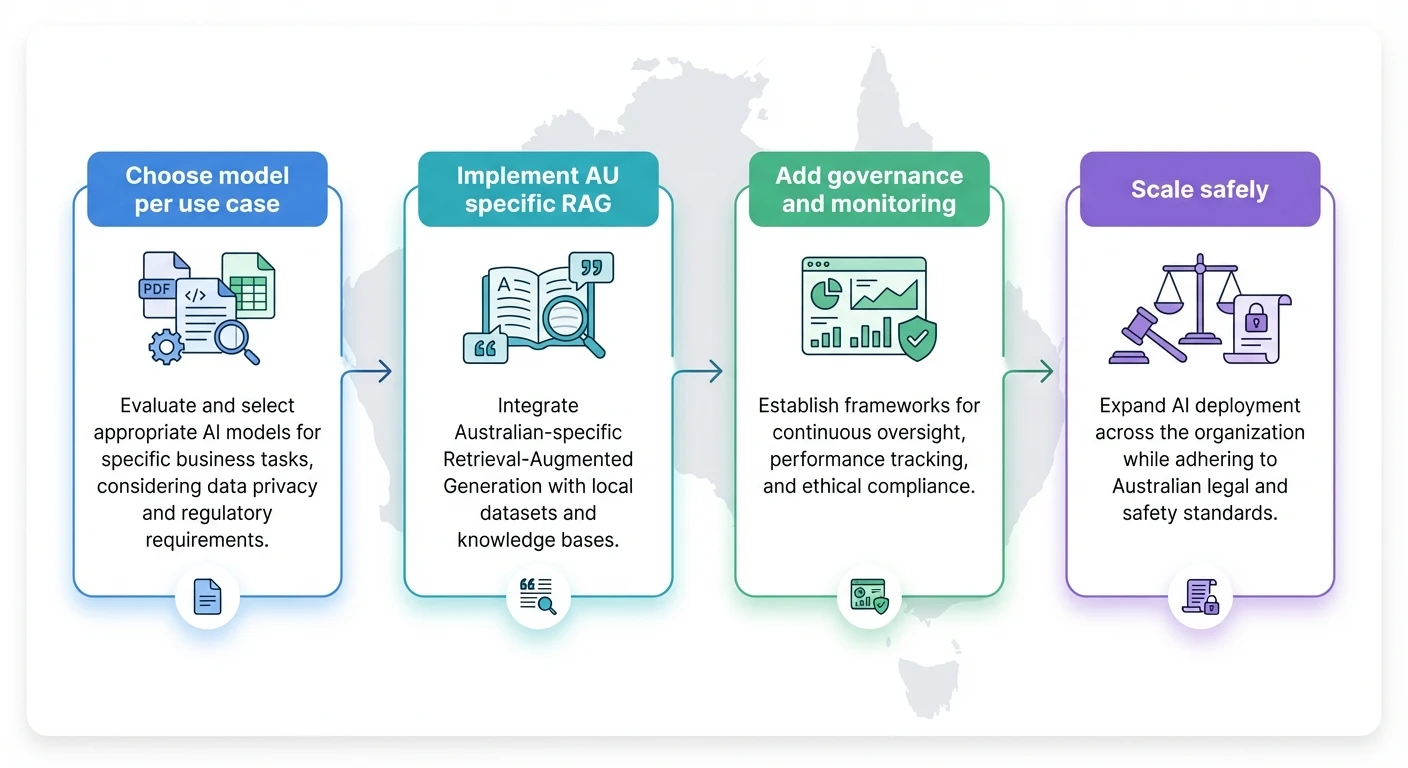

Practical implementation tips for Australian organisations

Turning all this into a real deployment plan can feel daunting. The good news is you can start small and still be rigorous.

First, map your use cases by risk level. For each workflow – customer support, marketing content, internal advisory, document drafting – ask: what happens if the AI is wrong? Does someone lose money? Does it misstate the law? Or is it just a slightly off email draft that a person will fix anyway? Use that map to decide which models can be used where and how much human review is needed, and match those decisions to the right mix of implementation and advisory services.
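A risk map like this can start as a lookup table that every deployment must consult. The tiers, example workflows, and controls below are hypothetical placeholders that show the shape; the real assignments should come from your own risk, legal, and compliance teams. The one design choice worth copying is the default: anything nobody has classified yet is treated as high risk.

```python
from enum import Enum


class Risk(Enum):
    LOW = "low"        # a person will fix it anyway
    MEDIUM = "medium"  # errors waste time or embarrass, but cause no direct harm
    HIGH = "high"      # someone could lose money, or the law could be misstated


# Hypothetical mapping of workflows to risk tiers.
USE_CASES = {
    "internal email draft": Risk.LOW,
    "marketing copy": Risk.MEDIUM,
    "support: fees, refunds, hardship": Risk.HIGH,
    "policy or contract drafting": Risk.HIGH,
}

# Hypothetical controls per tier.
CONTROLS = {
    Risk.LOW: {"grounding": "none", "human_review": "author edits"},
    Risk.MEDIUM: {"grounding": "RAG or manual fact-check", "human_review": "spot-check"},
    Risk.HIGH: {"grounding": "RAG with citations", "human_review": "every output before sending"},
}


def controls_for(use_case: str) -> dict:
    # Unknown workflows default to the strictest tier until someone classifies them.
    return CONTROLS[USE_CASES.get(use_case, Risk.HIGH)]


print(controls_for("marketing copy"))
print(controls_for("new chatbot nobody has assessed"))
```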

Second, design and run hallucination tests before go‑live. Build a test set of questions based on your own products, your fees, your policies, and relevant AU regulations. Run those through several models and record how often they make things up, how they handle uncertainty, and whether they insert foreign‑jurisdiction content. Repeat the tests after any major model update, because behaviour can change overnight, especially when you switch between options such as the GPT‑5.2 Instant and Thinking tiers, as discussed in model routing guides.
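A first test run doesn’t need heavy tooling. The sketch below assumes a hypothetical `ask(model, question)` function wrapping whichever API you use (stubbed here with canned answers so it runs), a test set in which each question lists facts a correct answer must contain and whether “I don’t know” is acceptable, and a short list of foreign‑jurisdiction terms that suggest the model has drifted away from Australian law. All test cases, markers, and answers are illustrative. Simple string checks like these only catch obvious failures, so treat the counts as a triage signal for human reviewers.

```python
from collections import Counter

# Hypothetical AU-specific test cases.
TEST_SET = [
    {"q": "What is the late payment fee?", "must_include": ["$15"], "may_abstain": False},
    {"q": "Can I get a refund after 90 days?", "must_include": [], "may_abstain": True},
]
FOREIGN_MARKERS = ["FTC", "GDPR", "FDIC", "HIPAA", "Federal Trade Commission"]
ABSTAIN_MARKERS = ["i don't know", "i'm not sure", "please contact"]


def ask(model: str, question: str) -> str:
    """Stand-in for a real model call; replace with your provider's client."""
    canned = {
        "What is the late payment fee?": "The late payment fee is $15.",
        "Can I get a refund after 90 days?": "Under FTC rules you always get a refund.",
    }
    return canned[question]


def grade(answer: str, case: dict) -> str:
    lower = answer.lower()
    if any(m.lower() in lower for m in FOREIGN_MARKERS):
        return "foreign_jurisdiction"
    if any(m in lower for m in ABSTAIN_MARKERS):
        return "abstained_ok" if case["may_abstain"] else "abstained_wrongly"
    if all(fact in answer for fact in case["must_include"]):
        # With no required facts to check, a confident answer needs a human look.
        return "pass" if case["must_include"] else "needs_review"
    return "possible_hallucination"


def run_suite(model: str) -> Counter:
    return Counter(grade(ask(model, case["q"]), case) for case in TEST_SET)


print(run_suite("candidate-model-a"))
```

Keeping the suite in version control and re‑running it for every model or tier you route to gives you comparable numbers after each update.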

Third, invest in AU‑specific RAG corpora and retrieval. Curate a clean set of authoritative documents—legislation, regulator guidance, internal manuals, product terms—and index them with a vector database or similar system. Configure your assistant so that for high‑risk questions it must retrieve and cite from those sources before answering. Make “no answer” a valid outcome if the right document is not found.
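The “no answer is a valid outcome” rule can be enforced before the model is ever called. The sketch below uses a tiny bag‑of‑words cosine similarity as a stand‑in for a real embedding model and vector database, plus a similarity threshold (an assumed value you would tune on your own test set) below which the assistant returns a fixed fallback instead of generating anything. The corpus entries and document IDs are hypothetical.

```python
import math
import re
from collections import Counter

# Hypothetical curated AU corpus: (document ID, text).
CORPUS = [
    ("terms-4.2", "Customers may cancel within the 14 day cooling-off period for a full refund."),
    ("complaints-1", "Complaints are acknowledged within one business day."),
]
FALLBACK = "I can't find that in our official documents, so I'll connect you with a team member."


def _vector(text: str) -> Counter:
    return Counter(re.findall(r"[a-z0-9]+", text.lower()))


def _cosine(a: Counter, b: Counter) -> float:
    dot = sum(a[t] * b[t] for t in a)
    norm = math.sqrt(sum(v * v for v in a.values())) * math.sqrt(sum(v * v for v in b.values()))
    return dot / norm if norm else 0.0


def retrieve_or_decline(question: str, threshold: float = 0.3):
    """Return (doc_id, text) of the best match, or None if nothing is relevant enough."""
    q = _vector(question)
    scored = [(_cosine(q, _vector(text)), doc_id, text) for doc_id, text in CORPUS]
    score, doc_id, text = max(scored)
    return (doc_id, text) if score >= threshold else None


for question in ["Can I cancel during the cooling-off period?", "Do you offer pet insurance?"]:
    hit = retrieve_or_decline(question)
    print(question, "->", hit[0] if hit else FALLBACK)
```

Only when a document clears the threshold would its text be passed to the model as context; otherwise the customer gets the fallback and the question can be logged as a gap in the corpus.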

Fourth, bake human‑in‑the‑loop review into high‑stakes flows. You might allow the AI to auto‑send responses to low‑risk FAQs, but route anything involving legal rights, health, or financial advice to a human queue with the AI’s draft attached. Over time, measure how often humans change the AI’s answer and use that to refine prompts, models, or your RAG setup – with help from professional AI implementation services where needed.
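Both the routing rule and the “how often do humans change the draft” measure can be sketched in a few lines. Below, a hypothetical keyword list decides whether a draft may be auto‑sent or must go to a human queue, and Python’s standard `difflib.SequenceMatcher` gives a rough similarity score between the AI draft and what the agent actually sent. The keywords and the 0.9 “substantially edited” cut‑off are assumptions to calibrate against your own data; keyword matching is crude, so a production system would more likely use a classifier with conservative defaults.

```python
from difflib import SequenceMatcher

# Hypothetical triggers for legal rights, health, or financial advice.
HIGH_RISK_TERMS = ["refund", "hardship", "complaint", "medication", "diagnosis", "invest", "legal"]


def route(customer_message: str) -> str:
    text = customer_message.lower()
    return "human_queue" if any(term in text for term in HIGH_RISK_TERMS) else "auto_send"


def edit_rate(pairs: list[tuple[str, str]], cutoff: float = 0.9) -> float:
    """Share of (ai_draft, final_sent) pairs where the human changed the text substantially."""
    if not pairs:
        return 0.0
    edited = sum(1 for draft, final in pairs if SequenceMatcher(None, draft, final).ratio() < cutoff)
    return edited / len(pairs)


print(route("What are your opening hours?"))    # auto_send
print(route("I want a refund for last month"))  # human_queue

reviewed = [
    ("Your refund will arrive in 5 days.", "Your refund will arrive in 5 days."),
    ("You are eligible for a refund.", "We need to check your eligibility before confirming a refund."),
]
print(f"{edit_rate(reviewed):.0%} of drafts substantially edited")
```

Tracking the edit rate per topic over time shows where prompts, model choice, or the RAG corpus most need attention.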

Finally, document everything. Record which models you use in which workflows, what guardrails you’ve added, how you monitor hallucinations, and how you handle incidents when they occur. Treat that documentation as living material for boards, auditors, and regulators. It shows that you understand the risk and are managing it with intent, not just throwing the latest model at every problem and hoping for the best—something that becomes even more important as you scale to enterprise‑wide deployments governed by clear terms and internal AI use policies.

Conclusion and next steps

AI model hype moves fast, but the core question for Australian organisations stays the same: can you trust this system in front of your customers, regulators, and staff? High accuracy alone doesn’t answer that. You need to understand how often each model hallucinates, how it behaves under uncertainty, and how well you’ve grounded it in Australian law, policy, and context.

Claude 4.x, GPT‑5.x, Gemini 3, and modern local models all have a place. The safest path is not picking a single “winner”, but building a small stable of models, each matched to the right job and wrapped in RAG, controls, and human review. If you’d like help designing that stack – choosing models, building AU‑specific corpora, or setting up hallucination testing – reach out to LYFE AI. We can work with your risk, legal, and technology teams to turn AI from a headline experiment into a governed, production‑ready capability that stands up to both customers and regulators, starting with a secure Australian AI assistant and scaling through bespoke automation and specialist advisory support.

© 2026 LYFE AI. All rights reserved.

Frequently Asked Questions

What is an AI hallucination and why is it a problem for Australian businesses?

An AI hallucination is when a model confidently generates information that isn’t true or isn’t supported by its sources. In Australia, this can create real risks: giving customers wrong advice about fees or policies, mis‑stating regulations, or fabricating medical or legal information. That can quickly turn into complaints, regulatory scrutiny, or reputational damage for banks, insurers, healthcare providers and government agencies.

Which AI model has the lowest hallucination rate for Australian use cases?

There is no single “best” low‑hallucination model for every Australian use case. GPT‑5‑class, Claude 4.x and Gemini 3 all perform strongly on benchmarks, but their hallucination behaviour can differ by domain (e.g. finance vs health) and by task (customer support vs drafting policies). The safest approach is to test multiple models against your own AU‑specific knowledge base using realistic prompts, then combine the best model with retrieval‑augmented generation (RAG) and guardrails rather than relying on benchmarks alone.

How can Australian companies reduce AI hallucinations in customer support chats?

To cut hallucinations in support, you need to constrain the model to your own verified content and keep it away from open‑ended guessing. Practically, that means using AU‑specific RAG over your FAQs, policies, product docs and knowledge bases, plus rules like “only answer from provided documents” and automatic fallbacks to a human agent when confidence is low. LYFE AI typically also adds prompt‑level disclaimers, response validation, and logging so you can audit risky outputs if something slips through.

Is GPT‑5 safer than Claude 4 or Gemini for Australian customer service?

Not automatically. GPT‑5‑class models might be more capable, but higher capability can sometimes mean more persuasive hallucinations when the model is uncertain. Claude 4.x is often stronger at cautious, grounded reasoning, and Gemini 3 integrates well with Google’s ecosystem, but their safety depends on how they’re configured. LYFE AI usually runs comparative pilots with your real transcripts and policies to see which model behaves most safely under Australian product, privacy and disclosure rules.

How do hallucinations affect AI‑generated documents like policies, contracts and emails?

In document drafting, hallucinations can show up as invented clauses, outdated legal references, or made‑up internal policies that never existed. For Australian organisations, that can create compliance gaps (e.g. contradicting the Corporations Act, NCCP, or Privacy Act), misleading marketing copy, or inconsistent T&Cs across channels. The safest pattern is to use AI for first drafts, then require human review, tracked changes, and governance workflows before anything reaches customers or regulators.

Can I trust AI citations and references, or do models fake sources?

Modern models can still fabricate citations, case names, and URLs that look plausible but don’t exist. Even when a model provides real links, they may not actually support the specific claim it’s making. LYFE AI tackles this by using retrieval‑based citations (linking only to documents actually retrieved from your AU content), automatic source‑checking, and UI patterns that show what evidence each sentence is based on so your staff can verify high‑risk outputs quickly.

What Australian regulations make AI hallucinations a compliance risk?

Hallucinations can trigger issues under the Australian Consumer Law (misleading and deceptive conduct), ASIC and APRA guidance for financial services, health‑related advertising and TGA rules, and the Privacy Act if personal data is mishandled. Industry codes (like the Banking Code of Practice or Insurance Council guidelines) also expect accurate, clear customer communication. Regulators increasingly view AI outputs as your organisation’s responsibility, so you need controls, audit trails, and documented governance around any AI system that talks to customers or drafts formal documents.

How do local Australian AI models compare to GPT‑5, Claude and Gemini for hallucinations?

Local models (including those hosted within Australia) often lag frontier models on raw capability but can be better controlled, especially when tightly fine‑tuned on your AU‑specific data. They can offer data‑residency benefits and lower latency, and when paired with strong RAG and prompt constraints, their hallucination rate can be competitive for narrow domains like a single bank’s products. LYFE AI often recommends a hybrid approach: frontier models for complex reasoning, local models for sensitive or tightly scoped tasks with strict governance needs.

What practical steps can my Australian organisation take to control AI hallucination risk?

Start by clearly defining high‑risk tasks (e.g. giving financial or medical guidance, making eligibility decisions) and keep AI in an assistive role with mandatory human review there. Implement AU‑specific RAG, guardrails, and model selection; log and monitor AI outputs; and build a feedback loop so staff can flag hallucinations. LYFE AI typically helps clients with an AI risk assessment, pilot deployments, and a governance framework that aligns with their regulators and internal audit requirements.

How can LYFE AI help my business safely deploy AI in Australia?

LYFE AI specialises in designing and implementing AI systems that prioritise low hallucination rates and regulatory compliance for Australian organisations. This includes model benchmarking on your own data, AU‑specific RAG setup, safety and governance controls, and integration into your customer support and document workflows. We also provide ongoing monitoring, tuning and training so your teams understand when to trust AI outputs and when to escalate to humans.