Table of Contents

- What DeepMind’s Intelligent AI Delegation paper actually says

- Why autonomous AI agents fail in real-world environments

- Intelligent delegation: structured authority, responsibility, and accountability

- The five-pillar framework for reliable intelligent AI delegation

- AI assistants vs autonomous agents in your business stack

- Practical tips: how AU businesses can apply intelligent AI delegation today

- Conclusion and next steps

What DeepMind’s Intelligent AI Delegation paper actually says

DeepMind’s paper starts from a simple observation: the next wave of AI will be agentic. That means systems that do not only answer questions, but also plan, act, and coordinate with other agents and humans across what they call the “agentic web”. Think of swarms of AI services trading tasks and data across the internet, not just one chatbot in a browser.

The authors argue that many current agent setups are fragile. As soon as reality shifts – a new regulation, a broken API, a supplier failure – the whole workflow can fall apart, because nothing in the system knows how to rethink the plan.

To fix this, the paper defines intelligent delegation as a sequence of decisions about task allocation that also transfers authority, responsibility, and accountability, sets clear roles and boundaries, makes intent explicit, and includes mechanisms for establishing trust between the parties, all in order to manage risk. Delegation is not “let the agent run with it”; it is a formal process about who can do what, under which constraints, and how we check it, as formalised in the Intelligent AI Delegation framework.

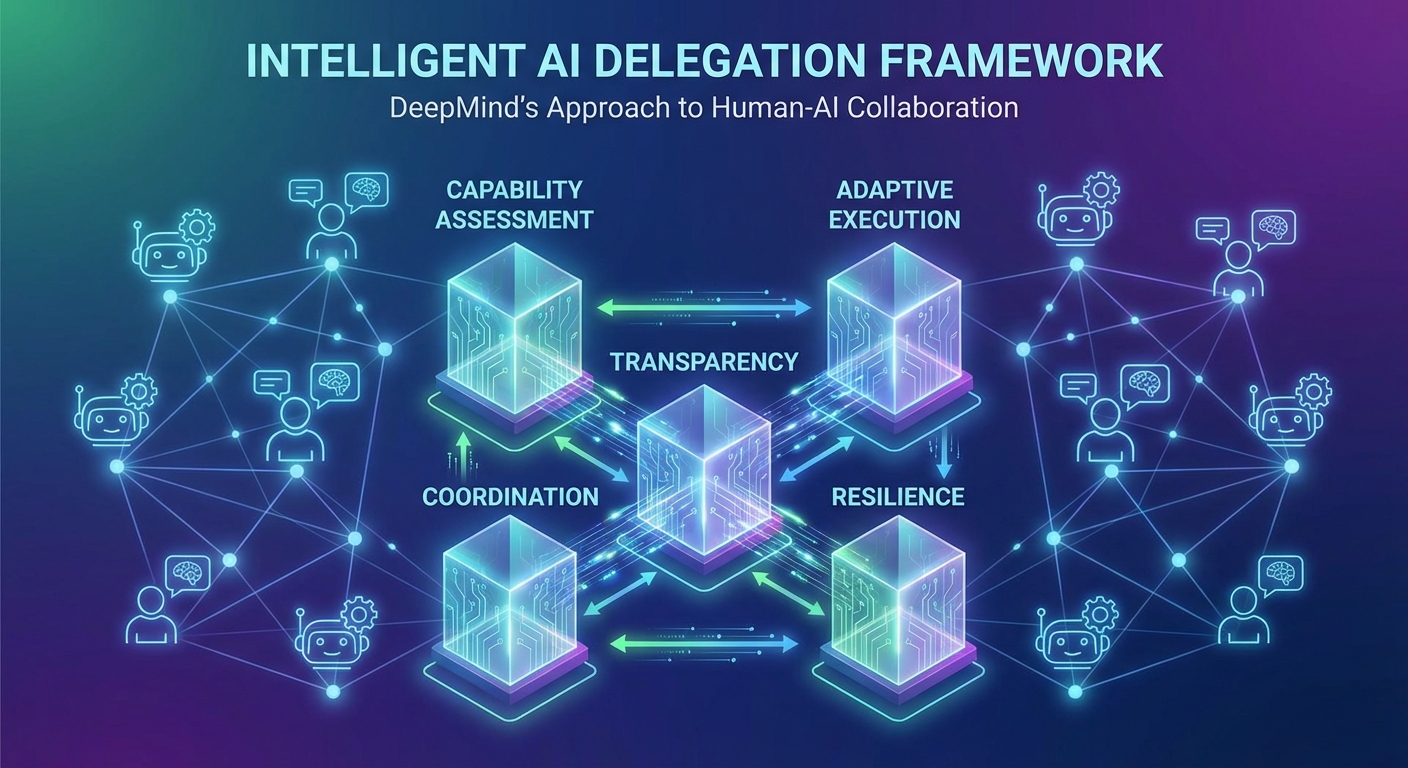

DeepMind then lays out a five-pillar framework for making this work at scale in complex agent networks. It covers dynamic capability assessment, adaptive execution, structural transparency, scalable coordination, and systemic resilience. We will unpack each pillar shortly, but the key point is this: they treat delegation as infrastructure and governance, not as a side-effect of clever prompting, a view echoed in independent summaries like The AI Insider’s coverage.

Why autonomous AI agents fail in real-world environments

To understand intelligent AI delegation, you first need to see why current agents break. Most agent frameworks today split big tasks into smaller steps using fixed templates and rules. That can look smart on a slide deck, but it assumes the world behaves like the template. Outside a controlled demo, it rarely does.

Imagine an AI agent chain managing invoice payments for a mid-sized Aussie retailer. Agent A checks the invoice, Agent B checks stock, Agent C moves money. If a new supplier adds an unusual payment term, or a bank API times out, the rules often do not adapt. Instead of asking for help, the agents keep following their scripts, and small issues snowball into unpaid suppliers or double charges.

DeepMind points to a deeper problem: a lack of transitive accountability. When Agent A gives a task to B, who passes part of it to C, who is actually responsible if something goes wrong? In many designs, nobody can answer that clearly. There is no explicit chain of who had authority, who had to verify what, and where a human should step in to stop the damage.

On top of that, monitoring is weak. Many multi-agent systems care about getting tasks “done”, but not about verifying how they were done or whether the result is correct. Without strong verification signals, especially in open-ended tasks, the system cannot tell if an agent is drifting away from the goal until it is too late.

Finally, security and trust are often treated as afterthoughts. Agents are given wide permissions to files, APIs, or financial systems. There are no fine-grained controls, no strong reputation models, and no robust way to say “this high-risk task must be checked by a human”. In a tightly connected network, one compromised or buggy agent can trigger cascading failures, a risk highlighted explicitly in analyses like MarkTechPost’s discussion of securing the agentic web.

Intelligent delegation: structured authority, responsibility, and accountability

Intelligent AI delegation flips the usual mindset. Instead of “how do we make the smartest agent”, the question becomes “how do we delegate tasks safely between humans and agents”. It treats delegation as a structured transfer of authority, not as a casual side effect of calling an API.

In the paper, intelligent delegation means that every handover of work – whether from human to AI, AI to AI, or AI back to human – carries clear answers to a few core questions:

- What exactly is the task and what outcome do we expect?

- Who has authority to make which decisions within this task?

- Who is accountable if things go wrong at this step?

- How will we verify that the work is complete and correct?

- What are the boundaries and permissions for this delegate?
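To make these five questions concrete, here is a minimal Python sketch that records them as one object per handover. The `DelegationContract` class and its field names are hypothetical, not taken from the paper. The point is only that every delegation carries its task, authority, accountable party, verification check and permissions together.

```python
from dataclasses import dataclass
from typing import Callable


@dataclass(frozen=True)
class DelegationContract:
    """Hypothetical record that answers the five handover questions for one delegation."""
    task: str                             # what exactly is the task?
    expected_outcome: str                 # what outcome do we expect?
    decision_authority: frozenset         # which decisions the delegate may take alone
    accountable_party: str                # who answers for this step if it goes wrong
    verifier: Callable[[object], bool]    # how completion and correctness are checked
    permissions: frozenset = frozenset()  # boundaries: resources the delegate may touch

    def may_decide(self, decision: str) -> bool:
        return decision in self.decision_authority

    def may_access(self, resource: str) -> bool:
        return resource in self.permissions

    def accept(self, result: object) -> bool:
        """Accept a delegate's result only if the agreed check passes."""
        return bool(self.verifier(result))


# Illustrative values only; the task, roles and resource names are made up.
contract = DelegationContract(
    task="Match invoice to its purchase order",
    expected_outcome="The matching purchase order number",
    decision_authority=frozenset({"flag_mismatch"}),
    accountable_party="accounts-payable-lead",
    verifier=lambda r: isinstance(r, str) and r.startswith("PO-"),
    permissions=frozenset({"read:invoices", "read:purchase_orders"}),
)
```

A delegate's output is then accepted through `contract.accept(result)` rather than trusted by default, and any tool call can be checked against `may_access` before it runs.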

This might sound like overkill for simple use cases, and honestly, for low-risk tasks it probably is. DeepMind highlights that for very simple or low‑impact jobs, it’s often cheaper and more practical to run a basic assistant with minimal governance, rather than investing in complex oversight systems.

But once you move into money movement, health decisions, or safety-critical infrastructure, this structure becomes vital. It turns vague responsibility into explicit contracts. It also allows what the paper calls transitive accountability: if C’s work is verified by B, and B’s work is verified by A, you can trace responsibility back through the whole chain, just as outlined in the core Intelligent AI Delegation paper.
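Transitive accountability can be pictured as a chain of handoffs in which every step records who verified the delegate's work. The sketch below illustrates that idea under stated assumptions (the `Handoff` record and agent names are hypothetical): it walks the chain backwards and flags any step nobody checked, which is exactly where responsibility stops being traceable.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Handoff:
    delegator: str              # who handed the work down
    delegate: str               # who received it
    verified_by: Optional[str]  # who checked the delegate's output (None = unverified)


def accountability_trace(chain: list[Handoff]) -> list[str]:
    """Walk a delegation chain from the deepest step back to the origin."""
    lines = []
    for h in reversed(chain):
        checker = h.verified_by or "NOBODY (accountability gap)"
        lines.append(f"{h.delegate} <- delegated by {h.delegator}, verified by {checker}")
    return lines


def gaps(chain: list[Handoff]) -> list[str]:
    """Return the delegates whose work nobody verified."""
    return [h.delegate for h in chain if h.verified_by is None]


# Human -> A -> B -> C, with each delegator verifying its delegate's output.
chain = [
    Handoff("human-owner", "agent-A", verified_by="human-owner"),
    Handoff("agent-A", "agent-B", verified_by="agent-A"),
    Handoff("agent-B", "agent-C", verified_by="agent-B"),
]
```

With every link verified, `gaps(chain)` is empty and the trace reads back from agent C to the human owner; drop one verifier and the gap shows up immediately.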

This is similar to how well-run human organisations work. In a good company, you know which manager owns which decision, how they report upwards, and when something must go to the board. Intelligent delegation tries to bring this kind of governance into AI systems, rather than leaving everything to best-effort prompts and vague “oversight”.

The five-pillar framework for reliable intelligent AI delegation

DeepMind’s intelligent delegation framework rests on five linked pillars. Think of these as design principles for any serious agentic system, whether you are building in-house tools or using a platform like LYFE AI’s services to orchestrate assistants for your team.

1. Dynamic Capability Assessment

Instead of assuming that a delegate is always capable and available, the system should constantly assess current capability. That includes the agent’s resources, workload, track record, and alignment with the task’s risk level. In practice, this might look like reputation scores, real-time health checks, or even bidding mechanisms where agents only accept work they can handle right now.
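A simple way to apply dynamic capability assessment is to check each candidate's current state just before delegating. This Python sketch assumes three hypothetical signals per agent (a health-check result, queue length and historical success rate) and a made-up table of minimum success rates per risk level. If no agent qualifies, it returns `None` so the caller can escalate to a human.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class AgentStatus:
    name: str
    healthy: bool        # result of a real-time health check
    queue_length: int    # current workload
    max_queue: int       # capacity
    success_rate: float  # track record, 0.0 to 1.0


# Hypothetical mapping from task risk level to the minimum track record required.
MIN_SUCCESS_BY_RISK = {"low": 0.80, "medium": 0.90, "high": 0.97}


def eligible(agent: AgentStatus, risk: str) -> bool:
    """An agent qualifies only if it is up, has spare capacity and a good enough record."""
    return (
        agent.healthy
        and agent.queue_length < agent.max_queue
        and agent.success_rate >= MIN_SUCCESS_BY_RISK[risk]
    )


def pick_delegate(agents: list[AgentStatus], risk: str) -> Optional[AgentStatus]:
    """Choose the best eligible agent right now, or None to signal 'escalate to a human'."""
    candidates = [a for a in agents if eligible(a, risk)]
    if not candidates:
        return None
    # Prefer the strongest track record, then the lightest current load.
    return max(candidates, key=lambda a: (a.success_rate, -a.queue_length))


agents = [
    AgentStatus("agent-fast", True, 2, 10, 0.92),
    AgentStatus("agent-careful", True, 9, 10, 0.98),
    AgentStatus("agent-down", False, 0, 10, 0.99),
]
```

For a high-risk task only `agent-careful` clears the bar here: `agent-fast` lacks the track record and `agent-down` fails its health check.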

2. Adaptive Execution

Delegation is not a one-shot decision. As tasks unfold, the system should track progress and adjust. That might mean pausing work, escalating to a human, or reassigning to a more capable agent if something looks off. Audit trails and logs support this, so you can trace what happened when you need to investigate.
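Adaptive execution can be sketched as a loop that checks each step's result, reassigns to a fallback agent when something looks off, pauses and escalates when no agent succeeds, and writes every decision to an audit log. The agents, step names and the `is_on_track` check below are hypothetical stand-ins, and catching every exception is a deliberate simplification for the sketch.

```python
def run_with_adaptation(steps, agents, is_on_track):
    """Run steps in order, adapting when an agent fails or drifts.

    steps:       list of step names
    agents:      callables agent(step) -> result, tried in order (first is the default)
    is_on_track: callable(step, result) -> bool, the progress check for a step
    Returns (results, audit_log, escalated_step_or_None).
    """
    audit = []    # (step, who, what happened): the trail used for later investigation
    results = {}
    for step in steps:
        for agent in agents:
            try:
                result = agent(step)
            except Exception as exc:  # e.g. an API timeout
                audit.append((step, agent.__name__, f"error: {exc}"))
                continue  # reassign to the next agent
            if is_on_track(step, result):
                audit.append((step, agent.__name__, "ok"))
                results[step] = result
                break
            audit.append((step, agent.__name__, "off track, reassigning"))
        else:
            # No agent produced an acceptable result: pause and hand to a human.
            audit.append((step, "system", "paused, escalated to human"))
            return results, audit, step
    return results, audit, None


# Hypothetical agents for the invoice example: the primary hits a bank API timeout.
def primary_agent(step):
    if step == "pay_supplier":
        raise TimeoutError("bank API timed out")
    return f"{step}: done"


def backup_agent(step):
    return f"{step}: done"


results, audit, escalated = run_with_adaptation(
    ["check_invoice", "pay_supplier"],
    [primary_agent, backup_agent],
    is_on_track=lambda step, result: result.endswith("done"),
)
```

Here the timeout is absorbed by reassigning to the backup agent, and the audit log records both the failure and the recovery instead of silently retrying.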

3. Structural Transparency

This is where the idea of contract-first decomposition comes in. Tasks are broken down until each sub-task has clear, verifiable completion criteria. That might be unit tests, proofs, or deterministic checks. You do not delegate something unless you know how you will check whether it was done properly.
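Contract-first decomposition can be expressed as a rule: a sub-task is only eligible for delegation if it carries a deterministic completion check. In this sketch (the `SubTask` type, task names and invoice figures are hypothetical), sub-tasks without a check are routed back for redesign or human ownership, and `verify` refuses to accept output for them.

```python
from dataclasses import dataclass
from typing import Callable, Optional


@dataclass
class SubTask:
    name: str
    check: Optional[Callable[[object], bool]]  # deterministic completion check, if any


def delegable(subtasks: list[SubTask]) -> tuple[list[str], list[str]]:
    """Split sub-tasks into those safe to delegate and those needing redesign or a human."""
    ready = [t.name for t in subtasks if t.check is not None]
    needs_work = [t.name for t in subtasks if t.check is None]
    return ready, needs_work


def verify(task: SubTask, output: object) -> bool:
    """Apply the agreed check; refuse outright if no check was ever defined."""
    if task.check is None:
        raise ValueError(f"{task.name} has no completion criterion; do not delegate")
    return task.check(output)


invoice_lines = [120.00, 80.50]  # hypothetical invoice line items
subtasks = [
    SubTask("sum_line_items", lambda total: abs(total - sum(invoice_lines)) < 0.01),
    SubTask("format_gst_field", lambda s: isinstance(s, str) and s.startswith("GST:")),
    SubTask("improve_supplier_relationship", None),  # open-ended: no verifiable check
]
ready, needs_work = delegable(subtasks)
```

The open-ended sub-task ends up in `needs_work`, which is the signal to break it down further or keep a person in charge of it.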

4. Scalable Coordination

In large agentic webs, you need more than a simple queue. The paper suggests market-like coordination, where agents bid for tasks based on capabilities and objectives, and reputation systems track performance over time. Multi-objective optimisation can juggle cost, speed, safety, and reliability, while privacy-preserving tech helps protect sensitive data in these markets.
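The market-like coordination idea can be illustrated with a single sealed-bid round. This is a sketch, not the paper's mechanism: it filters bids by a reputation floor, then ranks the rest with a weighted sum of normalised cost, speed, reputation and safety, which is one simple way (scalarisation) to handle multiple objectives. The `Bid` fields, weights and agent names are all assumptions.

```python
from dataclasses import dataclass
from typing import Optional


@dataclass
class Bid:
    agent: str
    cost: float         # quoted price, e.g. in AUD
    eta_minutes: float  # promised completion time
    reputation: float   # 0..1, from past performance
    safety: float       # 0..1, hypothetical share of recent runs with no policy violations


# Hypothetical weights; a real system would tune these per task type.
WEIGHTS = {"cost": 0.2, "speed": 0.2, "reputation": 0.3, "safety": 0.3}


def score(bid: Bid, max_cost: float, max_eta: float) -> float:
    """Weighted sum of normalised objectives; higher is better."""
    return (
        WEIGHTS["cost"] * (1 - bid.cost / max_cost)
        + WEIGHTS["speed"] * (1 - bid.eta_minutes / max_eta)
        + WEIGHTS["reputation"] * bid.reputation
        + WEIGHTS["safety"] * bid.safety
    )


def award(bids: list[Bid], min_reputation: float = 0.5) -> Optional[Bid]:
    """Filter by reputation, then award the task to the best weighted score."""
    qualified = [b for b in bids if b.reputation >= min_reputation]
    if not qualified:
        return None
    max_cost = max(b.cost for b in qualified) or 1.0  # avoid dividing by zero
    max_eta = max(b.eta_minutes for b in qualified) or 1.0
    return max(qualified, key=lambda b: score(b, max_cost, max_eta))


bids = [
    Bid("cheap-bot", 1.0, 30, 0.60, 0.70),
    Bid("solid-bot", 3.0, 10, 0.90, 0.95),
    Bid("sketchy-bot", 0.5, 5, 0.30, 0.40),
]
```

In this example the cheapest, fastest bidder never competes because its reputation is below the floor, and the more reliable bidder wins despite costing more.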

5. Systemic Resilience

Finally, the framework focuses on resilience and security. One key idea is Delegation Capability Tokens (DCTs): Ed25519-signed JSON tokens that encode tightly scoped authority, so each agent receives only minimal, task-specific permissions. An agent only gets the precise access it needs, for the time it needs it. This “least privilege” approach limits the blast radius if an agent is compromised or simply makes a bad call.
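The sketch below shows the least-privilege idea behind capability tokens: a signed claim listing exact scopes and an expiry time, checked before every action. One substitution is named plainly: the DCTs described above use Ed25519 signatures, whereas this Python example uses HMAC-SHA256 from the standard library, which means the verifier must share the secret key. The token layout, scope strings and key are hypothetical and not a production format.

```python
import base64
import hashlib
import hmac
import json
import time
from typing import Optional

# Assumption: a secret shared by the token issuer and the gateway that checks tokens.
# HMAC stands in for the Ed25519 signatures described above, only because it ships
# with Python's standard library.
SECRET = b"demo-secret-do-not-use-in-production"


def _sign(payload: bytes) -> str:
    return hmac.new(SECRET, payload, hashlib.sha256).hexdigest()


def issue_token(agent: str, scopes: list[str], ttl_seconds: float,
                now: Optional[float] = None) -> str:
    """Grant an agent exactly these scopes, for ttl_seconds only."""
    now = time.time() if now is None else now
    claims = {"sub": agent, "scopes": sorted(scopes), "exp": now + ttl_seconds}
    payload = json.dumps(claims, sort_keys=True).encode()
    body = base64.urlsafe_b64encode(payload).decode()
    return f"{body}.{_sign(payload)}"


def authorise(token: str, scope: str, now: Optional[float] = None) -> bool:
    """Allow an action only if the token is authentic, unexpired and grants this scope."""
    now = time.time() if now is None else now
    try:
        body, sig = token.rsplit(".", 1)
        payload = base64.urlsafe_b64decode(body.encode())
    except ValueError:  # malformed token (binascii.Error is a ValueError subclass)
        return False
    if not hmac.compare_digest(sig.encode(), _sign(payload).encode()):
        return False  # tampered or forged
    claims = json.loads(payload)
    return now < claims["exp"] and scope in claims["scopes"]


t0 = 1_000_000.0  # fixed clock so the example is reproducible
token = issue_token("agent-C", ["payments:create:max_500_aud"], ttl_seconds=300, now=t0)
```

With this token, agent C can create a capped payment for five minutes and nothing else: a refund request fails because the scope was never granted, and the same request after expiry fails too.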

Together, these pillars reduce systemic risk, such as confused deputy attacks, correlated failures in hyper-efficient networks, and the lack of redundancy that turns local errors into full-blown incidents.

AI assistants vs autonomous agents in your business stack

DeepMind draws a clear line between AI assistants and agents, and this split matters a lot for real-world deployment. Assistants usually operate on narrow, well-defined tasks with close human supervision. Think of a copilot drafting emails, a chatbot helping with FAQs, or a data assistant pulling simple reports.

Autonomous agents go further. They are built to pursue open-ended goals – like “optimise our marketing funnel” or “manage supplier payments” – and take actions across tools and systems with less direct oversight. This is where the cool demos live, but also where the biggest risks and failure modes appear.

According to DeepMind, without intelligent delegation protocols, these autonomous agents are far more likely to create accountability gaps and cascading failures. They may chase sub-goals that look locally optimal but violate broader constraints or ethics. In open-ended tasks, it is also harder to define clear, verifiable completion criteria, which makes liability even fuzzier.

For many Australian organisations, the smart path is to start on the assistant side of this line. Use agentic ideas, but wrap them in strong human-in-the-loop workflows, clear permissions, and robust logging. Intelligent delegation helps here by telling you when to keep a human firmly in control, especially for irreversible or high-impact decisions.
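The rule of thumb here (keep a human in control for irreversible or high-impact decisions, and be cautious where completion is hard to verify) can be written as a small policy function. The `TaskProfile` fields, mode names and dollar threshold are illustrative assumptions that each organisation would set for itself.

```python
from dataclasses import dataclass


@dataclass
class TaskProfile:
    reversible: bool   # can the action be cleanly undone?
    impact_aud: float  # rough worst-case cost if it goes wrong
    verifiable: bool   # does it have a clear, automatic completion check?


# Hypothetical threshold; set per organisation and risk appetite.
HIGH_IMPACT_AUD = 1_000.0


def oversight_mode(task: TaskProfile) -> str:
    """Map a task to 'human_decides', 'human_review' or 'autonomous'."""
    if not task.reversible or task.impact_aud >= HIGH_IMPACT_AUD:
        return "human_decides"  # AI drafts or recommends; a human makes the call
    if not task.verifiable:
        return "human_review"   # AI acts, but a human checks before it counts
    return "autonomous"         # low impact, reversible and automatically checkable
```

Pushing more autonomy into the system then means raising the threshold or making more tasks verifiable, not removing the checks.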

Over time, as your monitoring, verification, and security layers mature, you can safely push more autonomy into the system. Not by “letting go”, but by expanding the range of tasks where your delegation contracts and capability assessments are strong enough to carry the risk, ideally using model-routing strategies similar to those in LYFE AI’s GPT‑5.2 Instant vs Thinking guide.

Practical tips: how AU businesses can apply intelligent AI delegation today

So how do you actually use intelligent delegation without building a research lab inside your company? You do not need to copy every detail from DeepMind’s framework, but you can borrow the core habits and apply them to your AI stack.

- Start with contract-first task design. For every AI-driven workflow, write down clear inputs, expected outputs, and simple checks. If you cannot state how you will verify completion, either redesign the task or keep a human in charge.

- Introduce basic capability assessment. Track which models or tools perform well on which tasks. Use simple reputation metrics: success rate, error rate, time to complete. Route tasks to the best current option instead of hard-coding a single agent, much like comparing options in resources such as GPT‑5.2 vs Gemini 3 Pro or O4‑mini vs O3‑mini analyses.

- Make monitoring and escalation non-negotiable. Build dashboards and alerts for your AI workflows. Define thresholds where a human operator must review, especially for payments, compliance checks, or safety-related actions.

- Apply least privilege for every integration. Give your AI systems only the permissions they need, and time-limit sensitive access where possible. Treat this like you would treat admin rights for staff accounts.

- Invest in skills, not just tools. Avoid pure “set and forget” automation. Train your team to understand how AI decisions are made, what logs mean, and when to override the system. This reduces de-skilling and keeps meaningful human control in place.
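The capability-assessment tip can start as plain bookkeeping. This Python sketch records success rate, error rate and time to complete per task type for each model or tool, then routes to the best current option; options with too few runs are tried first so that new tools get a fair trial. The class names, outcome labels and model names are hypothetical, and ranking by success rate with speed as the tie-breaker is one choice among many.

```python
from collections import defaultdict
from dataclasses import dataclass


@dataclass
class Stats:
    runs: int = 0
    successes: int = 0
    errors: int = 0           # crashes, timeouts, refusals
    total_seconds: float = 0.0

    @property
    def success_rate(self) -> float:
        return self.successes / self.runs if self.runs else 0.0

    @property
    def error_rate(self) -> float:
        return self.errors / self.runs if self.runs else 0.0

    @property
    def avg_seconds(self) -> float:
        return self.total_seconds / self.runs if self.runs else 0.0


class Router:
    """Track per-task metrics for each model or tool and route to the best current option."""

    def __init__(self, min_runs: int = 5):
        self.min_runs = min_runs  # runs needed before an option's metrics are trusted
        self.stats = defaultdict(lambda: defaultdict(Stats))  # task_type -> option -> Stats

    def record(self, task_type: str, option: str, outcome: str, seconds: float) -> None:
        s = self.stats[task_type][option]
        s.runs += 1
        s.total_seconds += seconds
        if outcome == "success":
            s.successes += 1
        elif outcome == "error":
            s.errors += 1
        # any other outcome (e.g. "wrong") counts as a run without success

    def best(self, task_type: str, options: list[str]) -> str:
        table = self.stats[task_type]
        # Give under-tested options a trial before trusting the ranking.
        for opt in options:
            if table[opt].runs < self.min_runs:
                return opt
        return max(options, key=lambda o: (table[o].success_rate, -table[o].avg_seconds))


router = Router(min_runs=2)
router.record("summarise_invoice", "model-a", "success", 4.0)
router.record("summarise_invoice", "model-a", "success", 4.0)
router.record("summarise_invoice", "model-b", "success", 2.0)
router.record("summarise_invoice", "model-b", "error", 9.0)
```

Because routing reads from recorded outcomes rather than a hard-coded choice, swapping in a newer model only requires adding it to the options list.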

Even these simple steps move you from brittle heuristics toward intelligent delegation. You get many of the benefits DeepMind talks about – better accountability, safer automation, clearer liability – without waiting for a perfect, future agentic web to appear, and platforms like LYFE AI’s custom automation services can help make that shift incremental rather than overwhelming.

Conclusion and next steps

DeepMind’s work on intelligent AI delegation offers a clear warning and a roadmap. Fully autonomous agents built on static rules are likely to fail in the messy, changing conditions of real businesses. Without explicit authority transfer, strong verification, and tight permissioning, agentic systems can amplify errors instead of reducing them.

The positive news is that we do not need to wait for perfect theory before acting. By treating delegation as a governance problem – clarifying roles, checks, and escalation paths – you can start building safer, more reliable AI assistants today. As your capability grows, you can then expand into more advanced agentic setups with confidence.

If you want help designing AI workflows that use intelligent delegation principles from day one, consider partnering with a team focused on safe, practical deployment rather than hype. Build your next generation of AI assistants on a foundation that is ready for the real world, not just the demo stage. LYFE AI’s secure, Australian-based team can help, and you can explore options like their professional services and everyday AI assistant offerings, all under clear terms and conditions and a transparent product roadmap.

Frequently Asked Questions

What is intelligent AI delegation according to Google DeepMind?

Intelligent AI delegation is a structured way of handing over tasks to AI agents that includes clear authority, responsibility, and accountability. Instead of just telling an agent to “go do this,” it defines roles, constraints, goals, and how results will be checked so the system can operate safely in messy, changing real-world environments.

Why do many current AI agents fail in the real world?

Most current AI agents rely on brittle, hard‑coded rules and narrow assumptions that only work in controlled environments. When regulations change, APIs break, data shifts, or unexpected edge cases occur, these agents have no principled way to rethink the plan, leading to errors, unsafe behaviour, or complete workflow breakdowns.

What does DeepMind mean by the ‘agentic web’?

The ‘agentic web’ describes a future where many AI agents interact across the internet, planning, acting, and coordinating with each other and humans. Instead of a single chatbot in a browser, you get networks of services delegating tasks, sharing data, and making decisions, which dramatically raises the stakes for safety, reliability, and governance.

How is intelligent AI delegation different from just using autonomous agents?

Naive autonomy typically means giving an agent a broad goal and letting it act with minimal oversight or structure. Intelligent delegation, by contrast, treats AI like a collaborator: you define scopes, permissions, escalation paths, audit mechanisms, and trust-building processes so that autonomy is always bounded, inspectable, and revocable.

How can businesses apply intelligent delegation principles to their AI systems?

Businesses can start by explicitly defining which decisions AI is allowed to make, what data it can access, and when it must escalate to humans. They should also implement monitoring, logging, and feedback loops so that the AI’s actions can be audited, corrected, and improved over time, rather than letting agents operate as opaque black boxes.

Why is intelligent AI delegation important for high-risk areas like finance and healthcare?

In high-stakes domains, brittle agents and unclear responsibility can lead directly to financial loss, regulatory breaches, or patient harm. Intelligent delegation ensures that every AI-driven action is traceable, that authority is clearly bounded, and that safety checks and human oversight are built into the workflow from the start.

How does LYFE AI design assistant-style systems differently from fully autonomous agents?

LYFE AI focuses on assistant-style agents that support human teams instead of replacing them with unchecked autonomy. Their systems are designed around clear delegation contracts—defining roles, guardrails, and review steps—so the AI can reliably help Australian businesses over years, not just perform well in short demos or lab tests.

Can intelligent delegation make AI agents safer without losing efficiency?

Yes, when done well, intelligent delegation actually improves both safety and efficiency because the AI spends less time failing on tasks it shouldn’t handle in the first place. By matching tasks to the right combination of humans and agents, and using clear escalation rules, organisations reduce rework and errors while still getting automation benefits.

What are examples of good boundaries or constraints for an AI agent in a business?

Useful boundaries include transaction limits, approval requirements for sensitive actions, restricted data access, and predefined scenarios where the agent must hand off to a human. For example, an AI might be allowed to draft contracts or emails and schedule tasks autonomously, but need human sign-off for legal commitments or financial transfers.
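Boundaries like these are easiest to audit when they live in one explicit policy rather than in prompts. The sketch below encodes the example from this answer; the limit, action names and data scopes are hypothetical. One assumption is made explicit: transfers above the cap are refused outright, while smaller transfers still need human sign-off.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Boundaries:
    max_transfer_aud: float = 500.0  # hypothetical transaction limit
    approval_required: frozenset = frozenset({"sign_contract", "transfer_funds"})
    allowed_data: frozenset = frozenset({"crm:contacts", "calendar"})
    autonomous_actions: frozenset = frozenset({"draft_email", "draft_contract", "schedule_task"})


def check(action: str, b: Boundaries, amount_aud: float = 0.0,
          data: frozenset = frozenset()) -> str:
    """Return 'allow', 'needs_human_approval', 'deny' or 'handoff_to_human'."""
    if not data <= b.allowed_data:
        return "deny"  # touches data outside the agent's scope
    if action == "transfer_funds" and amount_aud > b.max_transfer_aud:
        return "deny"  # over the transaction limit, even with approval
    if action in b.approval_required:
        return "needs_human_approval"  # legal commitments, financial transfers
    if action in b.autonomous_actions:
        return "allow"
    return "handoff_to_human"  # anything not explicitly listed goes to a person


policy = Boundaries()
```

Defaulting unlisted actions to a human handoff, rather than allowing them, keeps new or unexpected behaviour inside the review path until someone decides otherwise.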

How can LYFE AI help my organisation implement intelligent AI delegation?

LYFE AI works with organisations to map out their processes, identify where AI can safely take on work, and design the delegation rules that govern those agents. They then build and deploy assistant-style systems with monitoring, audit trails, and ongoing optimisation so that the AI remains aligned with business goals and changing real-world conditions.